Fatemeh_Hadaeghi

@fatemehhadaeghi.bsky.social

100 followers

180 following

23 posts

NeuroAI Researcher @ICNS_Hamburg, PhD in Biomedical Engineering

Reposted by Fatemeh_Hadaeghi

Shrey Dixit

@shreydixit.bsky.social

· Jun 25

Who Does What in Deep Learning? Multidimensional Game-Theoretic Attribution of Function of Neural Units

Neural networks now generate text, images, and speech with billions of parameters, producing a need to know how each neural unit contributes to these high-dimensional outputs. Existing explainable-AI ...

arxiv.org

Reposted by Fatemeh_Hadaeghi

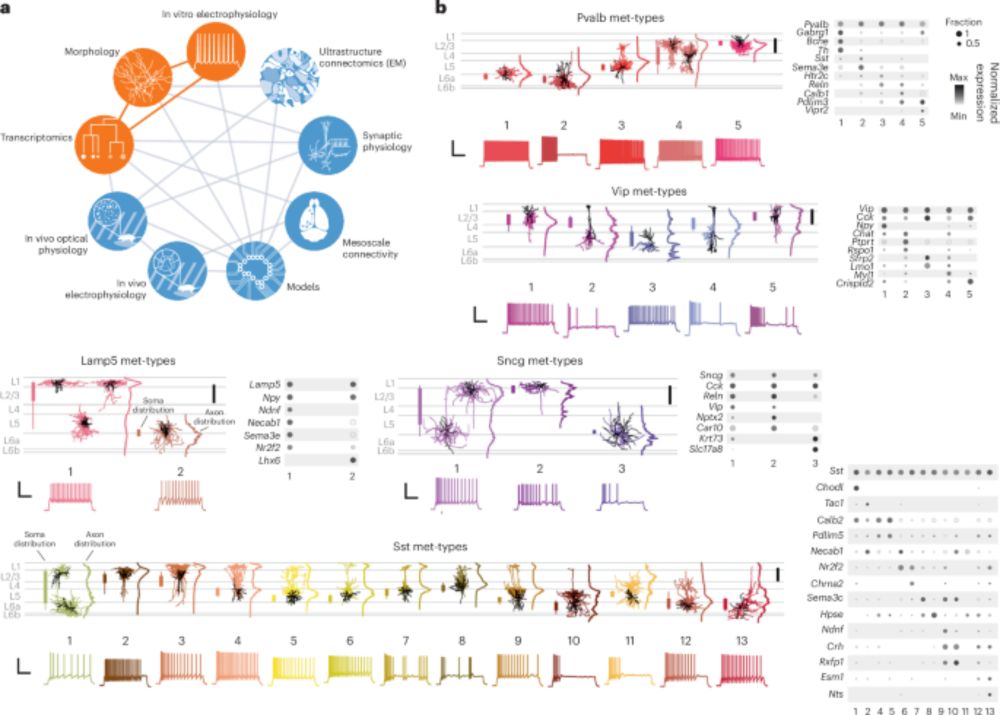

Nature Neuroscience

@natneuro.nature.com

· Apr 11

Integrating multimodal data to understand cortical circuit architecture and function - Nature Neuroscience

This paper discusses how experimental and computational studies integrating multimodal data, such as RNA expression, connectivity and neural activity, are advancing our understanding of the architectu...

www.nature.com

Reposted by Fatemeh_Hadaeghi