Shrey Dixit

@shreydixit.bsky.social

47 followers

82 following

17 posts

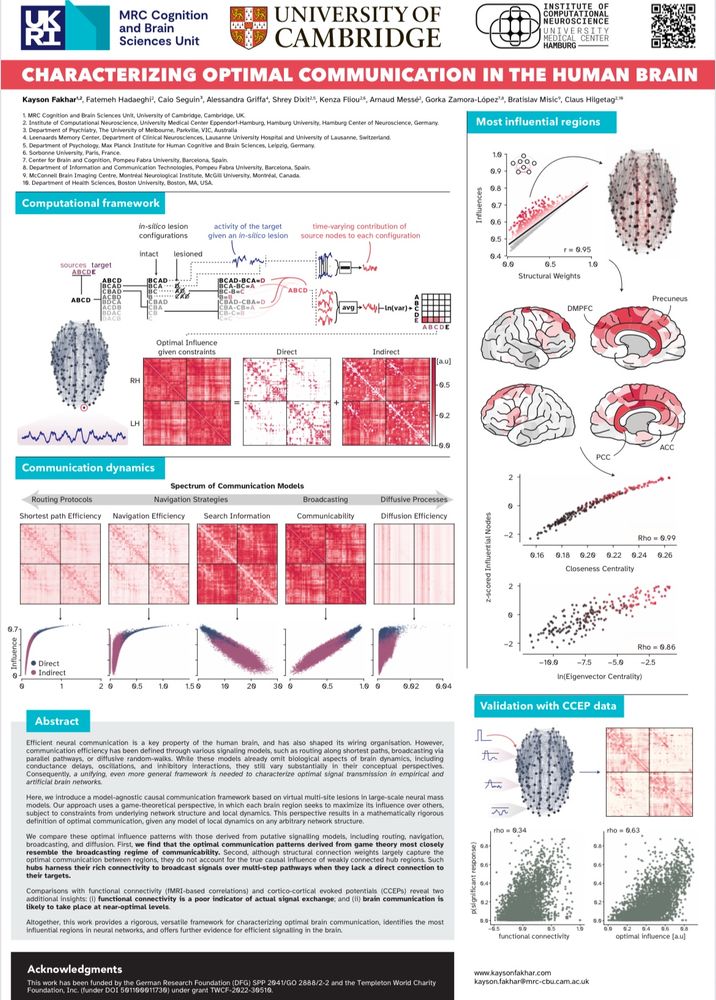

Doctoral Researcher doing NeuroAI at the Max Planck Institute of Human Cognitive and Brain Sciences

Posts

Pinned

Shrey Dixit

@shreydixit.bsky.social

· Jun 25

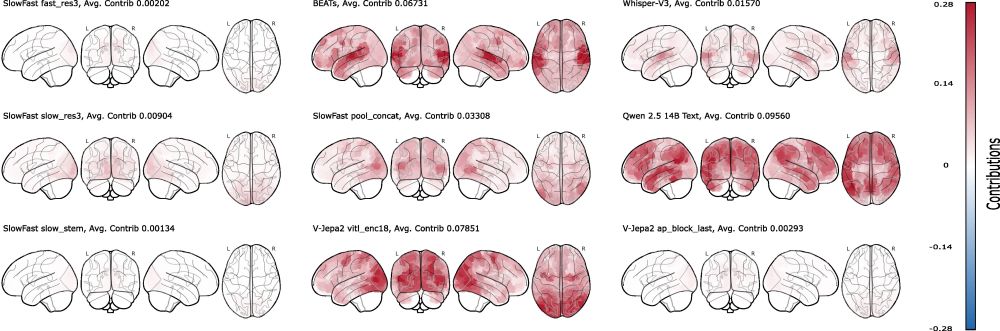

Who Does What in Deep Learning? Multidimensional Game-Theoretic Attribution of Function of Neural Units

Neural networks now generate text, images, and speech with billions of parameters, producing a need to know how each neural unit contributes to these high-dimensional outputs. Existing explainable-AI ...

arxiv.org

Reposted by Shrey Dixit

Qiaoli Huang

@qiaoli-huang.bsky.social

· Aug 26

Efficient coding in working memory is adapted to the structure of the environment

Working memory (WM) relies on efficient coding strategies to overcome its limited capacity, yet how the brain adaptively organizes WM representations to maximize coding efficiency based on environment...

www.biorxiv.org

Reposted by Shrey Dixit

Shrey Dixit

@shreydixit.bsky.social

· Jul 31

Shrey Dixit

@shreydixit.bsky.social

· Jul 31

Shrey Dixit

@shreydixit.bsky.social

· Jul 29

Reposted by Shrey Dixit

Brad Aimone

@jbimaknee.bsky.social

· Jul 29

Neuromorphic Computing: A Theoretical Framework for Time, Space, and Energy Scaling

Neuromorphic computing (NMC) is increasingly viewed as a low-power alternative to conventional von Neumann architectures such as central processing units (CPUs) and graphics processing units (GPUs), h...

www.arxiv.org

Shrey Dixit

@shreydixit.bsky.social

· Jul 24

Reposted by Shrey Dixit

Janis Keck

@keckjanis.bsky.social

· Jun 25

Impact of symmetry in local learning rules on predictive neural representations and generalization in spatial navigation

Author summary: The hippocampus is a brain region which plays a crucial role in spatial navigation for both animals and humans. Contemporarily, it’s thought to store predictive representations of the e...

doi.org