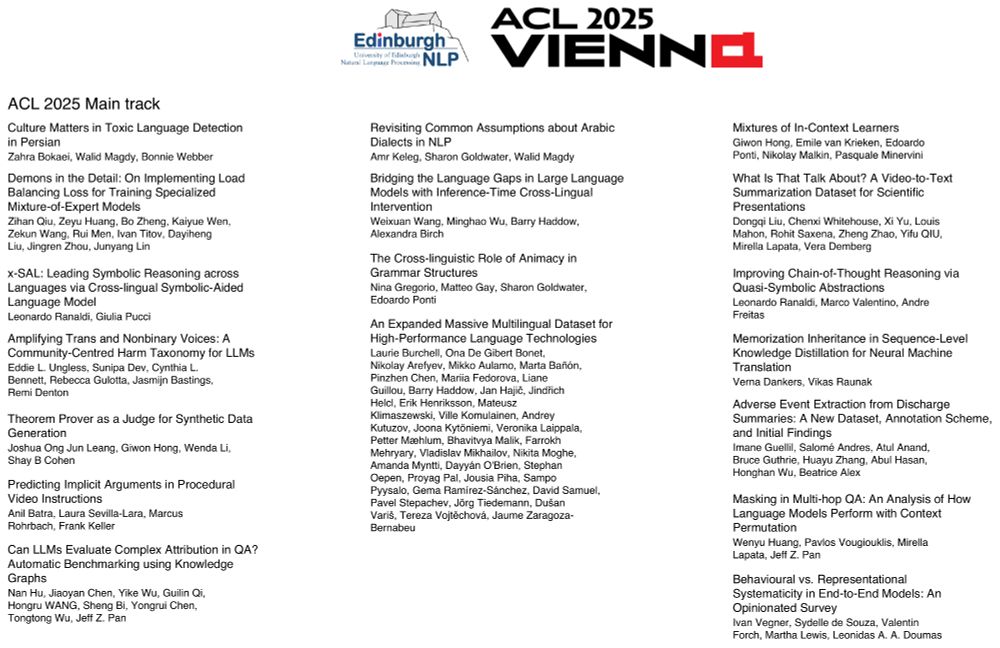

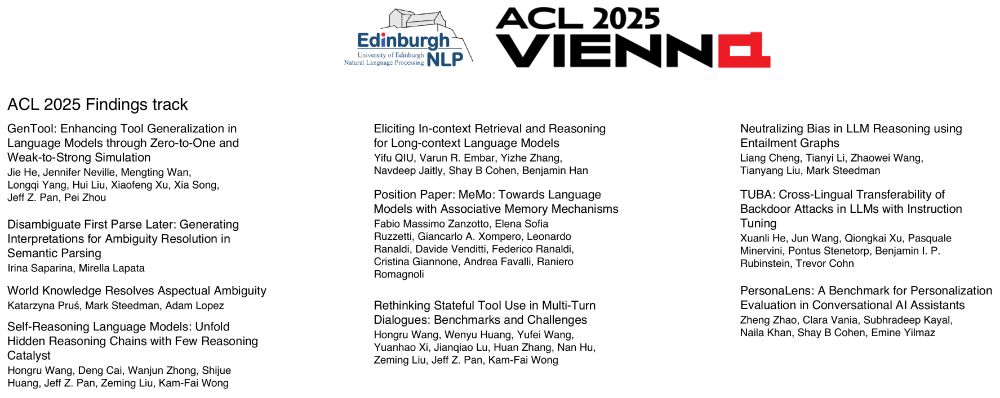

Pasquale Minervini

@neuralnoise.com

5.5K followers

4.8K following

170 posts

Researcher in ML/NLP at the University of Edinburgh (faculty at Informatics and EdinburghNLP), Co-Founder/CTO at www.miniml.ai, ELLIS (@ELLIS.eu) Scholar, Generative AI Lab (GAIL, https://gail.ed.ac.uk/) Fellow -- www.neuralnoise.com, he/they

Posts

Media

Videos

Starter Packs

Pinned

Reposted by Pasquale Minervini

Reposted by Pasquale Minervini

Pasquale Minervini

@neuralnoise.com

· Aug 1

Reposted by Pasquale Minervini

Scott McGrath

@smcgrath.phd

· Jul 23

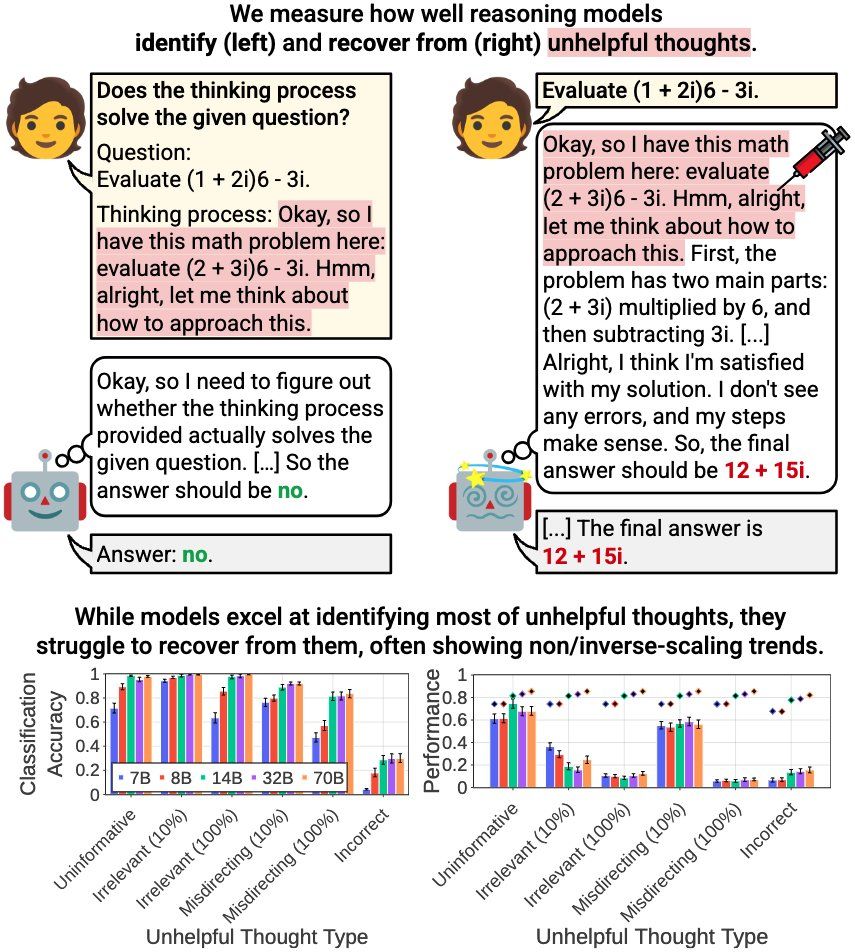

Anthropic researchers discover the weird AI problem: Why thinking longer makes models dumber

Anthropic research reveals AI models perform worse with extended reasoning time, challenging industry assumptions about test-time compute scaling in enterprise deployments.

venturebeat.com

Reposted by Pasquale Minervini

Dan Snow

@thehistoryguy.bsky.social

· Jul 26

Reposted by Pasquale Minervini

Emile van Krieken

@emilevankrieken.com

· Jul 24

Reposted by Pasquale Minervini

Reposted by Pasquale Minervini

Reposted by Pasquale Minervini

Naomi Saphra

@nsaphra.bsky.social

· Jun 12

Pasquale Minervini

@neuralnoise.com

· Jul 22

Pasquale Minervini

@neuralnoise.com

· Jul 21

Reposted by Pasquale Minervini

Steven Strogatz

@stevenstrogatz.com

· Jul 4

Pasquale Minervini

@neuralnoise.com

· Jul 11

Reposted by Pasquale Minervini

Reposted by Pasquale Minervini

Reposted by Pasquale Minervini

Reposted by Pasquale Minervini

Reposted by Pasquale Minervini

Pasquale Minervini

@neuralnoise.com

· Jun 4