Ido Aizenbud

@idoai.bsky.social

390 followers

520 following

37 posts

Computational Neuroscience PhD Student

Pinned

Reposted by Ido Aizenbud

Ido Aizenbud

@idoai.bsky.social

· Aug 2

Ido Aizenbud

@idoai.bsky.social

· Aug 1

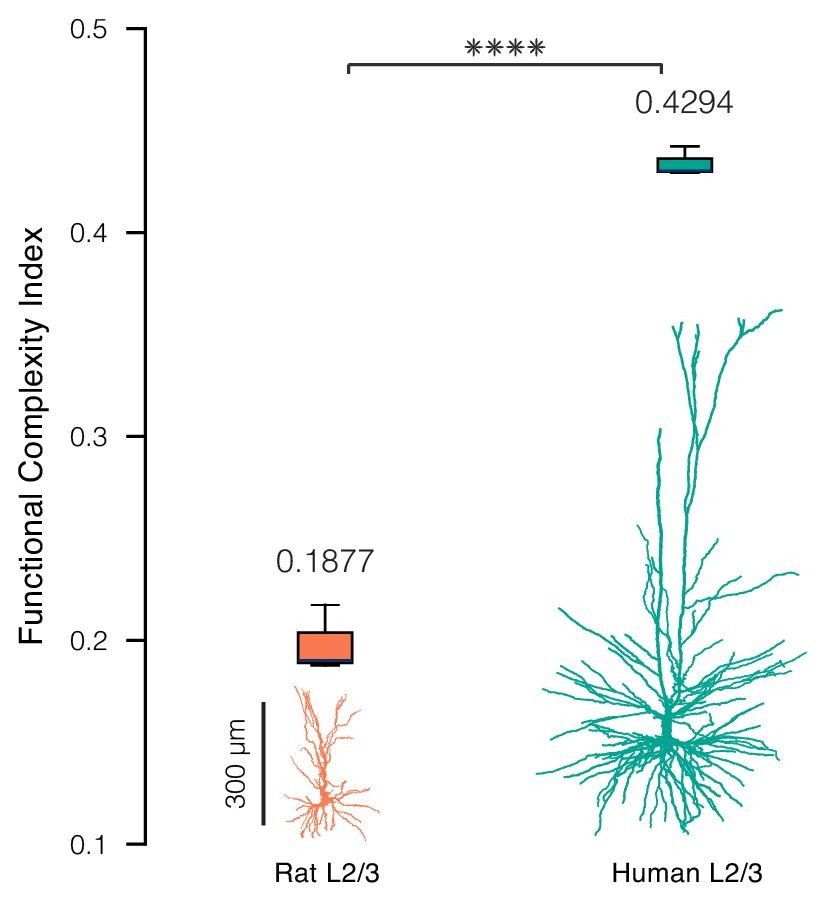

Frontiers | Biophysical and computational insights from modeling human cortical pyramidal neurons

The human brain’s remarkable computational power enables parallel processing of vast information, integrating sensory inputs, memories, and emotions for rapi...

doi.org

Ido Aizenbud

@idoai.bsky.social

· Apr 16

Reposted by Ido Aizenbud

Toviah Moldwin

@tmoldwin.bsky.social

· Feb 19

The calcitron: A simple neuron model that implements many learning rules via the calcium control hypothesis

Author summary Researchers have developed various learning rules for artificial neural networks, but it is unclear how these rules relate to the brain’s natural processes. This study focuses on the ca...

journals.plos.org

Ido Aizenbud

@idoai.bsky.social

· Jan 8

Ido Aizenbud

@idoai.bsky.social

· Dec 31

Ido Aizenbud

@idoai.bsky.social

· Dec 29

Ido Aizenbud

@idoai.bsky.social

· Dec 27
