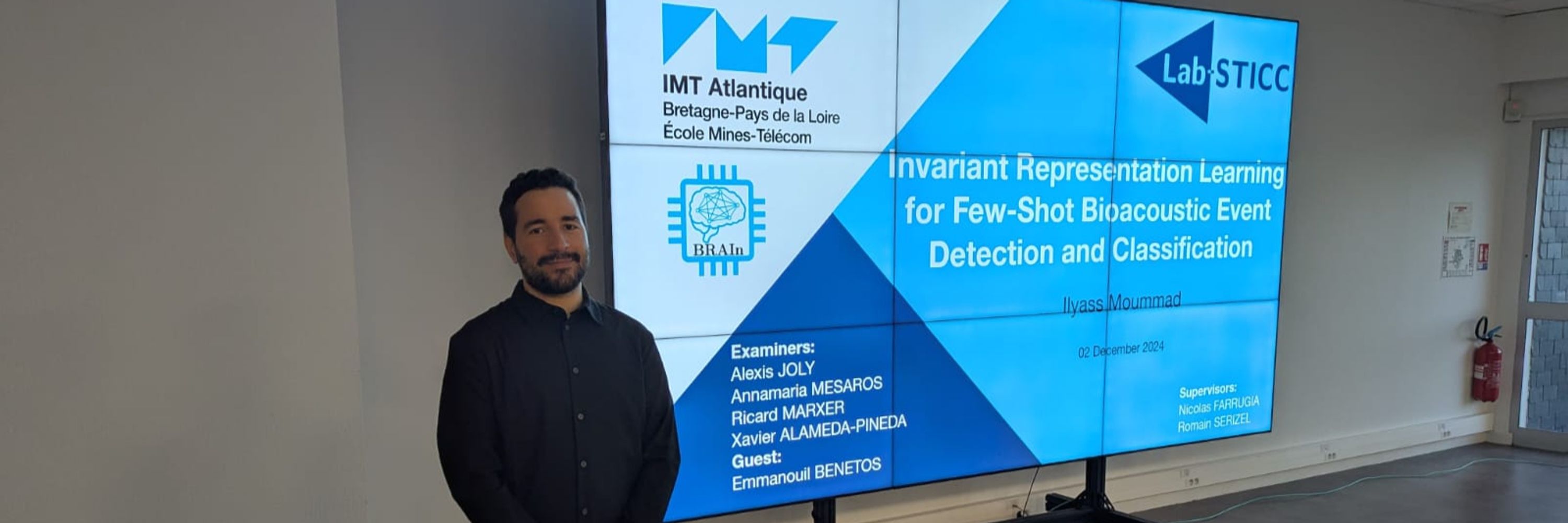

Ilyass Moummad

@ilyassmoummad.bsky.social

370 followers

330 following

55 posts

Postdoctoral Researcher @ Inria Montpellier (IROKO, Pl@ntNet)

SSL for plant images

Interested in Computer Vision, Natural Language Processing, Machine Listening, and Biodiversity Monitoring

Website: ilyassmoummad.github.io

Reposted by Ilyass Moummad

David Picard

@davidpicard.bsky.social

· Jun 3

Elucidating the representation of images within an unconditional diffusion model denoiser

Generative diffusion models learn probability densities over diverse image datasets by estimating the score with a neural network trained to remove noise. Despite their remarkable success in generatin...

arxiv.org

Ilyass Moummad

@ilyassmoummad.bsky.social

· May 20