Itay Itzhak @ COLM 🍁

@itay-itzhak.bsky.social

25 followers

68 following

14 posts

NLProc, deep learning, and machine learning. Ph.D. student @ Technion and The Hebrew University.

https://itay1itzhak.github.io/

Reposted by Itay Itzhak @ COLM 🍁

Martin Tutek

@mtutek.bsky.social

· Feb 21

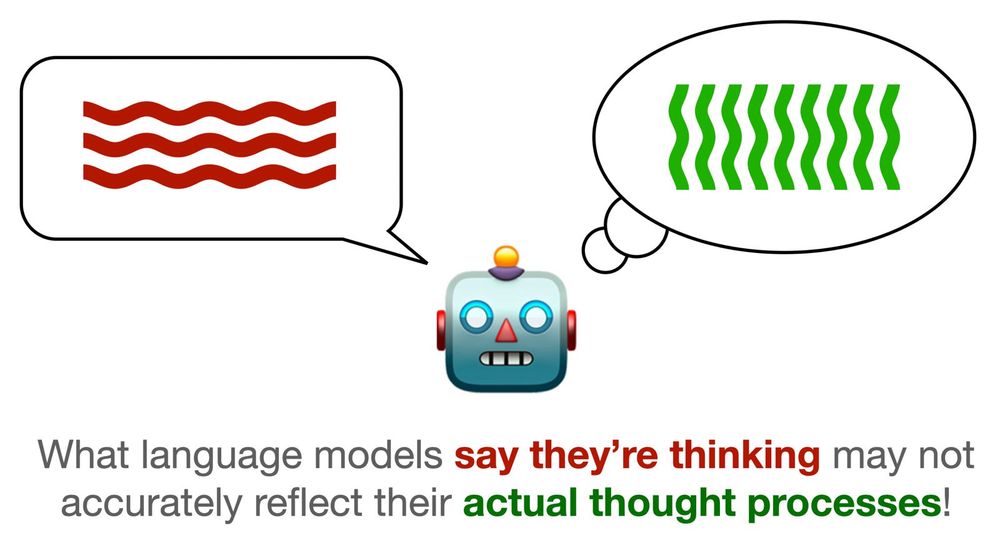

Measuring Faithfulness of Chains of Thought by Unlearning Reasoning Steps

When prompted to think step-by-step, language models (LMs) produce a chain of thought (CoT), a sequence of reasoning steps that the model supposedly used to produce its prediction. However, despite mu...

arxiv.org
