Yonatan Belinkov ✈️ COLM2025

@boknilev.bsky.social

110 followers

390 following

19 posts

Assistant professor of computer science at Technion; visiting scholar at @KempnerInst 2025-2026

https://belinkov.com/

Reposted by Yonatan Belinkov ✈️ COLM2025

BlackboxNLP

@blackboxnlp.bsky.social

· Jul 9

Reposted by Yonatan Belinkov ✈️ COLM2025

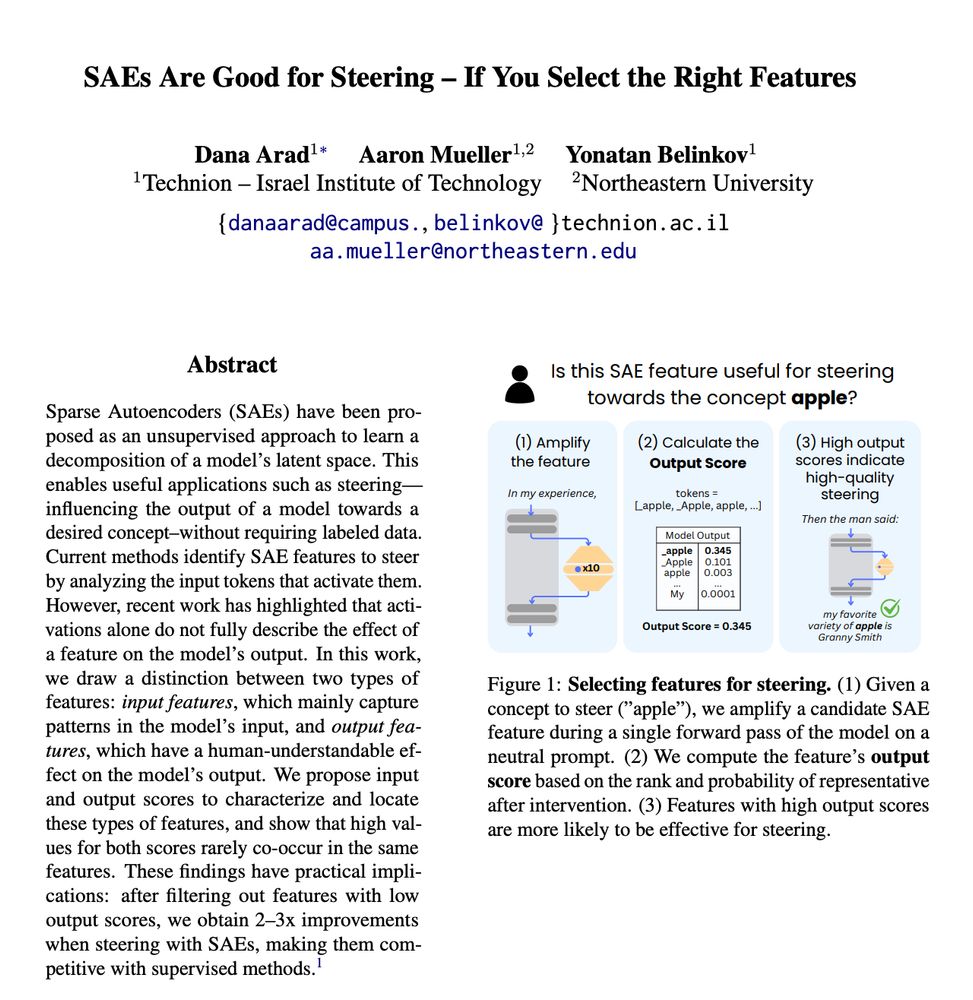

Aaron Mueller

@amuuueller.bsky.social

· Apr 23