Dana Arad

@danaarad.bsky.social

58 followers

230 following

23 posts

NLP Researcher | CS PhD Candidate @ Technion

Posts

Media

Videos

Starter Packs

Pinned

Reposted by Dana Arad

Dana Arad

@danaarad.bsky.social

· Jul 9

Reposted by Dana Arad

Reposted by Dana Arad

Reposted by Dana Arad

Reposted by Dana Arad

Dana Arad

@danaarad.bsky.social

· May 28

Dana Arad

@danaarad.bsky.social

· May 28

Dana Arad

@danaarad.bsky.social

· May 27

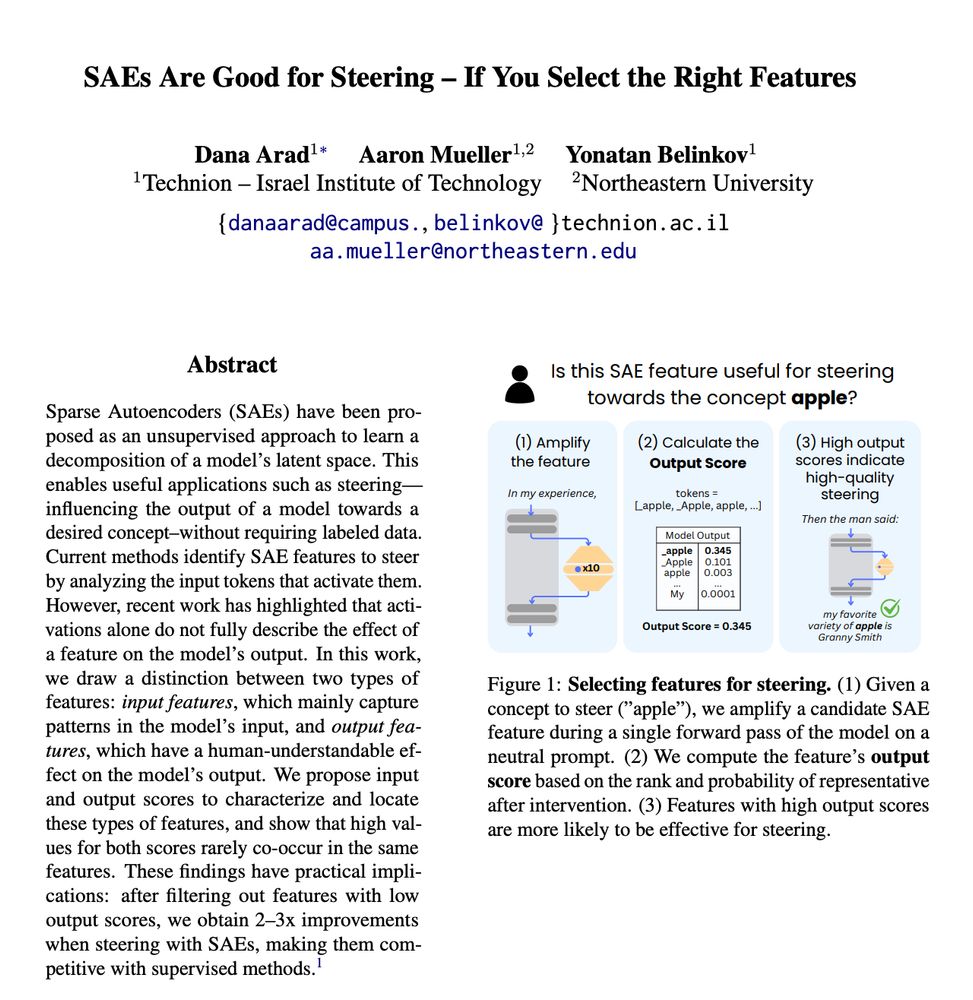

SAEs Are Good for Steering -- If You Select the Right Features

Sparse Autoencoders (SAEs) have been proposed as an unsupervised approach to learn a decomposition of a model's latent space. This enables useful applications such as steering - influencing the output...

arxiv.org