Jorge Morales

@jorge-morales.bsky.social

3.8K followers

3.2K following

650 posts

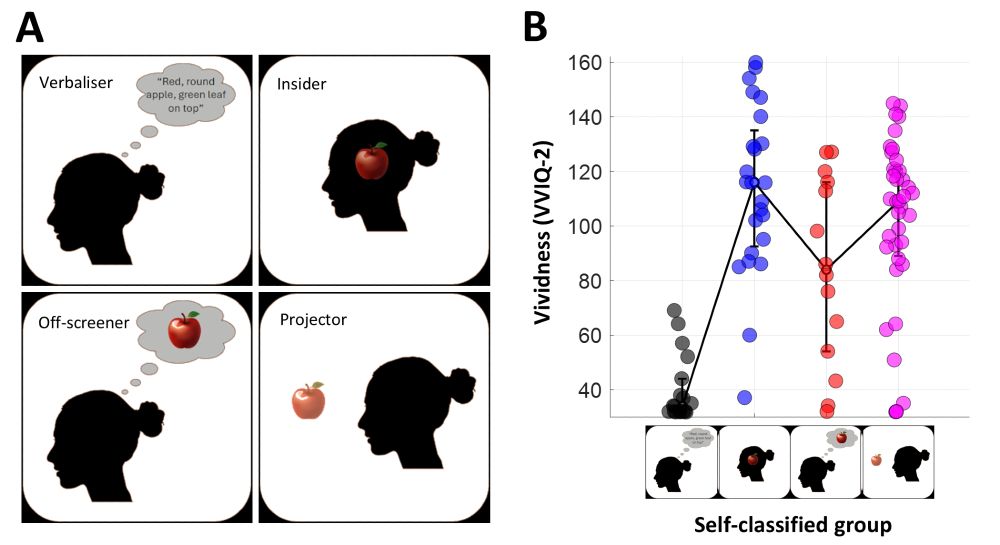

I'm a philosopher, psychologist, and neuroscientist studying vision, mental imagery, consciousness, and introspection. As S.S. Stevens said, "there are numerous pitfalls in this business." https://www.subjectivitylab.org

Reposted by Jorge Morales
