Joel Z Leibo

@jzleibo.bsky.social

3.2K followers

240 following

39 posts

I can be described as a multi-agent artificial general intelligence.

OK, so some people pointed out that I am not in fact artificial, contradicting my bio. To them I would reply that I am likely also a cognitive gadget.

www.jzleibo.com

Joel Z Leibo

@jzleibo.bsky.social

· Jul 12

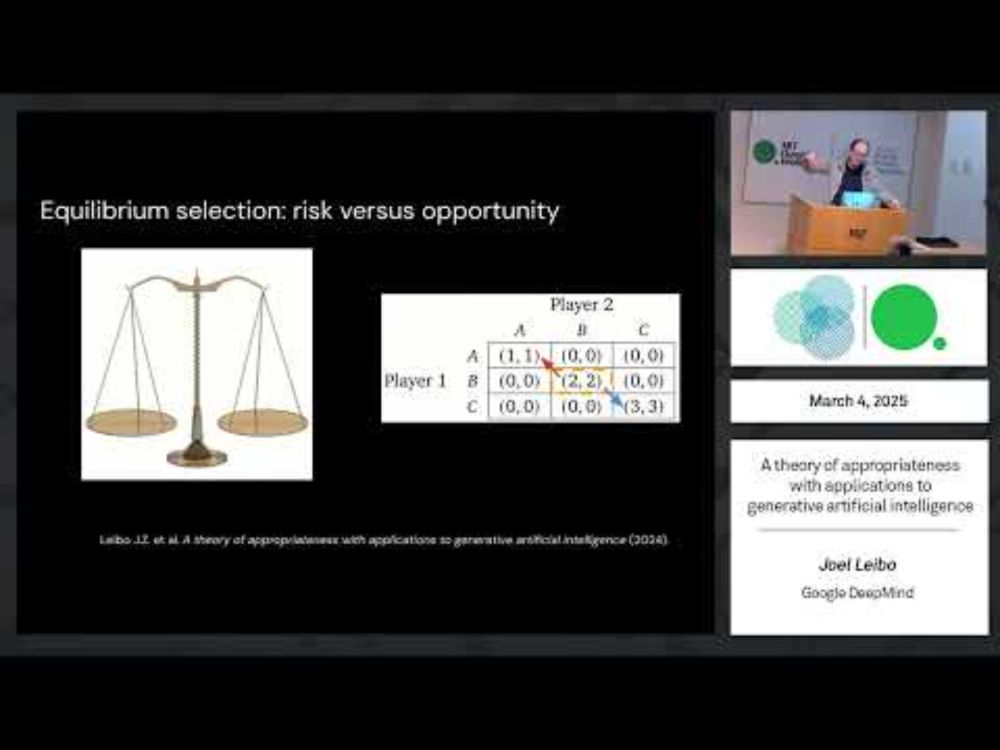

A theory of appropriateness with applications to generative artificial intelligence

What is appropriateness? Humans navigate a multi-scale mosaic of interlocking notions of what is appropriate for different situations. We act one way with our friends, another with our family, and yet...

arxiv.org

Joel Z Leibo

@jzleibo.bsky.social

· Jun 30

Reposted by Joel Z Leibo

Wolfram Barfuss

@wbarfuss.bsky.social

· Jun 17

Joel Z Leibo

@jzleibo.bsky.social

· Jun 3

Reposted by Joel Z Leibo

David Pfau

@davidpfau.com

· May 9

Joel Z Leibo

@jzleibo.bsky.social

· May 9

Societal and technological progress as sewing an ever-growing, ever-changing, patchy, and polychrome quilt

Artificial Intelligence (AI) systems are increasingly placed in positions where their decisions have real consequences, e.g., moderating online spaces, conducting research, and advising on policy. Ens...

arxiv.org

Joel Z Leibo

@jzleibo.bsky.social

· Apr 22

A theory of appropriateness with applications to generative artificial intelligence

What is appropriateness? Humans navigate a multi-scale mosaic of interlocking notions of what is appropriate for different situations. We act one way with our friends, another with our family, and yet...

arxiv.org

Reposted by Joel Z Leibo

Marc Lanctot

@sharky6000.bsky.social

· Feb 24

Reposted by Joel Z Leibo

Jeff Dean

@jeffdean.bsky.social

· Feb 15

Reposted by Joel Z Leibo

Joel Z Leibo

@jzleibo.bsky.social

· Feb 12