Jonathan Kadmon

@kadmonj.bsky.social

120 followers

140 following

15 posts

Assistant professor of theoretical neuroscience @ELSCbrain. My opinions are deterministic activity patterns in my neocortex. http://neuro-theory.org

Jonathan Kadmon

@kadmonj.bsky.social

· Mar 23

Training Large Neural Networks With Low-Dimensional Error Feedback

Training deep neural networks typically relies on backpropagating high-dimensional error signals, a computationally intensive process with little evidence supporting its implementation in the brain. Ho...

arxiv.org

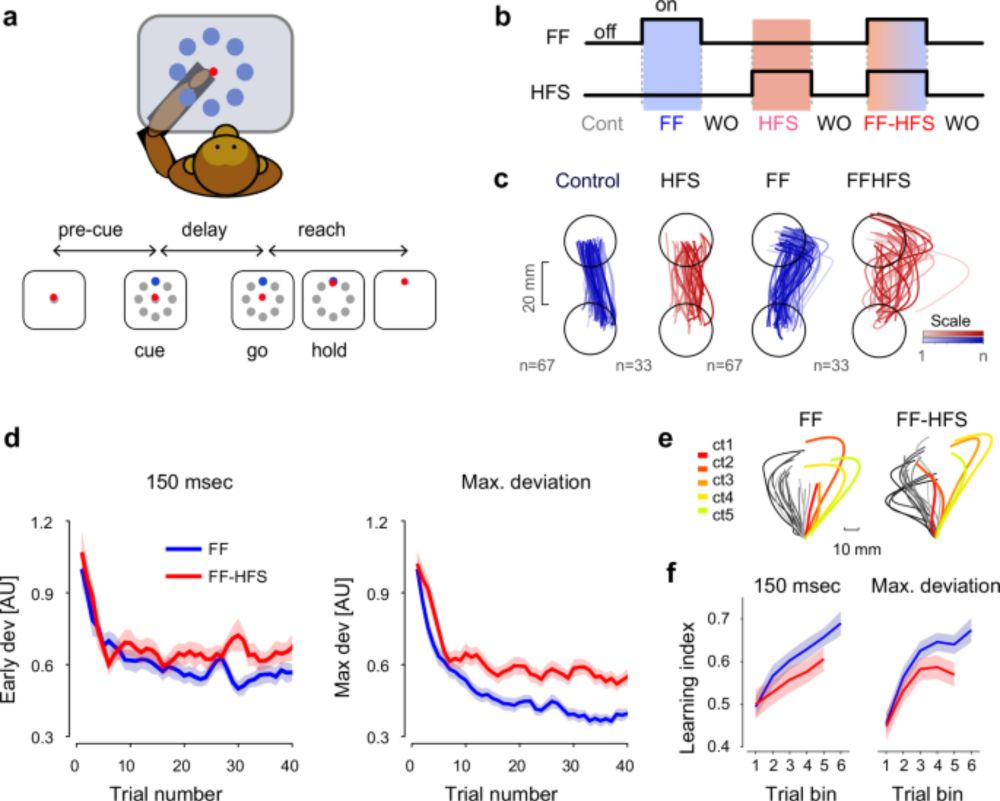

Reposted by Jonathan Kadmon

Hugo Ninou

@hugoninou.bsky.social

· Mar 22

Cerebellar output shapes cortical preparatory activity during motor adaptation - Nature Communications

The functional role of the cerebellum in motor adaptation is not fully understood. The authors show that cerebellar signals act as low-dimensional feedback which constrains the structure of the preparator...

www.nature.com

Jonathan Kadmon

@kadmonj.bsky.social

· Feb 17

Neural mechanisms of flexible perceptual inference

What seems obvious in one context can take on an entirely different meaning if that context shifts. While context-dependent inference has been widely studied, a fundamental question remains: how does ...

www.biorxiv.org
