open.substack.com/pub/heteroto...?

Synthetic Labor:

"Synthetic labor is the autonomous execution of cognitive or physical tasks by non-human systems to generate economic value."

open.substack.com/pub/techneai...

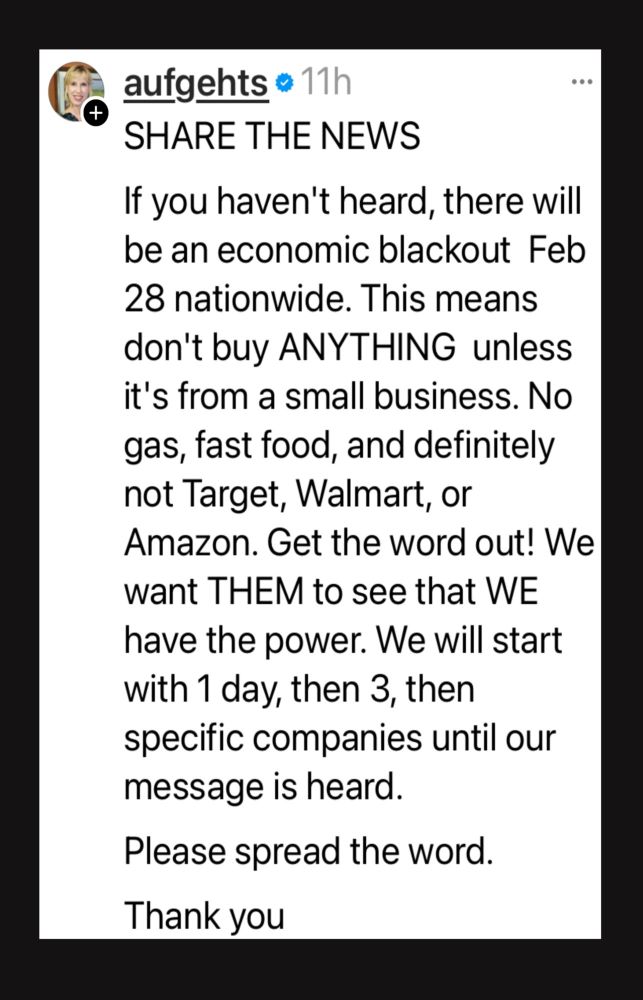

"Beliefs are just held.

"In a moment like this, I can’t just hold beliefs."

substack.com/home/post/p-...

This year, I've been fortunate enough to present a series of talks on AI at Portal Innovations, LLC.

sloanreview.mit.edu/article/a-fr...

www.youtube.com/watch?v=yQ2f...

substack.com/home/post/p-...

"…successful AI adoption will be a determining factor in national strength." A sobering vision. arxiv.org/pdf/2503.05628

www.linkedin.com/pulse/future...

Is nuclear arms control applicable to AI? McNamara argues that comparing AI to nuclear weapons is insightful but incomplete and not quite right, yet still useful if applied with caution. www.heterotopia.ai/p/a-cautiona...
