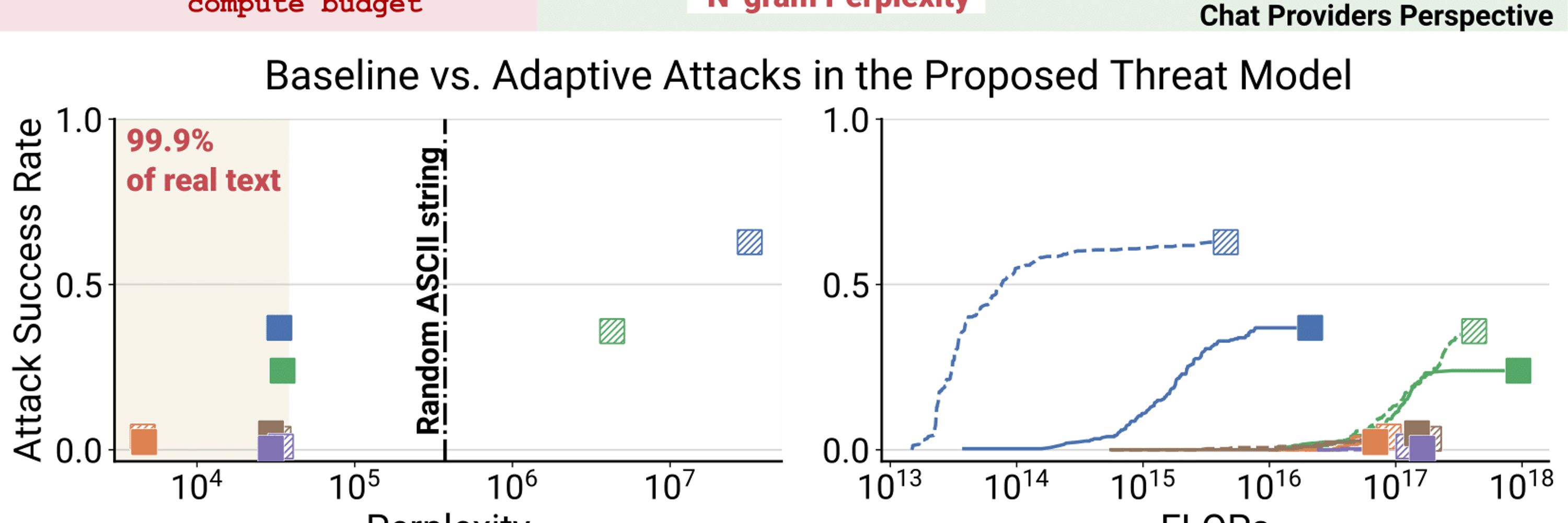

🔍 ASIDE boosts prompt injection robustness without safety-tuning: we simply rotate embeddings of marked tokens by 90° during instruction-tuning and inference.

👇 code & docs 👇
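The post only says that the embeddings of marked (data) tokens are rotated by 90°. As a rough illustration, and not the authors' released code, here is a minimal pure-Python sketch. It assumes the rotation acts on consecutive pairs of embedding dimensions, mapping each pair (a, b) to (-b, a). `rotate_90`, `embed_with_roles` and `dot` are hypothetical names. The idea it shows: a 90° rotation keeps a vector's norm but makes it orthogonal to the original, so the same token gets a distinct representation when it appears as data rather than as an instruction.

```python
from typing import List, Sequence

Vector = List[float]


def rotate_90(vec: Sequence[float]) -> Vector:
    """Rotate an embedding by 90 degrees in each 2-D plane (dims 0-1, 2-3, ...).

    Assumption for illustration: pairs (a, b) map to (-b, a).
    Requires an even embedding dimension.
    """
    if len(vec) % 2 != 0:
        raise ValueError("embedding dimension must be even")
    out: Vector = []
    for i in range(0, len(vec), 2):
        a, b = vec[i], vec[i + 1]
        out.extend([-b, a])
    return out


def embed_with_roles(embeddings: Sequence[Sequence[float]],
                     is_data: Sequence[bool]) -> List[Vector]:
    """Hypothetical helper: keep instruction tokens as-is, rotate data tokens."""
    if len(embeddings) != len(is_data):
        raise ValueError("need one role flag per token")
    return [rotate_90(e) if d else list(e) for e, d in zip(embeddings, is_data)]


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    """Plain dot product, used to check orthogonality and norm preservation."""
    return sum(x * y for x, y in zip(u, v))


if __name__ == "__main__":
    tokens = [[1.0, 2.0, 3.0, 4.0], [0.5, -0.5, 1.0, 2.0]]
    roles = [False, True]  # first token is an instruction, second is data
    print(embed_with_roles(tokens, roles))
    v = tokens[0]
    print(dot(v, rotate_90(v)))  # a rotated vector is orthogonal to the original
```

Applying `rotate_90` four times returns the original vector, and the sketch changes only the input embeddings, which matches the "from layer 0" framing of the post; how the real model marks tokens and chooses the rotation planes is not specified here.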

✅ ASIDE = architecturally separating instructions and data in LLMs from layer 0

🔍 +12–44 pp↑ separation, no utility loss

📉 lowers prompt‑injection attack success rate (ASR) (without safety tuning!)

🚀 Talk: Hall 4 #6, 28 Apr, 4:45

We’re building cutting-edge, open-source AI tutoring models for high-quality, adaptive learning for all pupils with support from the Hector Foundation.

👉 forms.gle/sxvXbJhZSccr...

1. Not only GPT-4 but also other frontier LLMs have memorized the same set of NYT articles from the lawsuit.

2. Very large models, particularly with >100B parameters, have memorized significantly more.

🧵1/n