@majhas.bsky.social

120 followers

110 following

11 posts

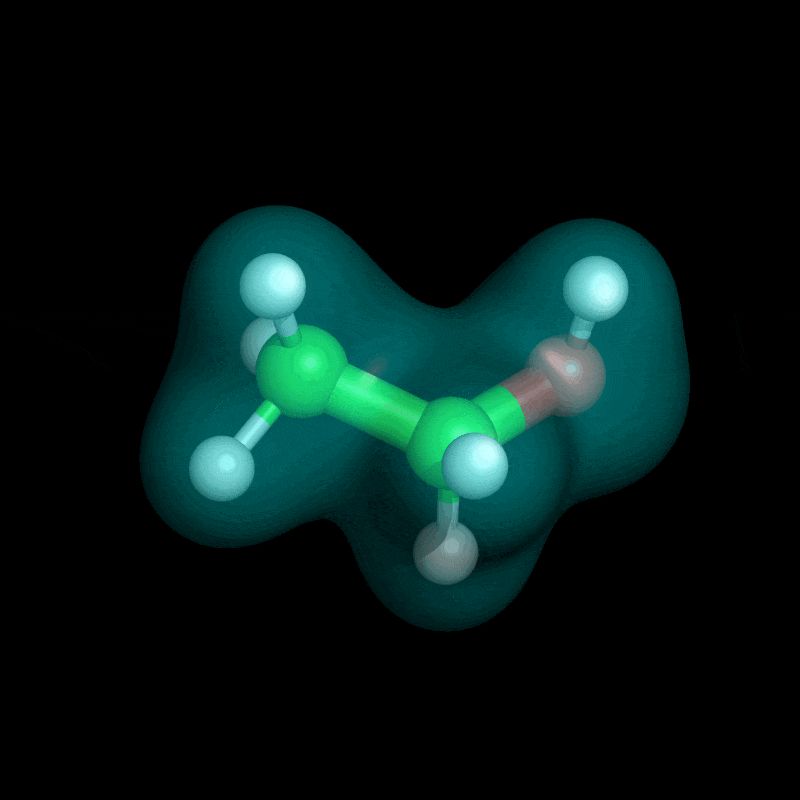

PhD Student at Mila & University of Montreal | Generative modeling, sampling, molecules

majhas.github.io
