Avery HW Ryoo

@averyryoo.bsky.social

1.1K followers

300 following

49 posts

i like generative models, science, and Toronto sports teams

phd @ mila/udem, prev. @ uwaterloo

averyryoo.github.io 🇨🇦🇰🇷

Pinned

Reposted by Avery HW Ryoo

Avery HW Ryoo

@averyryoo.bsky.social

· Jul 12

Avery HW Ryoo

@averyryoo.bsky.social

· Jun 6

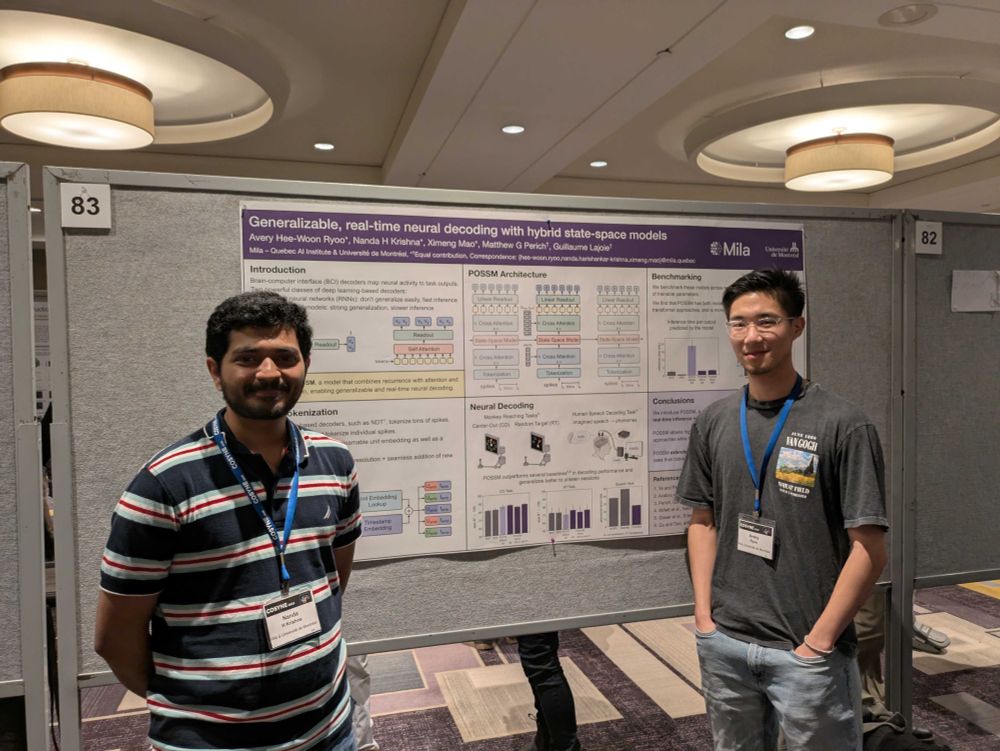

Generalizable, real-time neural decoding with hybrid state-space models

Real-time decoding of neural activity is central to neuroscience and neurotechnology applications, from closed-loop experiments to brain-computer interfaces, where models are subject to strict latency...

arxiv.org

Reposted by Avery HW Ryoo

Charlotte Volk

@charlottevolk.bsky.social

· Mar 27

Reposted by Avery HW Ryoo

Avery HW Ryoo

@averyryoo.bsky.social

· Mar 27

Reposted by Avery HW Ryoo

Blake Richards

@tyrellturing.bsky.social

· Mar 23