Mehdi Azabou

@mehdiazabou.bsky.social

510 followers

54 following

36 posts

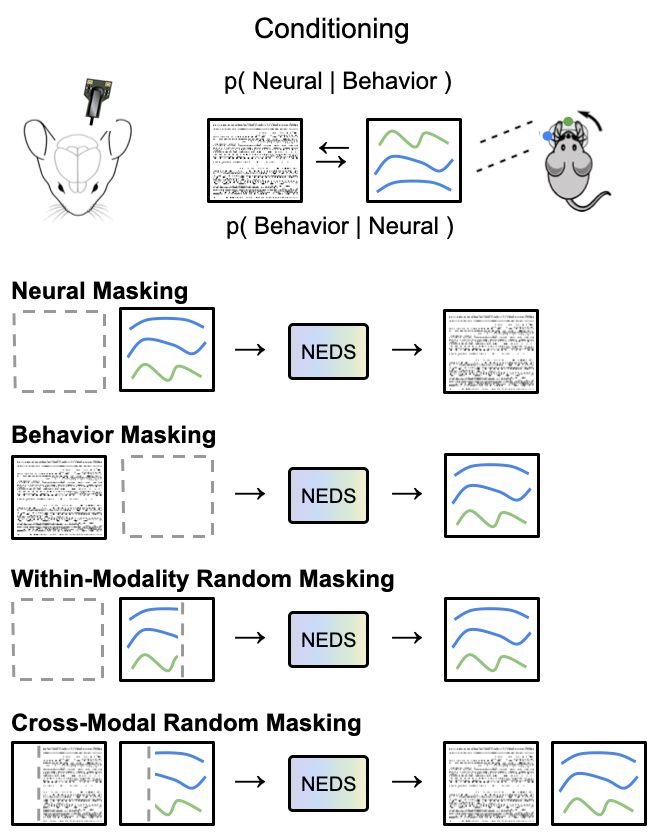

Working on neuro-foundation models

Postdoc at Columbia | ML PhD, Georgia Tech | https://www.mehai.dev/

Reposted by Mehdi Azabou

Mehdi Azabou

@mehdiazabou.bsky.social

· Apr 25

Reposted by Mehdi Azabou

Memming Park

@memming.bsky.social

· Apr 3

Cosyne 2025 Tutorial - Eva Dyer - Foundations of Transformers in Neuroscience

Cosyne 2025 Tutorial Session Sponsored by the Simons Foundation

TOPIC: Foundations of Transformers in Neuroscience

SPEAKER: Eva Dyer, Georgia Institute of Technology

DATE: 27 March 2025

In this tutorial, we’ll introduce the fundamentals of transformers and their applications in neuroscience usin

youtu.be

Mehdi Azabou

@mehdiazabou.bsky.social

· Mar 31