Manfred Diaz

@manfreddiaz.bsky.social

2.3K followers

750 following

39 posts

Ph.D. Candidate at Mila and the University of Montreal, interested in AI/ML connections with economics, game theory, and social choice theory.

https://manfreddiaz.github.io

Manfred Diaz

@manfreddiaz.bsky.social

· Aug 8

Manfred Diaz

@manfreddiaz.bsky.social

· Jun 16

Manfred Diaz

@manfreddiaz.bsky.social

· Jun 5

Reposted by Manfred Diaz

Marc Lanctot

@sharky6000.bsky.social

· May 18

A Tutorial on General Evaluation of AI Agents

Artificial Intelligence (AI) and machine learning (ML), in particular, have emerged as scientific disciplines concerned with understanding and building single and multi-agent systems with the ability ...

sites.google.com

Reposted by Manfred Diaz

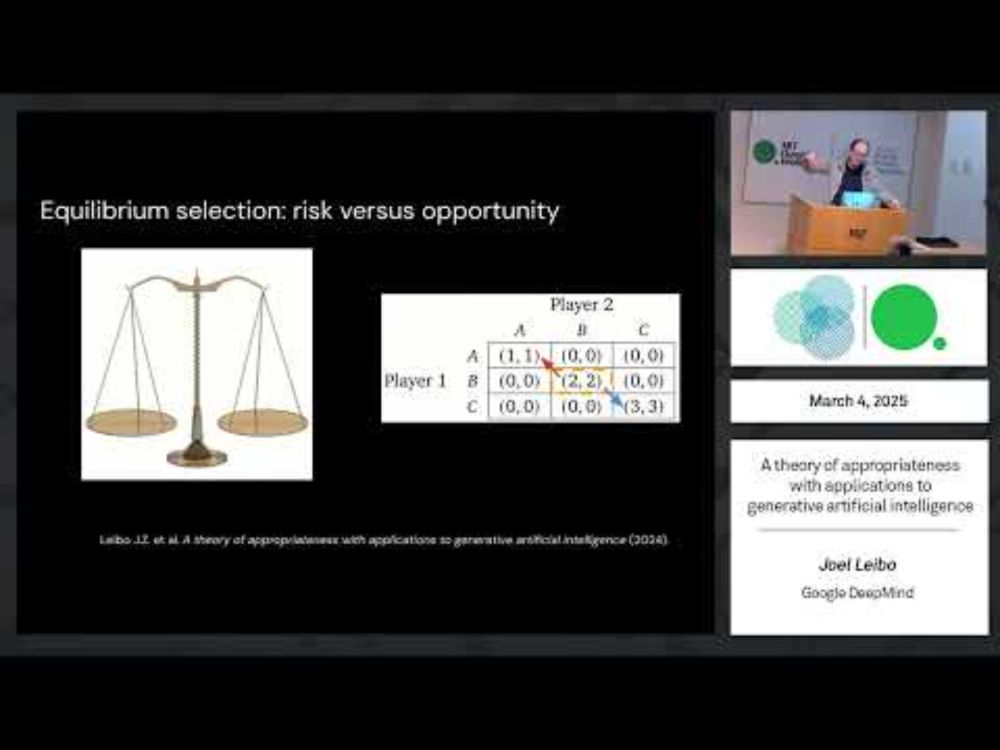

Joel Z Leibo

@jzleibo.bsky.social

· May 9

Societal and technological progress as sewing an ever-growing, ever-changing, patchy, and polychrome quilt

Artificial Intelligence (AI) systems are increasingly placed in positions where their decisions have real consequences, e.g., moderating online spaces, conducting research, and advising on policy. Ens...

arxiv.org

Manfred Diaz

@manfreddiaz.bsky.social

· May 1

Manfred Diaz

@manfreddiaz.bsky.social

· Apr 16

Reposted by Manfred Diaz

Manfred Diaz

@manfreddiaz.bsky.social

· Mar 6

Manfred Diaz

@manfreddiaz.bsky.social

· Mar 4

Marc Lanctot

@sharky6000.bsky.social

· Mar 4

A Tutorial on General Evaluation of AI Agents

Artificial Intelligence (AI) and machine learning (ML), in particular, have emerged as scientific disciplines concerned with understanding and building single and multi-agent systems with the ability ...

sites.google.com

Manfred Diaz

@manfreddiaz.bsky.social

· Mar 4

Manfred Diaz

@manfreddiaz.bsky.social

· Mar 4

Manfred Diaz

@manfreddiaz.bsky.social

· Feb 25

Manfred Diaz

@manfreddiaz.bsky.social

· Feb 25

Manfred Diaz

@manfreddiaz.bsky.social

· Feb 25

Reposted by Manfred Diaz

Marc Lanctot

@sharky6000.bsky.social

· Feb 24

Manfred Diaz

@manfreddiaz.bsky.social

· Feb 20

Manfred Diaz

@manfreddiaz.bsky.social

· Feb 12