previous: postdoc @ umass_nlp

phd from utokyo

https://marzenakrp.github.io/

@microsoft.com (will forever miss this team) and moved to Vancouver, Canada, where I'm starting my lab as an assistant professor at the gorgeous @sfu.ca 🏔️

I'm looking to hire 1-2 students starting in Fall 2026. Details in 🧵

www.pangram.com/history/01bf...

📍4:30–6:30 PM / Room 710 – Poster #8

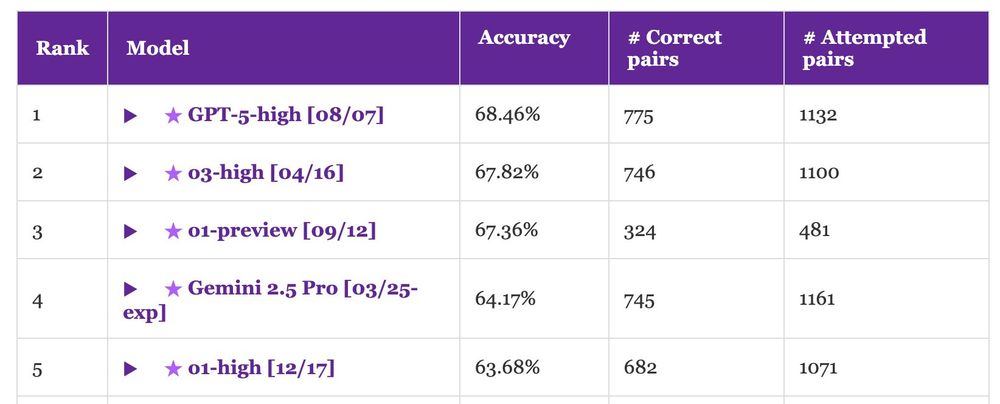

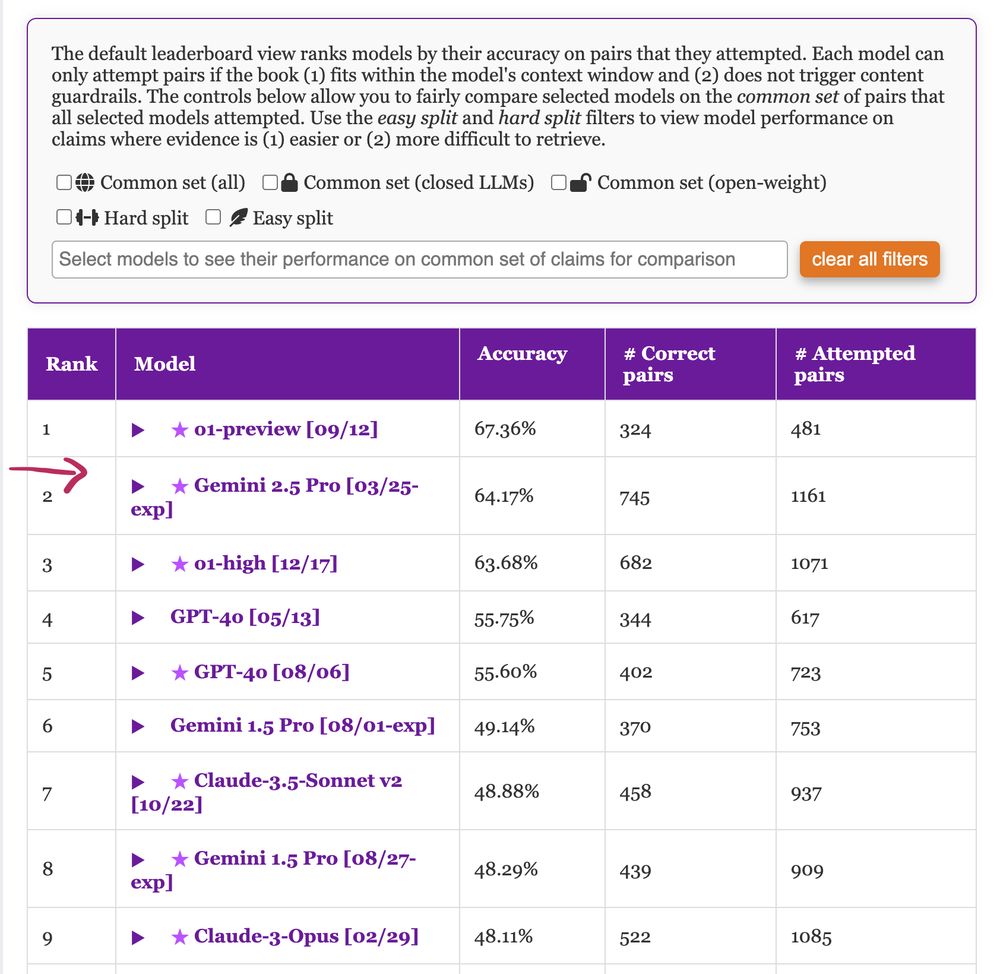

That said, this is a tiny improvement (~1%) over o1-preview, which was released almost one year ago. Have long-context models hit a wall?

Accuracy of human readers is >97%... Long way to go!

- 6k reasoning tokens are often not enough to reach an answer, and allowing more means only short books can be processed

- OpenAI adds something to the prompt (~8k extra tokens) -> less room for book + reasoning + generation!