www.arxiv.org/abs/2506.14045

@marloscmachado.bsky.social

Finding temporal structure is challenging, so we carefully laid out some of the most pressing open questions in the field.

We also identified domains that are particularly promising, e.g. open-ended systems.

We believe hierarchical RL is fundamentally about the algorithm through which we discover temporal structure.

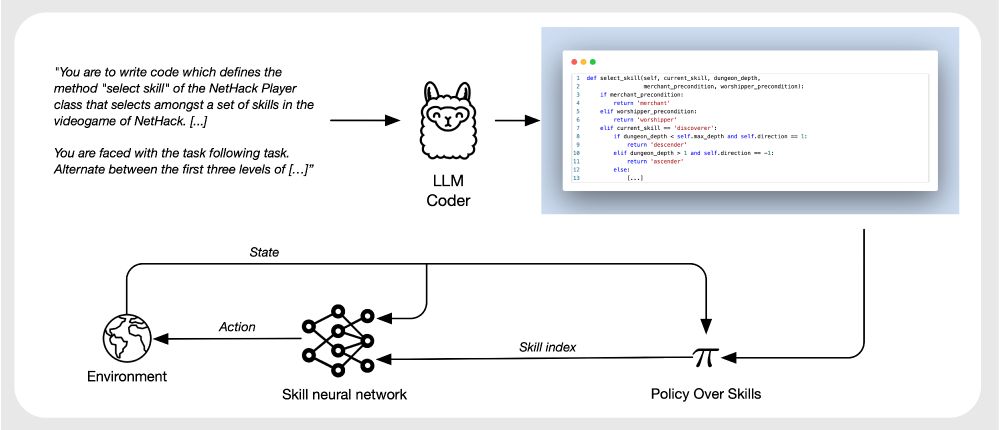

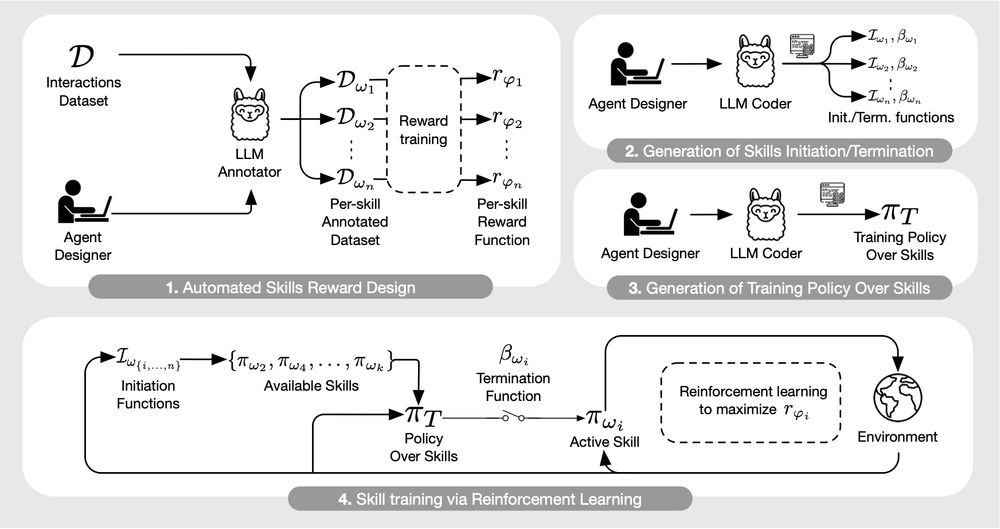

(1) directly from experience, (2) through offline datasets and (3) with foundation models (LLMs).

We present each method through the fundamental challenges of decision making, namely:

(a) exploration, (b) credit assignment, and (c) transferability.

When and in what way should we expect these methods to benefit agents? What are the trade-offs involved?

Computers are built on this same principle.

How will AI agents discover and use such structure? What is "good" structure in the first place?

With the advent of thinking models, it would be interesting to further investigate this.

TL;DR: Hierarchy affords learnability.
