Max Kleiman-Weiner

@maxkw.bsky.social

4.2K followers

370 following

430 posts

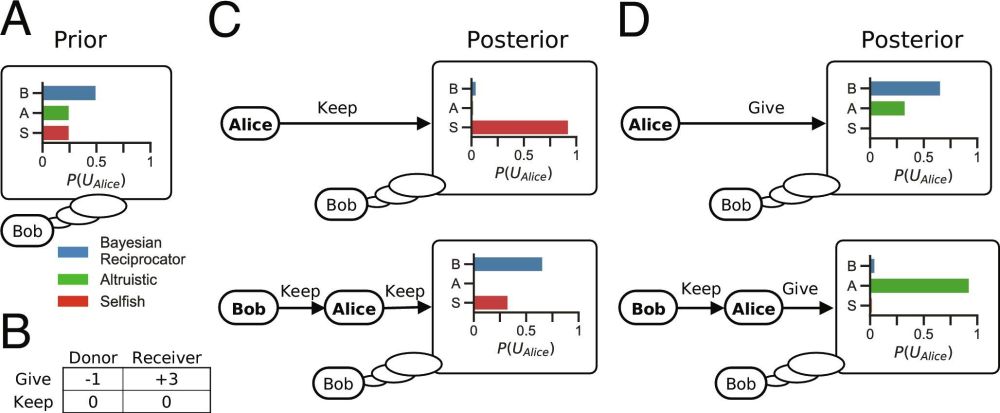

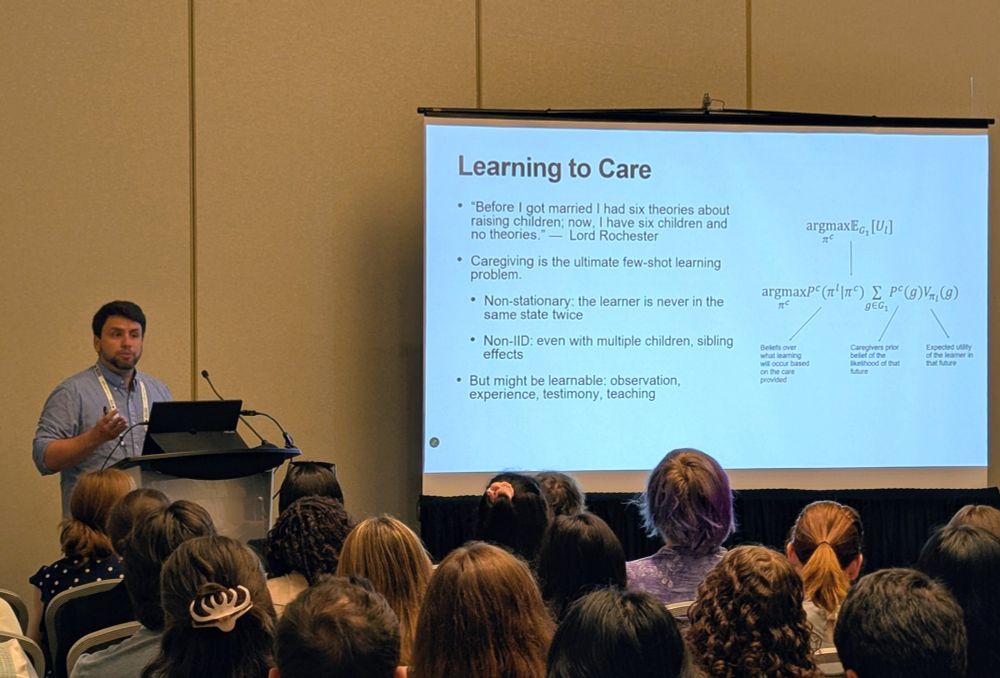

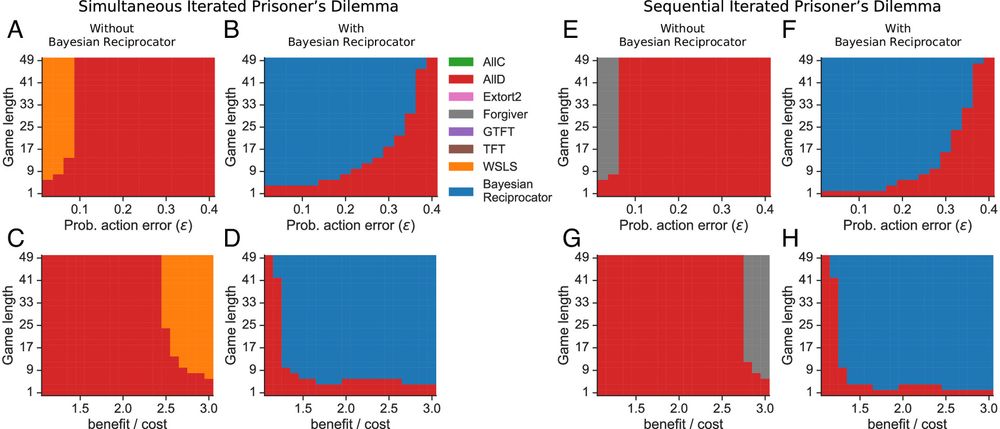

professor at university of washington and founder at csm.ai. computational cognitive scientist. working on social and artificial intelligence and alignment.

http://faculty.washington.edu/maxkw/

Reposted by Max Kleiman-Weiner

Max Kleiman-Weiner

@maxkw.bsky.social

· Jul 31

Reposted by Max Kleiman-Weiner

Max Kleiman-Weiner

@maxkw.bsky.social

· Jul 22

Max Kleiman-Weiner

@maxkw.bsky.social

· Jul 22

Reposted by Max Kleiman-Weiner

Kartik Chandra

@kartikchandra.bsky.social

· Jul 18