Maria Ryskina

@mryskina.bsky.social

74 followers · 120 following · 15 posts

Postdoc @vectorinstitute.ai | organizer @queerinai.com | previously MIT, CMU LTI | 🐀 rodent enthusiast | she/they

🌐 https://ryskina.github.io/


Reposted by Maria Ryskina

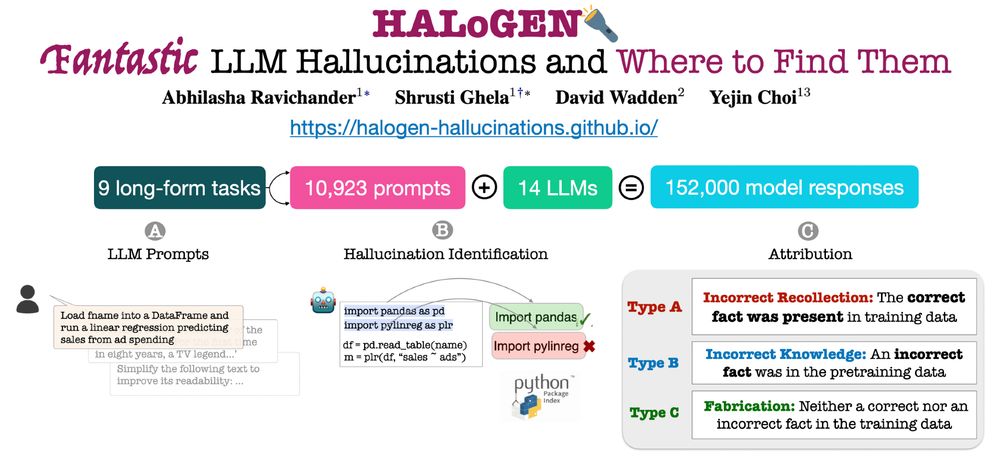

Abhilasha Ravichander (@lasha.bsky.social) · Jul 30
