Nico Bohlinger

@nicobohlinger.bsky.social

35 followers

36 following

21 posts

26 | Morphology-aware Robotics, RL Research | PhD student at @ias-tudarmstadt.bsky.social

Posts

Media

Videos

Starter Packs

Reposted by Nico Bohlinger

Antonin Raffin

@araffin.bsky.social

· Jul 7

Getting SAC to Work on a Massive Parallel Simulator: Tuning for Speed (Part II) | Antonin Raffin | Homepage

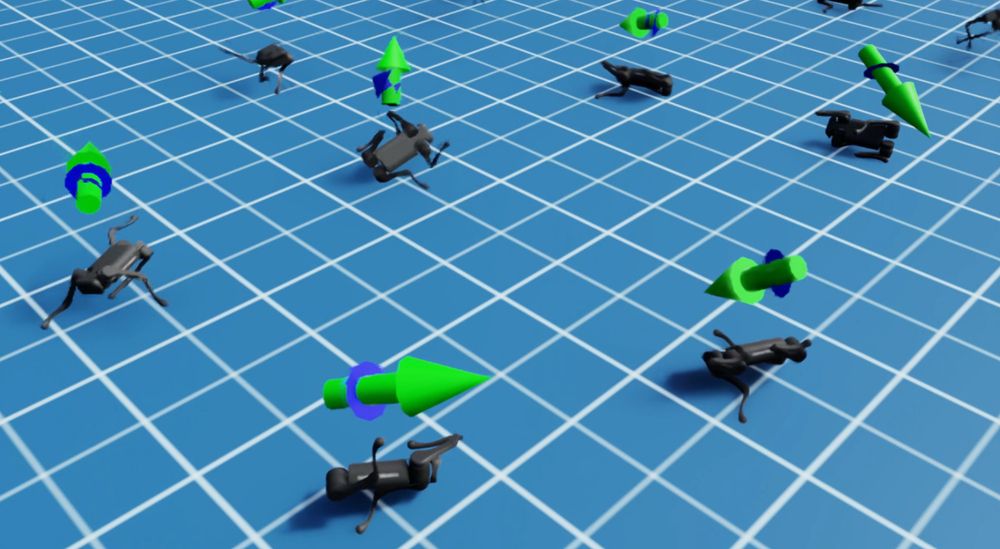

This second post details how I tuned the Soft-Actor Critic (SAC) algorithm to learn as fast as PPO in the context of a massively parallel simulator (thousands of robots simulated in parallel).

araffin.github.io

Nico Bohlinger

@nicobohlinger.bsky.social

· Jun 13

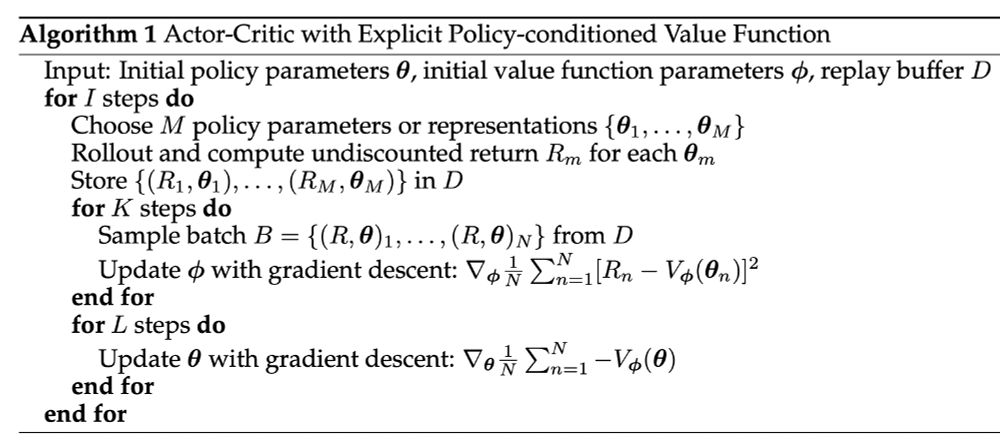

Massively Scaling Explicit Policy-conditioned Value Functions

We introduce a scaling strategy for Explicit Policy-Conditioned Value Functions (EPVFs) that significantly improves performance on challenging continuous-control tasks. EPVFs learn a value function V(...

arxiv.org

Reposted by Nico Bohlinger

Nico Bohlinger

@nicobohlinger.bsky.social

· Mar 18

Nico Bohlinger

@nicobohlinger.bsky.social

· Mar 11

Reposted by Nico Bohlinger

Antonin Raffin

@araffin.bsky.social

· Mar 10

Getting SAC to Work on a Massive Parallel Simulator: An RL Journey With Off-Policy Algorithms (Part I) | Antonin Raffin | Homepage

This post details how I managed to get the Soft-Actor Critic (SAC) and other off-policy reinforcement learning algorithms to work on massively parallel simulators (think Isaac Sim with thousands of ro...

araffin.github.io