Nicolas Papernot

@nicolaspapernot.bsky.social

590 followers

230 following

24 posts

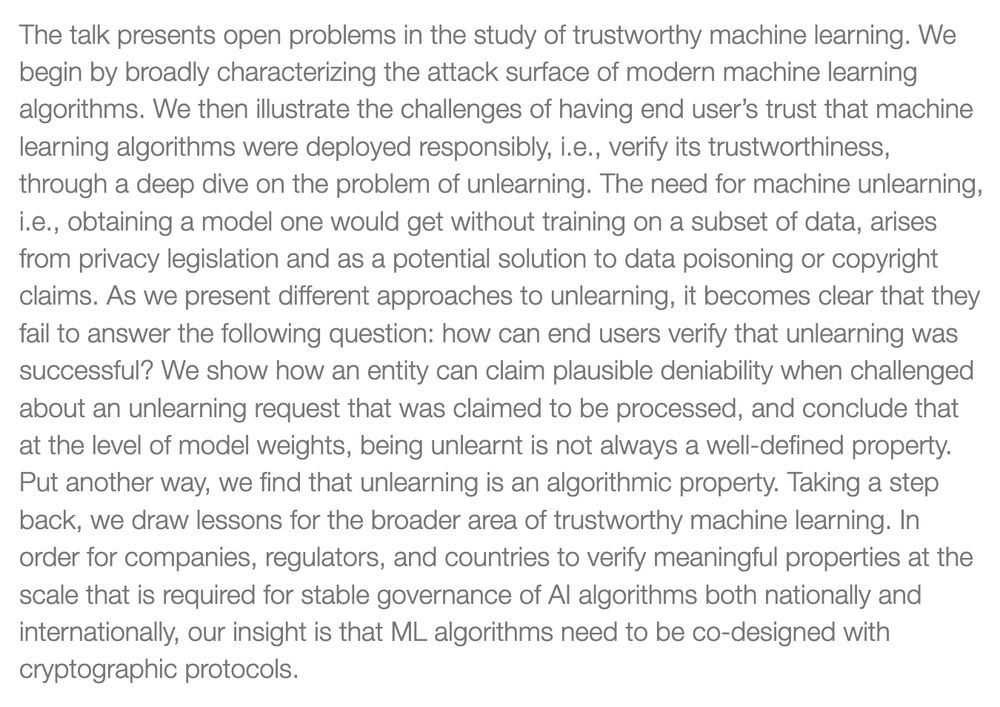

Security and Privacy of Machine Learning at UofT, Vector Institute, and Google 🇨🇦🇫🇷🇪🇺 Co-Director of Canadian AI Safety Institute (CAISI) Research Program at CIFAR. Opinions mine

Reposted by Nicolas Papernot

Asia Biega

@asiabiega.bsky.social

· May 23

Reposted by Nicolas Papernot

Tudor Cebere

@tcebere.bsky.social

· Apr 21

Tighter Privacy Auditing of DP-SGD in the Hidden State Threat Model

Machine learning models can be trained with formal privacy guarantees via differentially private optimizers such as DP-SGD. In this work, we focus on a threat model where the adversary has access...

openreview.net
