introducing ✨rank1✨

rank1 is distilled from R1 & designed for reranking.

rank1 is state-of-the-art at complex reranking tasks in reasoning, instruction-following, and general semantics (often 2x RankLlama 🤯)

🧵

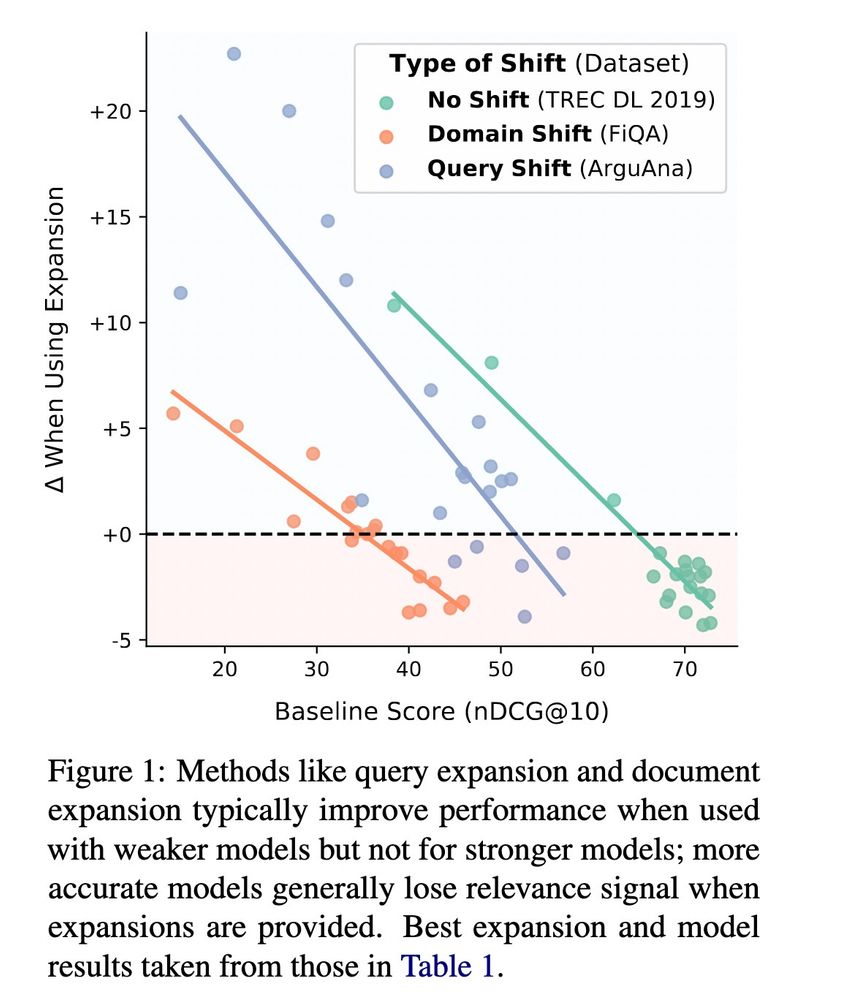

Interestingly, these effects are weakest on long query shift (e.g. paragraph-length or longer queries, à la ArguAna).

It turns out there's a strong and consistent negative correlation between model performance and gains from using expansion. And it holds for all 20+ rankers we tested!

But do these approaches work for all IR models and for different types of distribution shifts? Turns out it's actually more 📉 🚨

📝 (arxiv soon): orionweller.github.io/assets/pdf/L...