We work on Computer Vision, Machine Learning, AI Safety and much more

Learn more about us at: https://torrvision.com

New article by Deni Bechard at Scientific American covering our work on hijacking multimodal computer agents, published on arXiv earlier this year. A massive effort by Lukas Aichberger, supported by myself, Yarin Gal, Philip Torr, FREng, FRS & Adel Bibi

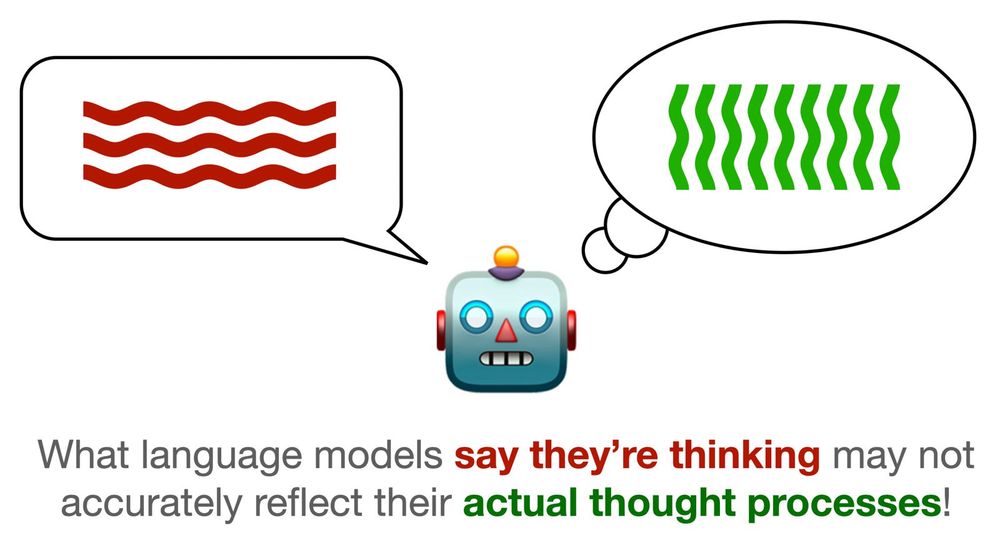

We propose a new framework for LLM safety. 🧵

(1/7)

#LLM #AISafety #ICLR2025 #Certification #AdversarialRobustness #NLP #Shhhhhh #DomainCertification #AI

www.linkedin.com/posts/philip...

Our latest research exposes critical security risks in AI assistants. An attacker can hijack them by simply posting an image on social media and waiting for it to be captured. [1/6] 🧵

🚨 New Paper Alert: Open Problems in Machine Unlearning for AI Safety 🚨

Can AI truly "forget"? While unlearning promises data removal, controlling emergent capabilities is an inherent challenge. Here's why it matters: 👇

Paper: arxiv.org/pdf/2501.04952

1/8

- 💥 Improving on #StylizedImageNet, use #IllusionBench: can you see the cat 🐈‍⬛ Hidden in Plain Sight in the picture 🖼️?

Paper: arxiv.org/abs/2411.06287
