Pete Shaw

@ptshaw.bsky.social

1.6K followers

360 following

9 posts

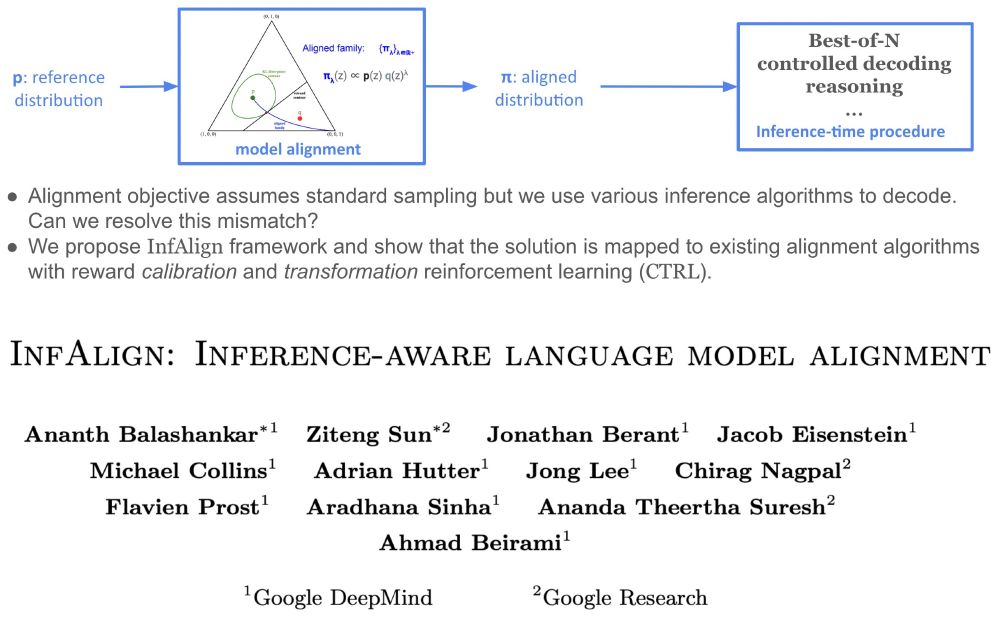

Research Scientist at Google DeepMind. Mostly work on ML, NLP, and BioML. Based in Seattle.

http://ptshaw.com
