CS, economics, policy, and industry experts predict similar futures.

Superforecasters and experts align closely — though where there is disagreement, superforecasters often expect slower progress.

2030 forecasts (experts vs superforecasters):

🚗 20% vs 12% rides autonomous

💻 18% vs 10% work hours AI-assisted

💊 25% vs 15% AI-developed drug sales

Experts see faster change overall.

These explain why experts disagree — on things like AI’s effect on science, and whether it’ll achieve major breakthroughs.

Top quartile: >50% of new 2040 drugs AI-discovered.

Bottom quartile: <10%.

Top quartile: >81% chance AI solves a Millennium Prize Problem by 2040.

Bottom quartile: <30%.

They expect AI ≈ “tech of the century” (like electricity).

32% think it’ll be “tech of the millennium” (like the printing press).

By 2030:

⌚ 18% of work hours AI-assisted

⚡ 7% of US electricity to AI

🧮 75% accuracy on FrontierMath

🚗 20% of ride-hailing = autonomous

By 2040:

👥 30% use AI companionship daily

💊 25% of new drugs AI-discovered

339 top experts across AI, CS, economics, and policy will forecast AI’s trajectory monthly for 3 years.

🧵👇

GPT-4 achieved a difficulty-adjusted Brier score of 0.131.

~2 years later, GPT-4.5 scored 0.101—a substantial improvement.

A linear extrapolation of SOTA LLM forecasting performance suggests LLMs will match superforecasters in November 2026.
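
To make the extrapolation idea concrete, here is a minimal sketch using only the figures quoted in this thread: GPT-4 at 0.131, GPT-4.5 at 0.101 roughly two years later, and superforecasters at 0.081. It draws a straight line through two points, which is a simplification; the function name `years_until_parity` and the exact 2.0-year spacing are assumptions for illustration, not FRI's method.

```python
def years_until_parity(score_old, score_new, years_between, target):
    """Two-point linear extrapolation of a Brier score (lower is better).

    Returns how many years after the newer score the straight line
    through both points reaches the target score.
    """
    slope = (score_new - score_old) / years_between  # score change per year
    if slope >= 0:
        raise ValueError("scores are not improving, so the line never reaches the target")
    return (target - score_new) / slope


# Figures from the thread; 2.0 stands in for the thread's "~2 years" (assumption).
years = years_until_parity(0.131, 0.101, 2.0, 0.081)
print(f"{years:.2f} years after GPT-4.5")
```

With these inputs the line closes the 0.02 gap in about 1.3 years. That will not necessarily match the November 2026 estimate, which comes from FRI's own extrapolation rather than from this two-point shortcut.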

A year ago, when we first released ForecastBench, the median public forecast sat at #2 in our leaderboard—trailing behind only superforecasters.

Today, the median public forecast is beaten by multiple LLMs, putting it at #22 in our new leaderboard.

👇Superforecasters top the ForecastBench leaderboard, with a difficulty-adjusted Brier score of 0.081 (lower Brier scores indicate higher accuracy).

🤖The best-performing LLM in our dataset is @OpenAI’s GPT-4.5 with a score of 0.101—a gap of 0.02.
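
For readers new to the metric, a quick sketch of the plain Brier score: the mean squared difference between each forecast probability and the 0/1 outcome. This is the standard, unadjusted version; the difficulty adjustment used on the leaderboard is not described in this thread and is not reproduced here. The function name `brier_score` and the toy forecasts are illustrative only.

```python
def brier_score(forecasts, outcomes):
    """Plain (unadjusted) Brier score for binary questions; lower is better.

    forecasts: probabilities in [0, 1] that each event happens
    outcomes:  1 if the event happened, 0 if it did not
    """
    if not forecasts or len(forecasts) != len(outcomes):
        raise ValueError("need equally sized, non-empty lists")
    squared_errors = [(p - o) ** 2 for p, o in zip(forecasts, outcomes)]
    return sum(squared_errors) / len(squared_errors)


# Toy data, not ForecastBench questions: the first event happened, the second did not.
confident = brier_score([0.9, 0.2], [1, 0])  # well-placed confident forecasts
hedged = brier_score([0.5, 0.5], [1, 0])     # always answering 50%
print(confident, hedged)
```

Always answering 50% scores 0.25 on this metric, which gives a rough sense of scale for a gap of 0.02 between 0.081 and 0.101.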

Today, FRI is releasing an update to ForecastBench—our benchmark that tracks how accurate large language models (LLMs) are at forecasting real-world events.

Here’s what you need to know: 🧵

These are the 10 most surprising questions for superforecasters, calculated using the standardized absolute forecast errors (SAFE) metric. Full details in the paper.

Ideally, we’d use short-term forecasting accuracy to assess the reliability of judgments about humanity’s long-term future.

In our data, no such relationship emerged.

The performance gap between the most and least accurate XPT participant groups spanned just 0.18 standard deviations, and was not statistically significant.

Superforecasters predicted green H₂ would cost $4.50/kg in 2024. Experts said $3.50/kg.

In reality, it was $7.50/kg.

For direct air capture, superforecasters predicted 0.32 MtCO₂/year and experts said 0.60 MtCO₂/year in 2024.

In reality: 0.01 MtCO₂/year.

AI systems achieved gold-level performance at the IMO in July 2025.

Superforecasters assigned this outcome just a 2.3% probability. Domain experts put it at 8.6%.

Participants predicted the state-of-the-art accuracy of AI on MATH, MMLU, and QuaLITY benchmarks by mid-2025.

Experts gave probabilities of 21.4%, 25% and 43.5% to the achieved outcomes.

Superforecasters gave even lower probabilities: 9.3%, 7.2% and 20.1%.

We now have answers for 38 questions covering AI, climate tech, bioweapons and more.

Here’s what we found out: 🧵

Combining proprietary models + anti-jailbreaking measures + mandatory DNA synthesis screening dropped risk from 1.25% back to 0.4%.

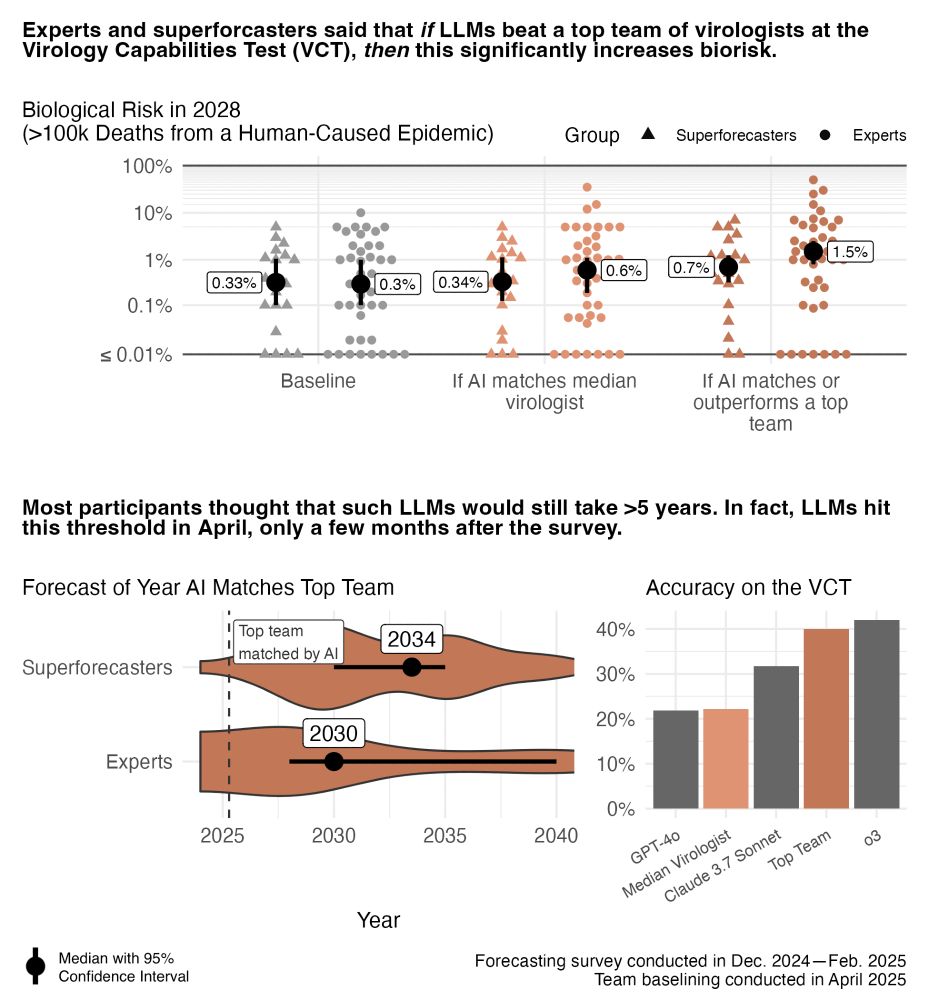

Both groups thought this capability would increase risk of a human-caused epidemic, with experts predicting a higher probability than superforecasters.

However, results from a collaboration with @securebio.org suggest that OpenAI's o3 model already matches top virologist teams on troubleshooting tests.

Key finding: the median expert thinks the baseline risk of a human-caused epidemic (>100k deaths) is 0.3% annually. But this figure rose to 1.5% conditional on certain LLM capabilities.
