ML, music, generative models, robustness, ML & health

curr & prev affils:

Dalhousie University (CS), Vector Institute, Google Research

But what's 🔪? Which point is 🔪er?

Find out

* why it's a tricky Q (hint: #symmetry)

* why our answer does let 🔪 predict generalz'n, even in ✅formers!

@ our #ICML2025 #spotlight E-2001 on Wed 11AM

by MF da Silva and F Dangel

@vectorinstitute.ai

Just a few examples here. Note that the use of 'bold' was ChatGPT's own choice, and I had not mentioned anything about safety nets in my interactions in this session.

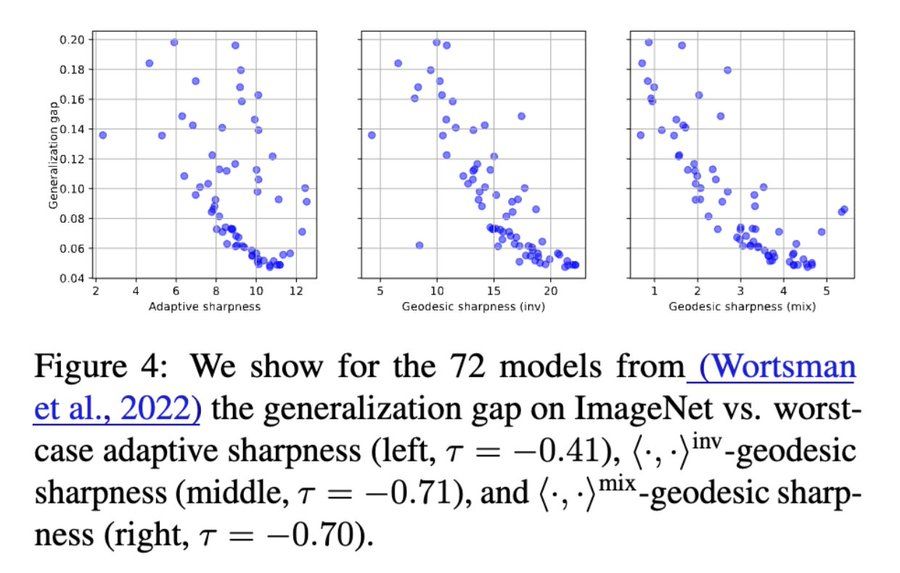

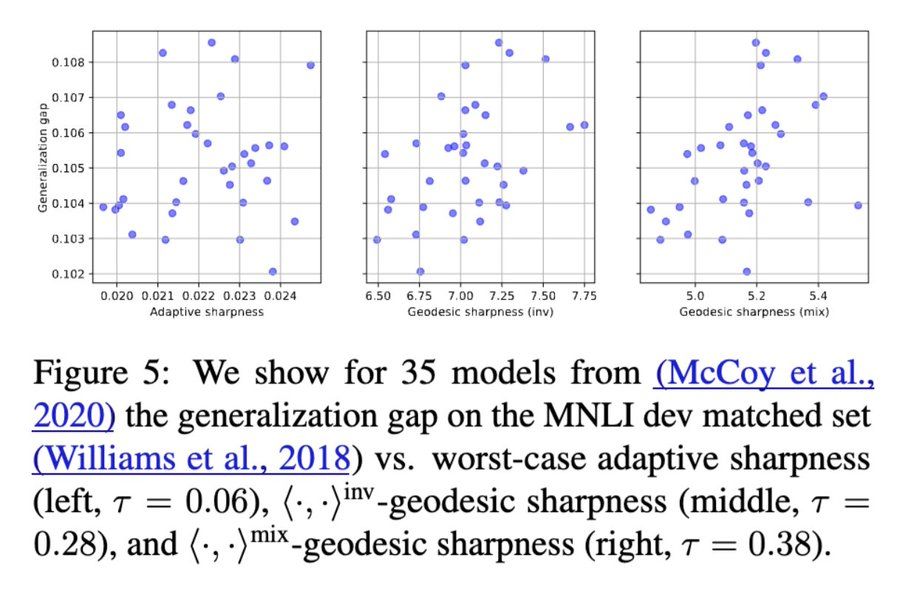

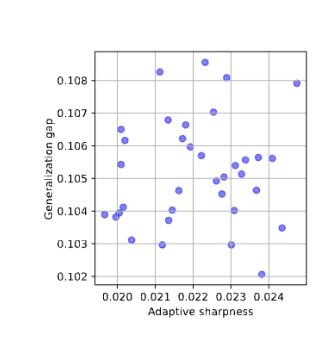

Perturbations are no longer arbitrary—they respect functional equivalences in transformer weights.

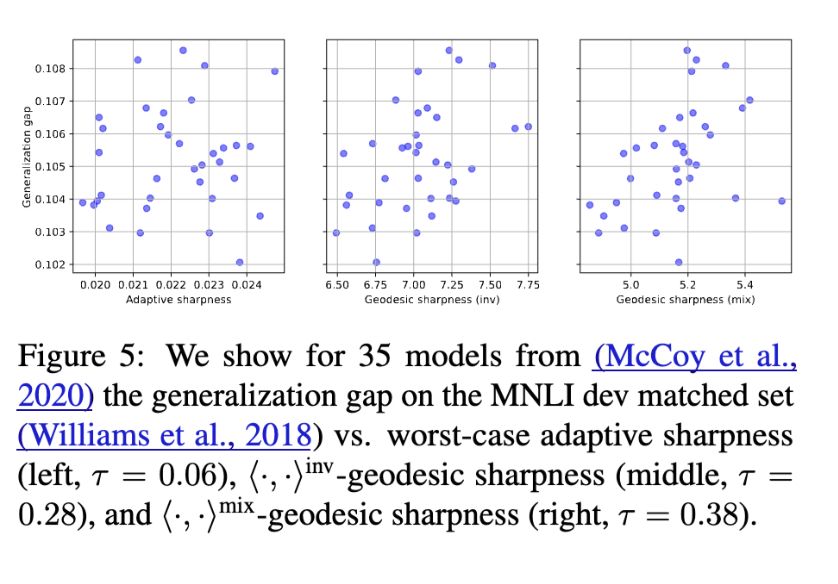

Result: Geodesic sharpness shows clearer, stronger correlations (as measured by the tau correlation coefficient) with generalization. 📈
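For readers who want to see what a tau score measures, here is a minimal sketch. It assumes "tau" means Kendall's rank correlation (the post does not say which variant), and the `sharpness` and `gen_gap` numbers are made up for illustration, not results from the paper.

```python
from itertools import combinations


def kendall_tau(xs, ys):
    """Kendall's tau-a: (concordant - discordant) / total pairs.

    Tied pairs count as neither concordant nor discordant.
    """
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    concordant = discordant = 0
    for (x1, y1), (x2, y2) in combinations(zip(xs, ys), 2):
        sign = (x1 - x2) * (y1 - y2)
        if sign > 0:
            concordant += 1
        elif sign < 0:
            discordant += 1
    n_pairs = len(xs) * (len(xs) - 1) // 2
    return (concordant - discordant) / n_pairs


# Hypothetical values: one sharpness score and one generalization gap per model.
sharpness = [0.8, 1.1, 1.9, 2.4, 3.0]
gen_gap = [0.02, 0.05, 0.04, 0.09, 0.11]

tau = kendall_tau(sharpness, gen_gap)
print(f"Kendall tau = {tau:.2f}")
```

A tau near 1 means models ranked sharper also rank worse on generalization; a tau near 0 means the sharpness ranking carries little information about generalization.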

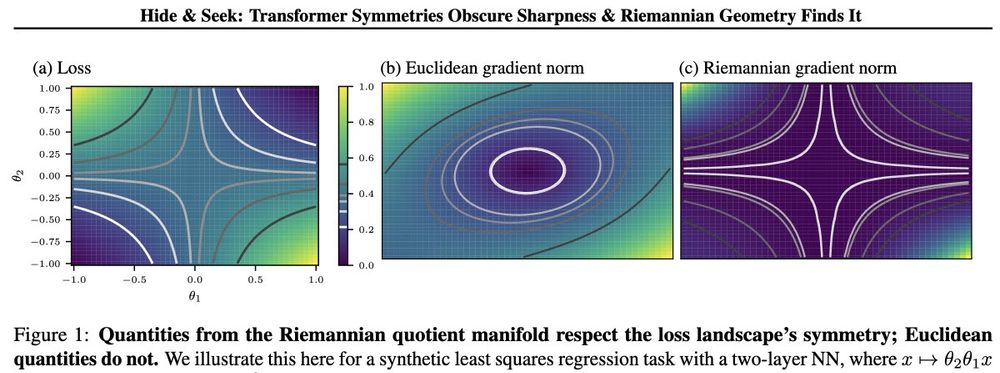

We need to redefine sharpness and measure it not directly in parameter space, but on a symmetry-aware quotient manifold.
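A toy sketch of why parameter-space sharpness is ambiguous under symmetries. This is not the paper's method: it assumes a hypothetical two-parameter model whose output depends only on the product `w1 * w2`, so rescaling `(w1, w2)` to `(a * w1, w2 / a)` leaves the function unchanged. The Hessian trace (one common sharpness proxy) still changes a lot.

```python
def loss(w1, w2):
    # Toy loss: the model only "sees" the product w1 * w2 (target value 1).
    return (w1 * w2 - 1.0) ** 2


def hessian_trace(w1, w2, h=1e-3):
    """Trace of the loss Hessian via central finite differences."""
    base = loss(w1, w2)
    d2_w1 = (loss(w1 + h, w2) - 2.0 * base + loss(w1 - h, w2)) / h**2
    d2_w2 = (loss(w1, w2 + h) - 2.0 * base + loss(w1, w2 - h)) / h**2
    return d2_w1 + d2_w2


# Two parameter settings related by the rescaling symmetry with a = 10.
balanced = (1.0, 1.0)
rescaled = (10.0, 0.1)

for name, (w1, w2) in [("balanced", balanced), ("rescaled", rescaled)]:
    print(name, "loss =", loss(w1, w2), "trace(H) =", hessian_trace(w1, w2))
```

Both points implement the same function and sit at the same minimum, yet the naive sharpness differs by a large factor. Measuring sharpness on a quotient that identifies such points removes this ambiguity.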

Sharpness (flat minima) often predicts how well models generalize. But Andriushchenko et al. (2023) found that transformers consistently break this intuition. Why?

Excited to announce our paper on factoring out param symmetries to better predict generalization in transformers ( #ICML25 spotlight! 🎉)

Amazing work by @marvinfsilva.bsky.social and Felix Dangel.

👇

archive.org/details/lyus...

****Unleash your CREATIVITY, right NOW!!****

No time to build your prompting skills? No problem!

Just insert *any* of the discs into the machine and then press the "PLAY" button. You won't believe the music that will come out!!!!

6/n

Cool bonus: this improves classifier-guided diffusion, and it leads to *perceptually aligned gradients* 👇.

5/n

4/n

@cssastry.bsky.social will present work on this on Thurs 11am-2pm, Poster 1804 #NeurIPS2024 :

1/n
