Stella Frank

@scfrank.bsky.social

85 followers

400 following

19 posts

Thinking about multimodal representations | Postdoc at UCPH/Pioneer Centre for AI (DK).

Posts

Stella Frank

@scfrank.bsky.social

· Jun 13

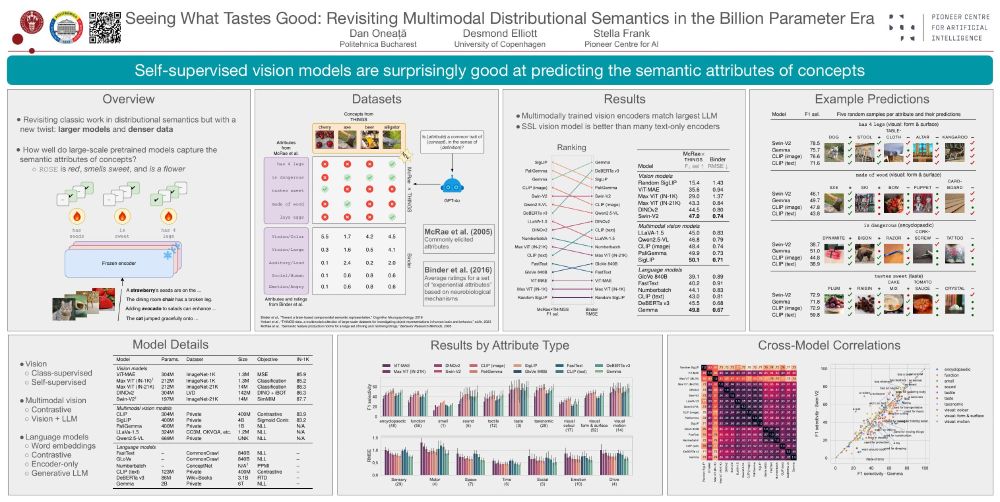

Seeing What Tastes Good: Revisiting Multimodal Distributional Semantics in the Billion Parameter Era

Human learning and conceptual representation is grounded in sensorimotor experience, in contrast to state-of-the-art foundation models. In this paper, we investigate how well such large-scale models, ...

arxiv.org

Lazaros Nalpantidis

@lanalpa.bsky.social

· Apr 11

Postdoc in Robotics and Computer Vision for Life Science Laboratory Automation - DTU Electro

As part of a joint research collaboration between DTU and Novo Nordisk, we are looking for a postdoc to join our multidisciplinary research program focusing on the interplay between AI-Protein design,...

efzu.fa.em2.oraclecloud.com

Stella Frank

@scfrank.bsky.social

· Apr 10

Stella Frank

@scfrank.bsky.social

· Apr 10
