👩🏻‍💻 Working on multi-modal AI reasoning models in scientific domains

https://smamooler.github.io/

@smontariol.bsky.social, @abosselut.bsky.social, @trackingskills.bsky.social

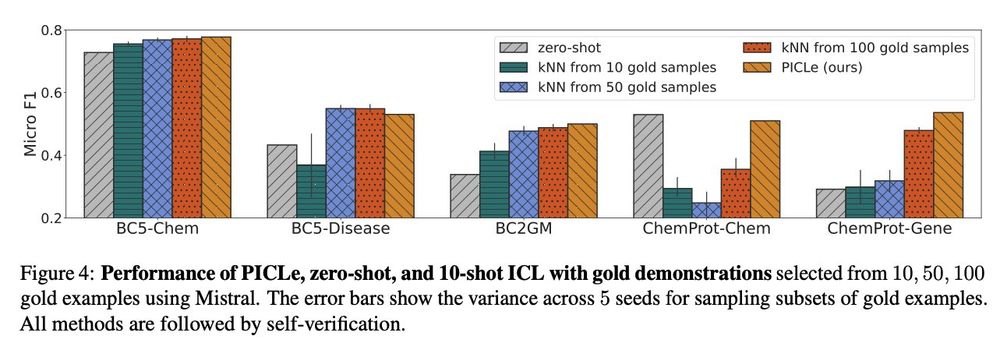

✨ With zero human annotations, PICLe outperforms standard ICL in low-resource settings, where only a limited number of gold examples are available as in-context demonstrations!

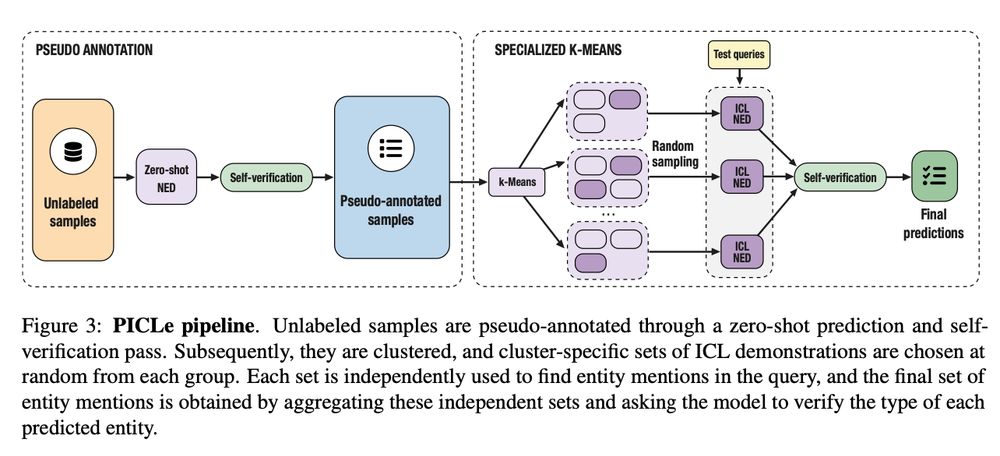

1️⃣ LLMs annotate demonstrations in a zero-shot first pass.

2️⃣ Synthetic demos are clustered, and in-context sets are sampled.

3️⃣ Entity mentions are predicted using each set independently.

4️⃣ Self-verification selects the final predictions.
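
The four steps above can be sketched roughly as follows. This is a minimal illustration and not the PICLe implementation: the function `picle_sketch`, its parameters, and the toy stand-ins (`toy_zero_shot`, `toy_few_shot`, `toy_verify`, `toy_cluster`, `LEXICON`) are all hypothetical. In particular, sampling one in-context set per cluster and pooling the per-set predictions before verification are assumptions made for the sketch; a real setup would call an LLM and a proper clustering method instead.

```python
import random
from collections import defaultdict
from typing import Callable, List, Set, Tuple

Demo = Tuple[str, Set[str]]  # (sentence, predicted entity mentions)


def picle_sketch(
    unlabeled: List[str],
    query: str,
    zero_shot: Callable[[str], Set[str]],
    few_shot: Callable[[List[Demo], str], Set[str]],
    verify: Callable[[str, str], bool],
    cluster_of: Callable[[str], int],
    demos_per_set: int = 2,
    seed: int = 0,
) -> Set[str]:
    rng = random.Random(seed)

    # Step 1: pseudo-annotate unlabeled sentences in a zero-shot pass.
    pseudo: List[Demo] = [(s, zero_shot(s)) for s in unlabeled]

    # Step 2: group the synthetic demos into clusters and sample one
    # in-context set per cluster (an assumption of this sketch).
    clusters = defaultdict(list)
    for demo in pseudo:
        clusters[cluster_of(demo[0])].append(demo)
    demo_sets = [
        rng.sample(members, min(demos_per_set, len(members)))
        for members in clusters.values()
    ]

    # Step 3: predict mentions with each in-context set independently.
    candidates: Set[str] = set()
    for demos in demo_sets:
        candidates |= few_shot(demos, query)

    # Step 4: keep only the candidates that pass self-verification.
    return {m for m in candidates if verify(query, m)}


# Toy stand-ins for the model calls (hypothetical, lexicon-based).
LEXICON = {"aspirin", "ibuprofen", "insulin"}


def _words(sentence: str) -> Set[str]:
    return {w.strip(".,").lower() for w in sentence.split()}


def toy_zero_shot(sentence: str) -> Set[str]:
    return _words(sentence) & LEXICON


def toy_few_shot(demos: List[Demo], sentence: str) -> Set[str]:
    known = set().union(*(mentions for _, mentions in demos))
    return _words(sentence) & known


def toy_verify(sentence: str, mention: str) -> bool:
    return mention in LEXICON


def toy_cluster(sentence: str) -> int:
    return len(sentence.split()) % 2


unlabeled = [
    "Aspirin reduces fever.",
    "Patients received insulin daily.",
    "Ibuprofen and aspirin were compared.",
    "Insulin dosage was adjusted.",
]
result = picle_sketch(
    unlabeled,
    "Aspirin and insulin were given.",
    toy_zero_shot,
    toy_few_shot,
    toy_verify,
    toy_cluster,
    demos_per_set=10,  # large enough to use every demo in each cluster
)
print(sorted(result))
```

Passing the model calls in as plain callables keeps the control flow of the four steps visible without tying the sketch to any particular LLM API.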

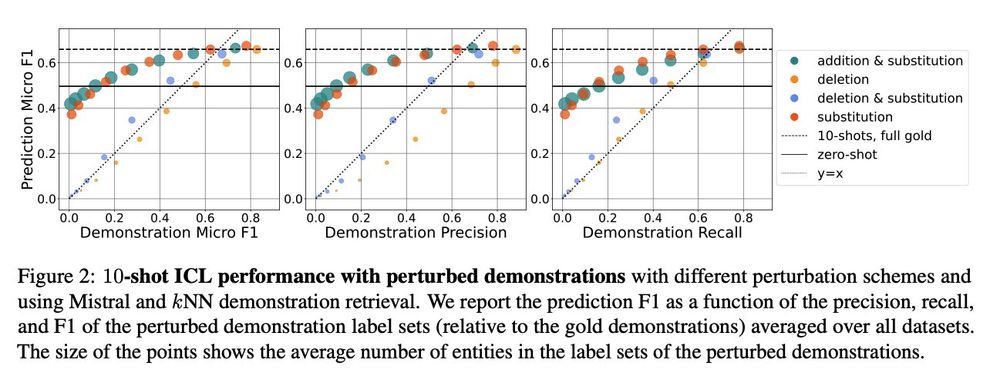

We use perturbation schemes that create demonstrations with varying correctness levels to analyze key demonstration attributes.
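
As a rough illustration of what such a perturbation could look like, the sketch below keeps each gold mention with probability `correctness` and otherwise swaps it for a random non-entity token from the same sentence. The function `perturb_demo` and this specific corruption rule are hypothetical; the post does not specify the actual schemes, so this only shows the general idea of controlling demonstration correctness.

```python
import random
from typing import Set, Tuple

Demo = Tuple[str, Set[str]]  # (sentence, entity mentions)


def perturb_demo(demo: Demo, correctness: float, rng: random.Random) -> Demo:
    """Return a copy of the demo whose labels are only partly correct."""
    sentence, gold = demo
    tokens = [t.strip(".,") for t in sentence.split()]
    # Tokens that are not gold mentions serve as wrong labels.
    distractors = [t for t in tokens if t and t not in gold]

    perturbed: Set[str] = set()
    for mention in sorted(gold):  # sorted for reproducibility
        if rng.random() < correctness or not distractors:
            perturbed.add(mention)  # keep the correct label
        else:
            perturbed.add(rng.choice(distractors))  # inject an error
    return sentence, perturbed


demo = ("Aspirin reduces fever in adults.", {"Aspirin"})
fully_correct = perturb_demo(demo, 1.0, random.Random(0))
fully_wrong = perturb_demo(demo, 0.0, random.Random(0))
print(fully_correct[1], fully_wrong[1])
```

Sweeping `correctness` between 0 and 1 would then give demonstration sets of graded quality to compare against each other.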
