Sonia

@soniajoseph.bsky.social

AI researcher at Mila, visiting researcher at Meta

Also on X: @soniajoseph_

Pinned

Sonia

@soniajoseph.bsky.social

· Oct 11

Multimodal interpretability in 2024

Multimodal interpretability, from sparse feature circuits with SAEs, to vision transformers leveraging CLIP's shared text-image space.

soniajoseph.ai

I wrote a post on multimodal interpretability techniques, including sparse feature circuit discovery, exploiting the shared text-image space of CLIP, and training adapters.

soniajoseph.ai/multimodal-i... 💜

It was a pleasure to be interviewed about world model interpretability, physical intelligence, and robot security by Paige Harriman @climatepaige.bsky.social.

It takes skill to lead an interview that everyone from technical researchers to laymen can enjoy and understand! 🤖

tinyurl.com/ycypkmjf

November 7, 2025 at 12:33 AM

It has been extremely disorienting traveling between the tech right in SF and the academic left in Montreal and has induced a somewhat deep sense of moral relativism.

May 23, 2025 at 2:49 AM

Half the people who say you don’t need to go to grad school to do AI research have been to grad school

May 4, 2025 at 2:52 AM

We visualized thousands of CLIP SAE features in collaboration between @fraunhoferhhi.bsky.social and @mila-quebec.bsky.social!

Search thousands of interpretable CLIP features in our vision atlas, with autointerp labels and scores like clarity and polysemanticity.

Link: tinyurl.com/3ffy8xk6

April 28, 2025 at 2:45 PM

I’m really excited about Diffusion Steering Lens, an intuitive and elegant new “logit lens” technique for decoding the attention and MLP blocks of vision transformers!

Vision is much more expressive than language, so some new mech interp rules apply:

🔍Logit Lens tracks what transformer LMs “believe” at each layer. How can we effectively adapt this approach to Vision Transformers?

Happy to share our “Decoding Vision Transformers: the Diffusion Steering Lens” was accepted at the CVPR 2025 Workshop on Mechanistic Interpretability for Vision!

(1/7)

April 25, 2025 at 1:36 PM

A genuine question for the death by 2027 people:

China authors 30% of top papers, is winning in video zero shot retrieval with InternVideo2, & has crazy centralization of data and infra.

The CCP is not stupid. What does it mean, if they have no plans for doom within 2 yrs?

April 23, 2025 at 8:30 PM

AI research is famously autistic— but autism in women can look different: intense daydreaming, an obsession with celebrities, or a fixation with female-coded activities like makeup/beauty. It’s socially masked and not visible to the untrained eye.

April 23, 2025 at 5:49 PM

Looking forward to being a speaker at the first mechanistic interpretability workshop for vision at CVPR! 🔥

sites.google.com/view/miv-cvp...

Paper submission deadline March 1st

February 6, 2025 at 6:51 PM

Leaving a digital trail, just in case, given there is speculation of foul play currently in the Valley.

I am happy and healthy and intend to live a long and beautiful life. If anything were to happen to me, that would be highly suspicious and should be investigated.

December 15, 2024 at 9:29 PM

Reposted by Sonia

I’ve been giving some context on AI development environments here, particularly how co-living and tribal bonds among researchers in Silicon Valley influence ethical decisions, whistleblowing, and the trajectory of AGI development.

x.com/soniajoseph_...

x.com

December 6, 2024 at 4:36 PM

I’ll be tweeting out something important this weekend that has been happening in the shadows of the AI industry.

December 6, 2024 at 4:07 PM

Reposted by Sonia

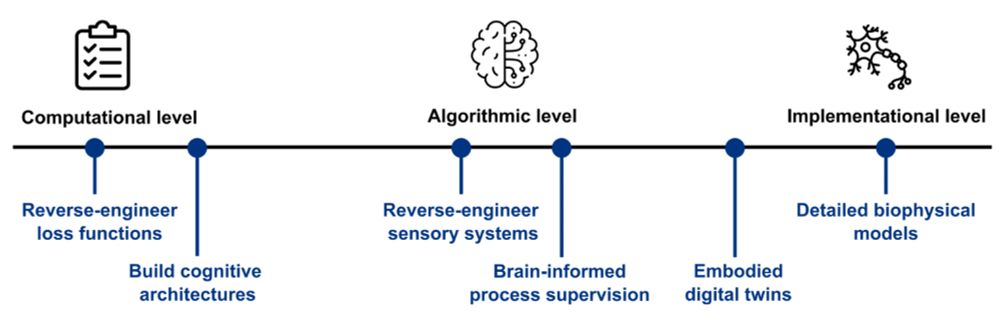

Excited to release what we’ve been working on at Amaranth Foundation, our latest whitepaper, NeuroAI for AI safety! A detailed, ambitious roadmap for how neuroscience research can help build safer AI systems while accelerating both virtual neuroscience and neurotech. 1/N

December 2, 2024 at 4:17 PM

Reposted by Sonia

How to drive your research forward?

“I tested the idea we discussed last time. Here are some results. It does not work. (… awkward silence)”

Such conversations happen so many times when meeting with students. How do we move forward?

You need …

December 1, 2024 at 10:09 PM

I returned to Boston for Thanksgiving, my birthday, and my grandmother’s funeral. She passed down to me a necklace from Kerala, and a vast collection of British and Indo-Anglian literature.

Death, while tragic, can be re-energizing in reminding you who you are.

December 1, 2024 at 6:07 PM

Reposted by Sonia

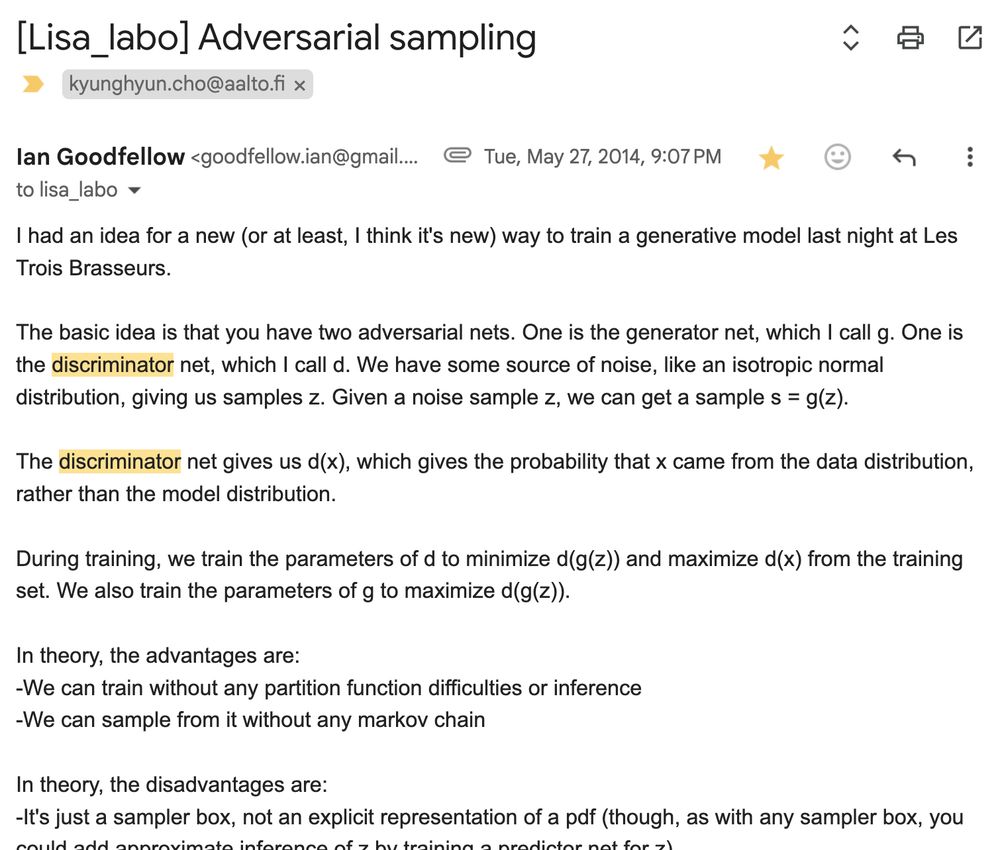

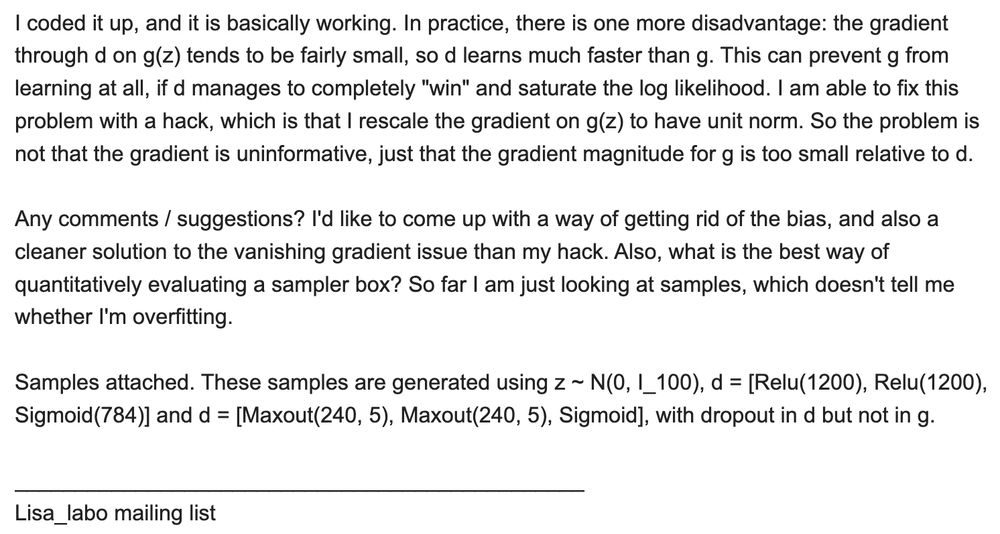

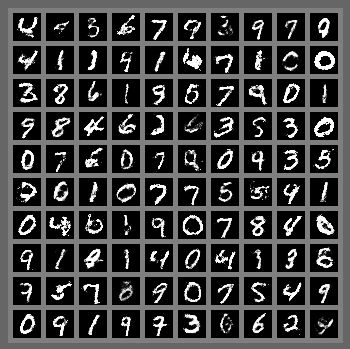

congratulations, @ian-goodfellow.bsky.social, for the test-of-time award at @neuripsconf.bsky.social!

this award reminds me of how GAN started with this one email ian sent to the Mila (then Lisa) lab mailing list in May 2014. super insightful and amazing execution!

November 27, 2024 at 6:31 PM

Where are my Bluesky followers coming from? How are you finding me?

November 25, 2024 at 10:34 PM

Reposted by Sonia

I wrote a post on multimodal interpretability techniques, including sparse feature circuit discovery, exploiting the shared text-image space of CLIP, and training adapters.

soniajoseph.ai/multimodal-i... 💜

Multimodal interpretability in 2024

Multimodal interpretability, from sparse feature circuits with SAEs, to vision transformers leveraging CLIP's shared text-image space.

soniajoseph.ai

October 11, 2024 at 2:20 PM

It took me years to build a following and community on X. I’m very curious what will happen on Bluesky.

November 19, 2024 at 6:12 AM

Reposted by Sonia

It's wild how much of an influence Canada has had on neuroscience, psychology, and machine learning. Wilder Penfield, Donald Hebb, Brenda Milner, Geoffrey Hinton, Yoshua Bengio...

Is there a book or article about this?

November 18, 2024 at 5:04 PM

So many new ppl on this app let’s go!!

November 10, 2024 at 9:24 PM

Reposted by Sonia

Bluesky is getting fuller with life and academics 🔥

November 8, 2024 at 5:47 AM

Reposted by Sonia

@soniajoseph.bsky.social has been doing fascinating work on mechanistic interpretability in multi-modal models. It's like neuroscience for AI!

If this kind of stuff interests you I strongly recommend reading this blog post.

#MLSky #AI #NeuroAI 🧪

I wrote a post on multimodal interpretability techniques, including sparse feature circuit discovery, exploiting the shared text-image space of CLIP, and training adapters.

soniajoseph.ai/multimodal-i... 💜

Multimodal interpretability in 2024

Multimodal interpretability, from sparse feature circuits with SAEs, to vision transformers leveraging CLIP's shared text-image space.

soniajoseph.ai

October 29, 2024 at 6:33 PM
