Academic: language grounding, vision+language, interp, rigorous & creative evals, cogsci

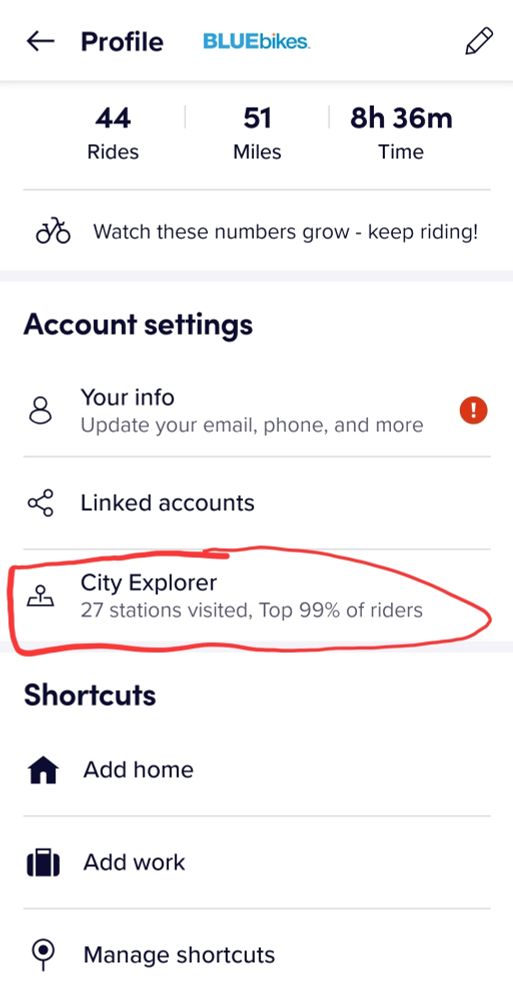

Other: many sports, urban explorations, puzzles/quizzes

bennokrojer.com

Sharing my notes and thoughts here 🧵

As LLMs become increasingly used as sources of factual knowledge, we ask:

Do they perform equitably across users of different backgrounds?

🧵⬇️

1/6

What hopeful times those were, as I'm now at the chapters (2006-2008) describing their 2008 run for president

i wonder if the appearance on tv is meant to represent the sin of pride

For my current paper it made it so much easier to quickly grasp different interp tools and their effects throughout the whole project

"Better late than never: Getting into interpretability in 2025"

It's been a great year pivoting into interp and i wanted to reflect on it

bennokrojer.com/interp.html

#NLProc #AI

Excited to chat about anything vision+language, interpretability, cogsci/psych, embedding spaces, visual reasoning, video/world models

It covered lots from crowdworker rights, the ideologies (doomers, EA, ...) and the silicon valley startup world to the many big egos and company-internal battles

Great work by @karenhao.bsky.social

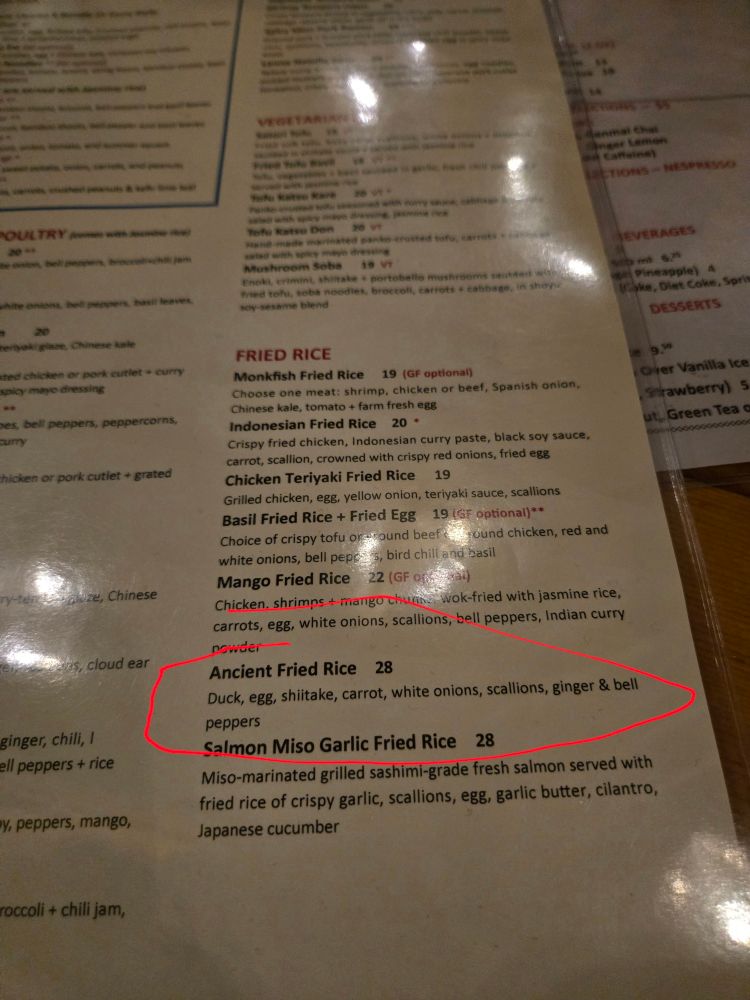

love the detailed montreal spots mentioned

consider including such a section in your next appendix!

(paper by @a-krishnan.bsky.social arxiv.org/pdf/2504.050...)

In linguistics, the "apparent time hypothesis" famously discusses this but never empirically tests it

w/ Michelle Yang, @sivareddyg.bsky.social, @msonderegger.bsky.social and @dallascard.bsky.social 👇 (1/12)

It's truly been an honour sharing the PhD journey with you. I wasn’t ready for the void your sudden departure left (in the office and in my life!).

Your new colleagues are lucky to have you! 🥺🥰

Behind the Research of AI:

We look behind the scenes, beyond the polished papers 🧐🧪

If this sounds fun, check out our first "official" episode with the awesome Gauthier Gidel from @mila-quebec.bsky.social:

open.spotify.com/episode/7oTc...

Finally the video from Mila's speed science competition is on YouTube!

From a soup of raw pixels to abstract meaning

t.co/RDpu1kR7jM

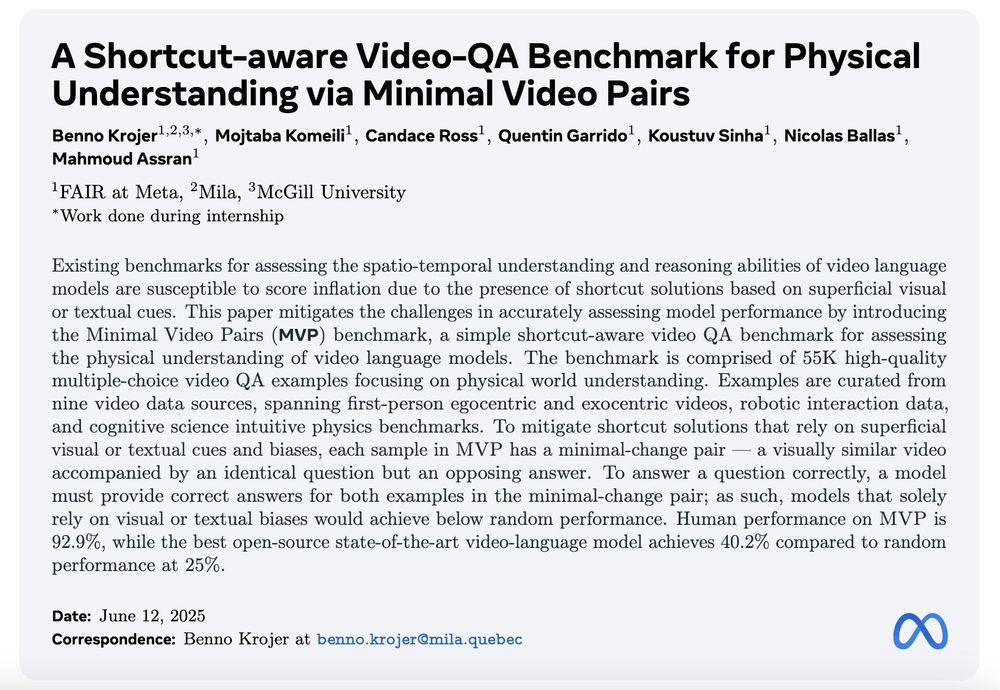

We ask 🤔

What subtle shortcuts are VideoLLMs taking on spatio-temporal questions?

And how can we instead curate shortcut-robust examples at a large scale?

We release: MVPBench

Details 👇🔬

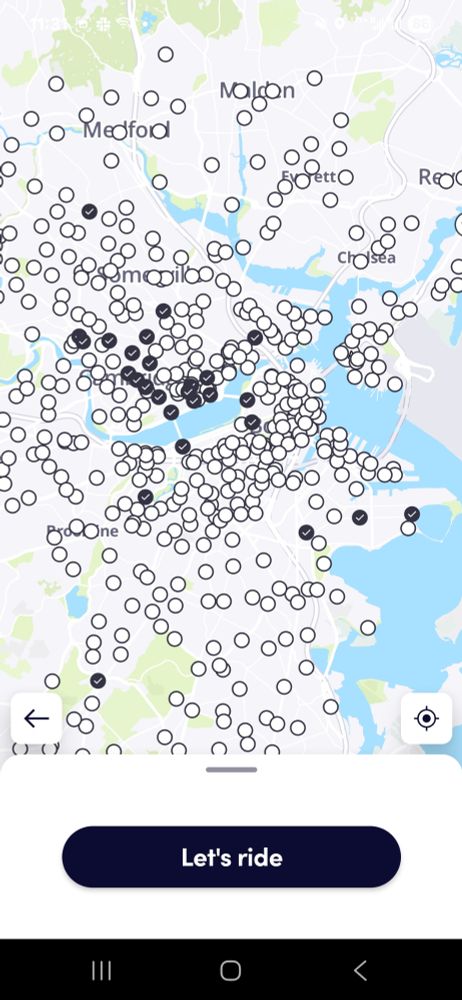

Love these interactive maps

And yes I've tried customizing my feeds and whatnot but no feed can fix a lack of posts

Also sycophancy is annoying af
