Academic stuff: language grounding, vision+language, interp, rigorous & creative evals, cogsci

Other: many sports, urban explorations, puzzles/quizzes

bennokrojer.com

bennokrojer.com/interp.html

Test your assumptions; don't assume the field has already settled the question

But this was not the full story yet...

@mariusmosbach.bsky.social and @elinorpd.bsky.social nudged me to use contextual embeddings

We just assumed visual tokens going into an LLM would not be that interpretable (based on the literature and our intuition)

But for many weeks, we never actually tested it!

Pivoting to a new direction, wondering what kind of interp work would be meaningful, getting feedback from my lab, ...

I think this paper in particular has a lot going on behind the scenes; from lessons learned to personal reflections

let me share some

How can LatentLens outperform EmbeddingLens even at layer 0?

Our hypothesis: Visual tokens arrive already packaged in a semantic format

Concretely: An input visual token might have the highest similarity with text representations at e.g. LLM layer 8

We call this "Mid-Layer Leap"
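
A minimal sketch of how one might locate such a leap for a single visual token. It assumes cosine similarity and a precomputed pool of text hidden states for each LLM layer. The function names (`cosine`, `best_matching_layer`) and the 2-d vectors are hypothetical toy values, not LatentLens code or real activations.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def best_matching_layer(visual_token, text_reps_by_layer):
    """Return (layer, score) for the LLM layer whose text representations
    best match the visual token (max cosine similarity over that layer's pool)."""
    best_per_layer = {
        layer: max(cosine(visual_token, rep) for rep in reps)
        for layer, reps in text_reps_by_layer.items()
    }
    layer = max(best_per_layer, key=best_per_layer.get)
    return layer, best_per_layer[layer]


# Toy 2-d vectors, made up for illustration (not real model activations).
visual_token = [0.0, 1.0]  # the visual token as it enters the LLM
text_reps_by_layer = {
    0: [[1.0, 0.0], [0.8, 0.2]],
    8: [[0.1, 1.0]],
    16: [[0.5, 0.5]],
}
layer, score = best_matching_layer(visual_token, text_reps_by_layer)
print(layer, round(score, 3))  # prints: 8 0.995
```

Taking the max over each layer's pool (rather than the mean) asks whether *any* text representation at that layer is a close match, which fits a nearest-neighbor view.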

The two baselines are a mixed bag: some models and layers look okay, but many others do not

LatentLens shows high interpretability across the board

We can see:

a) LatentLens makes visual tokens interpretable at all layers

b) it doesn't suffer from the single-token limitation of logit lens and instead provides detailed full sentences!

(no training or tuning involved)
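
For contrast, here is a minimal sketch of why logit lens yields single tokens. It projects a hidden state onto the output vocabulary and keeps only the top-scoring entry. This is a simplification (real logit lens usually applies the model's final normalization first), and `logit_lens_top_token`, the vocabulary, and the vectors are hypothetical toy values.

```python
def logit_lens_top_token(hidden, unembedding, vocab):
    """Simplified logit lens: score each vocabulary entry by the dot product of
    the hidden state with its unembedding row and return the single best token.
    (Skips the final norm that real implementations typically apply.)"""
    logits = [sum(h * w for h, w in zip(hidden, row)) for row in unembedding]
    best = max(range(len(vocab)), key=lambda i: logits[i])
    return vocab[best]


vocab = ["dog", "sky", "grass"]
unembedding = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # one toy row per vocab entry
hidden = [0.9, 0.2]  # toy hidden state of a visual token at some layer
print(logit_lens_top_token(hidden, unembedding, vocab))  # prints: dog
```

Whatever the layer, the readout is one vocabulary item with no surrounding context.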

Instead of an LLM's fixed vocabulary (e.g. the embedding matrix), we propose contextual representations as our pool of nearest neighbors

→ e.g. the representation of “dog” in “my brown dog” at some layer N
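
A minimal sketch of the nearest-neighbor idea. It assumes cosine similarity and a pool built ahead of time by running text through the LLM and storing each token's hidden state together with its sentence and layer. `PoolEntry`, `nearest_neighbors`, and the toy vectors are hypothetical, and the actual LatentLens pool construction and similarity choice may differ. The point is that every neighbor carries its context, so a match reads as "dog" in "my brown dog" rather than a bare vocabulary entry.

```python
import math
from dataclasses import dataclass


@dataclass
class PoolEntry:
    token: str            # word whose representation is stored, e.g. "dog"
    context: str          # sentence it appeared in, e.g. "my brown dog"
    layer: int            # LLM layer the hidden state was taken from
    vector: list[float]   # hidden state of `token` in `context` at `layer`


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def nearest_neighbors(query, pool, k=3):
    """Return the k pool entries most cosine-similar to `query`."""
    return sorted(pool, key=lambda e: cosine(query, e.vector), reverse=True)[:k]


# Toy pool and visual token, made up for illustration.
pool = [
    PoolEntry("dog", "my brown dog", 8, [0.9, 0.1, 0.0]),
    PoolEntry("dog", "the dog barked", 8, [0.7, 0.0, 0.3]),
    PoolEntry("sky", "a clear blue sky", 8, [0.0, 0.2, 0.9]),
]
visual_token = [1.0, 0.1, 0.0]
for entry in nearest_neighbors(visual_token, pool, k=2):
    print(f'"{entry.token}" in "{entry.context}" (layer {entry.layer})')
```

An EmbeddingLens-style baseline would instead fill the pool with one embedding-matrix row per vocabulary token, with no sentence or layer attached.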

Are visual tokens going into an LLM interpretable 🤔

Existing methods (e.g. logit lens) and assumptions would lead you to think “not much”...

We propose LatentLens and show that most visual tokens are interpretable across *all* layers 💡

Details 🧵

i wonder if the appearance on tv is meant to represent the sin of pride

It covered a lot, from crowdworker rights, the ideologies (doomers, EA, ...) and the Silicon Valley startup world to the many big egos and company-internal battles

Great work by @karenhao.bsky.social

love the detailed Montreal spots mentioned

consider including such a section in your next appendix!

(paper by @a-krishnan.bsky.social arxiv.org/pdf/2504.050...)

Finally the video from Mila's speed science competition is on YouTube!

From a soup of raw pixels to abstract meaning

t.co/RDpu1kR7jM

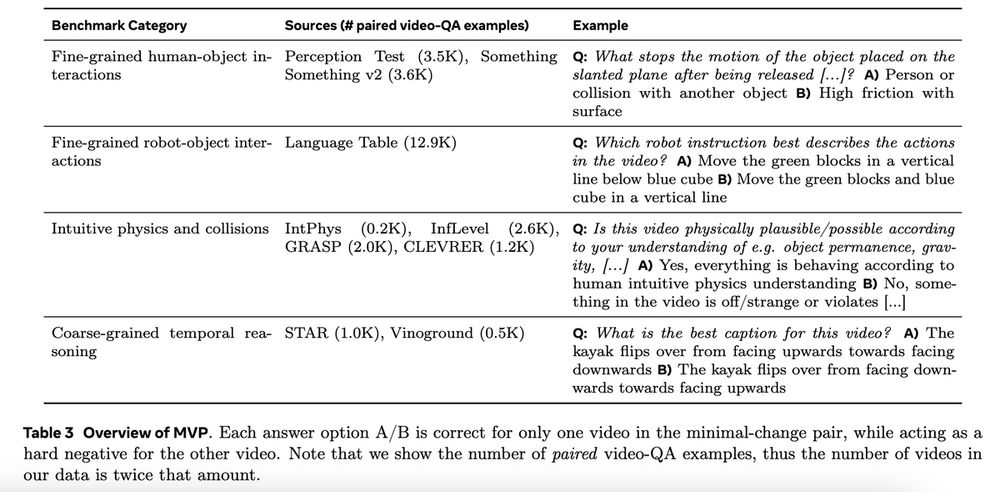

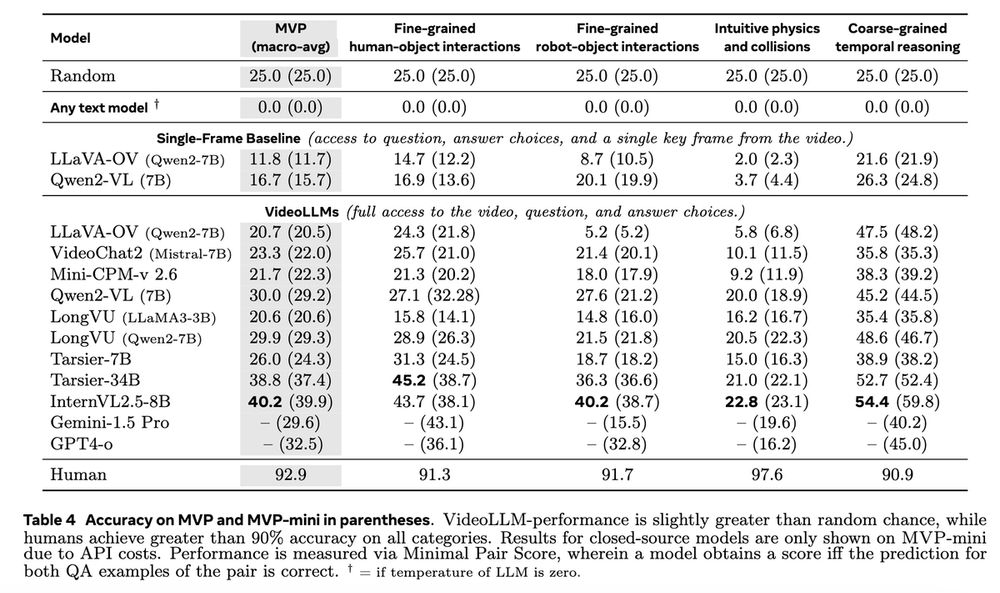

There's a lot of talk about math reasoning these days, but this project made me appreciate the simple reasoning we humans take for granted, which arises in our first months and years of life

As usual, I also included "Behind The Scenes" in the Appendix:

(Mido Assran Nicolas Ballas @koustuvsinha.com @candaceross.bsky.social @quentin-garrido.bsky.social Mojtaba Komeili)

The Montreal office in general is a very fun place 👇

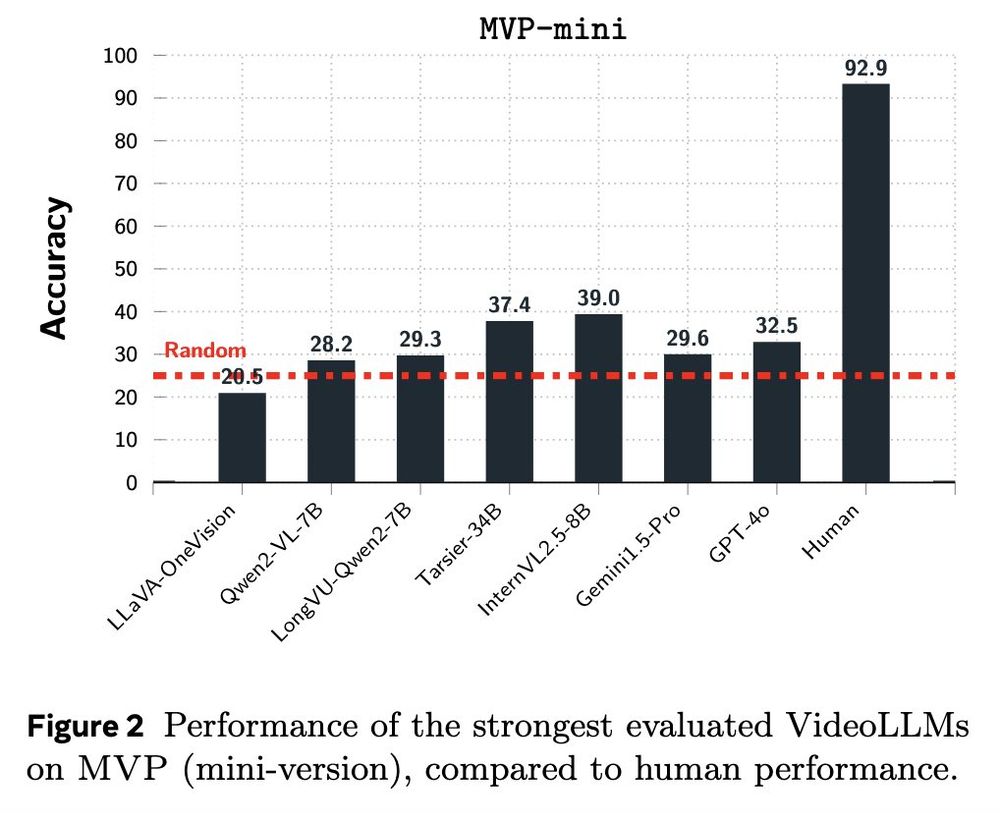

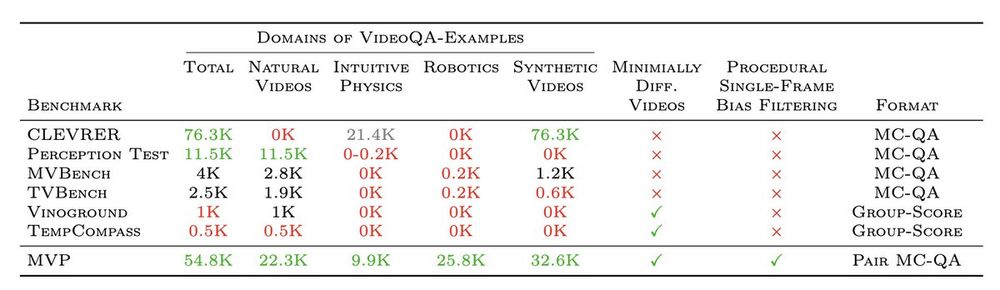

We encourage the community to use MVPBench to check if the latest VideoLLMs possess a *real* understanding of the physical world!
