Sophia Sirko-Galouchenko 🇺🇦

@ssirko.bsky.social

200 followers

320 following

7 posts

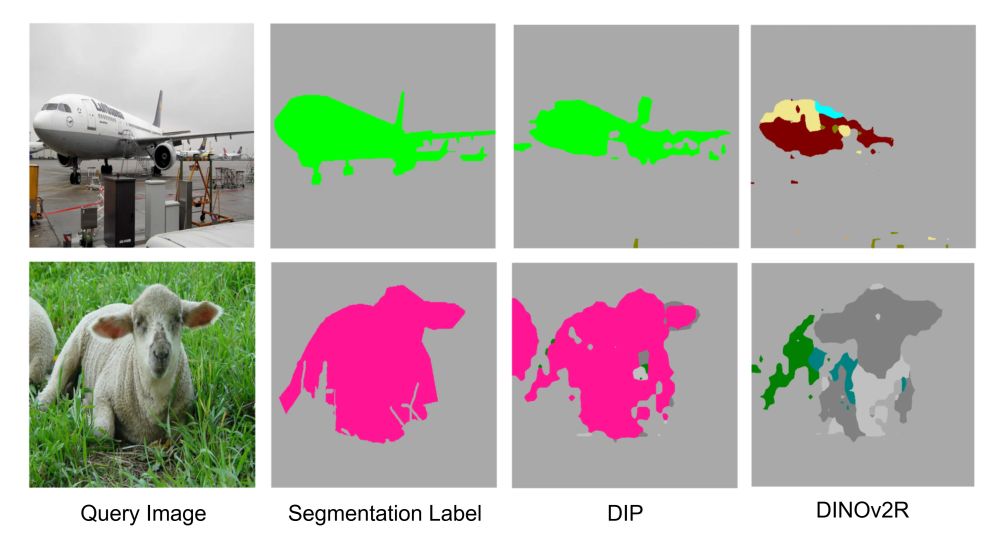

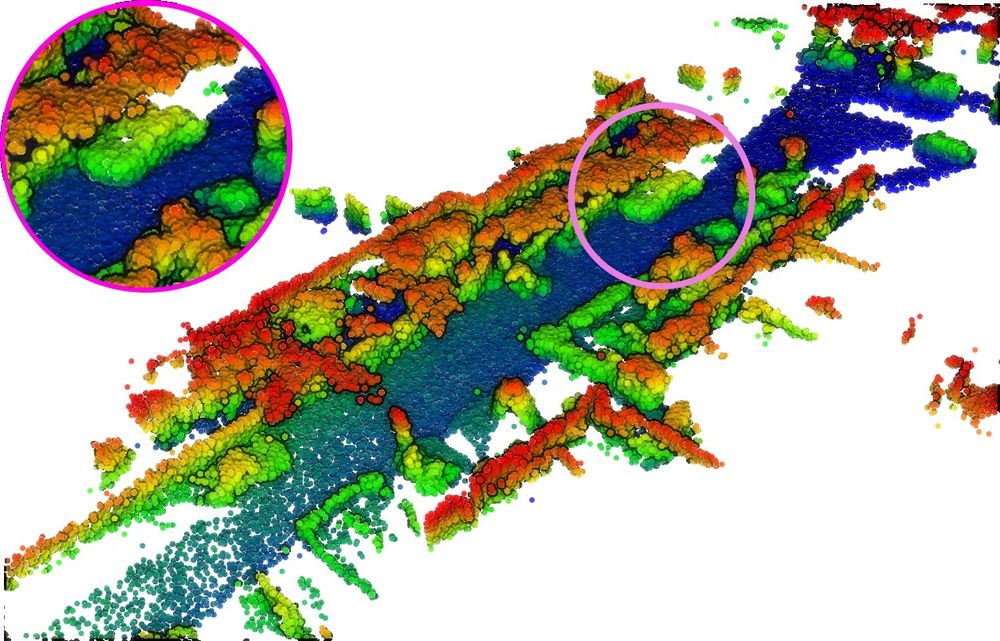

PhD student in visual representation learning at Valeo.ai and Sorbonne Université (MLIA)

Reposted by Sophia Sirko-Galouchenko 🇺🇦

Noa Garcia

@noagarciad.bsky.social

· Nov 22