arxiv.org/abs/2401.17173

i’ll have to dig into iRoPE

Best information right now appears to be this blog post: https://ai.meta.com/blog/llama-4-multimodal-intelligence/

“… Llama 4 Scout and Llama 4 Maverick. These models are optimised for

- multimodal understanding,
- multilingual tasks,
- coding,
- tool-calling, and
- powering agentic systems.”

www.llama.com/docs/model-c...

Surveys extending RAG beyond text to computer vision, examining how external knowledge retrieval enhances visual understanding and generation tasks.

📝 arxiv.org/abs/2503.18016

Accenture explicitly incorporates logical reasoning into retrieval, extracting logical structures from natural language queries and combining similarity scores to improve performance.

📝 arxiv.org/abs/2503.17860
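One way to picture "combining similarity scores" over a logical query is sketched below. This is not the paper's method: it assumes the query has already been parsed into a small AND/OR/NOT tree of clauses, scores each clause by cosine similarity, and combines clause scores with fuzzy-logic operators (min for AND, max for OR, 1 - s for NOT). The tree classes, the fuzzy operators and the toy 3-d embeddings are all illustrative assumptions.

```python
import math
from dataclasses import dataclass
from typing import Union

# Hypothetical logical query tree. Each leaf is an embedded clause; the
# parsing step that builds this tree from natural language is not shown.
@dataclass
class Clause:
    vec: list[float]

@dataclass
class And:
    left: "Node"
    right: "Node"

@dataclass
class Or:
    left: "Node"
    right: "Node"

@dataclass
class Not:
    child: "Node"

Node = Union[Clause, And, Or, Not]

def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def score(node: Node, doc: list[float]) -> float:
    """Combine clause similarities with fuzzy-logic operators (an assumption)."""
    if isinstance(node, Clause):
        # Clamp to [0, 1] so that NOT (1 - s) stays in range.
        return max(0.0, cosine(node.vec, doc))
    if isinstance(node, And):
        return min(score(node.left, doc), score(node.right, doc))
    if isinstance(node, Or):
        return max(score(node.left, doc), score(node.right, doc))
    if isinstance(node, Not):
        return 1.0 - score(node.child, doc)
    raise TypeError(f"unknown node: {node!r}")

# Toy 3-d embeddings: axis 0 ~ "cats", axis 1 ~ "dogs".
cats = Clause([1.0, 0.0, 0.0])
dogs = Clause([0.0, 1.0, 0.0])
query = And(cats, Not(dogs))  # "documents about cats but not dogs"
docs = {
    "cat_doc": [0.9, 0.1, 0.0],
    "dog_doc": [0.1, 0.9, 0.0],
    "both": [0.7, 0.7, 0.0],
}
ranked = sorted(docs, key=lambda d: score(query, docs[d]), reverse=True)
print(ranked)  # ['cat_doc', 'both', 'dog_doc']
```

A plain similarity search would rank "both" close to "cat_doc"; the NOT clause pushes it down because it also matches "dogs".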

Surveys long context LLMs covering data strategies, architecture designs, workflow approaches, infrastructure, evaluation, and applications with analysis of context window capabilities.

📝 arxiv.org/abs/2503.17407

https://rocm.docs.amd.com/projects/ai-developer-hub/en/

Improves GraphRAG by filtering irrelevant information and integrating LLMs' intrinsic reasoning with external graph knowledge to reduce hallucinations.

📝 arxiv.org/abs/2503.13804
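A minimal sketch of the filtering idea, assuming the retrieved subgraph is a list of (head, relation, tail) triples: each triple gets a relevance score against the question, and triples below a threshold are dropped before anything reaches the prompt. The token-overlap (Jaccard) scorer, the 0.25 threshold and the example triples are hypothetical stand-ins, not the paper's filter.

```python
import re
from typing import NamedTuple

class Triple(NamedTuple):
    head: str
    relation: str
    tail: str

def tokens(text: str) -> set[str]:
    # Lowercase alphanumeric tokens; underscores act as separators.
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def relevance(question: str, t: Triple) -> float:
    # Jaccard overlap between question and triple tokens: a toy stand-in
    # for whatever relevance model a real GraphRAG filter would use.
    q = tokens(question)
    tt = tokens(f"{t.head} {t.relation} {t.tail}")
    union = q | tt
    return len(q & tt) / len(union) if union else 0.0

def filter_triples(question: str, triples: list[Triple],
                   threshold: float = 0.25, k: int = 3) -> list[Triple]:
    """Drop triples scoring below `threshold`, keep the top-k of the rest."""
    scored = [(relevance(question, t), t) for t in triples]
    kept = [(s, t) for s, t in scored if s >= threshold]
    kept.sort(key=lambda st: st[0], reverse=True)
    return [t for _, t in kept[:k]]

triples = [
    Triple("Marie_Curie", "place_of_birth", "Warsaw"),
    Triple("Marie_Curie", "field", "physics"),
    Triple("Warsaw", "country", "Poland"),
    Triple("Pierre_Curie", "spouse", "Marie_Curie"),
]
question = "What is the place of birth of Marie Curie?"
filtered = filter_triples(question, triples)
print(filtered)
```

Only the place-of-birth triple survives here; the other three share too few tokens with the question to clear the threshold.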

Enhances RAG systems with entity-specific query handling, multimodal outputs, and proactive security measures.

📝 arxiv.org/abs/2503.13563

Reviews LLM ensemble techniques across weight merging, knowledge fusion, mixture of experts, and more.

📝 arxiv.org/abs/2503.13505
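Weight merging in its simplest form is a (weighted) average of the parameters of models that share an architecture. The sketch below assumes each model is a plain dict mapping parameter names to flattened lists of floats; real merges operate on framework tensors and usually assume the models were fine-tuned from a common base.

```python
from typing import Optional

Params = dict[str, list[float]]  # hypothetical: parameter name -> flattened values

def merge_weights(models: list[Params], coeffs: Optional[list[float]] = None) -> Params:
    """Weighted average of parameters from models with identical architecture."""
    if not models:
        raise ValueError("need at least one model")
    if coeffs is None:
        coeffs = [1.0 / len(models)] * len(models)  # uniform average
    if len(coeffs) != len(models):
        raise ValueError("one coefficient per model")
    total = sum(coeffs)
    if total == 0:
        raise ValueError("coefficients must not sum to zero")
    names = models[0].keys()
    for m in models[1:]:
        if m.keys() != names:
            raise ValueError("models must share parameter names")
    merged: Params = {}
    for name in names:
        tensors = [m[name] for m in models]
        if any(len(t) != len(tensors[0]) for t in tensors):
            raise ValueError(f"shape mismatch for {name!r}")
        # Normalise by the coefficient sum so weights need not add up to 1.
        merged[name] = [
            sum(c * t[i] for c, t in zip(coeffs, tensors)) / total
            for i in range(len(tensors[0]))
        ]
    return merged

a = {"w": [1.0, 2.0], "b": [0.0]}
b = {"w": [3.0, 4.0], "b": [1.0]}
print(merge_weights([a, b]))              # {'w': [2.0, 3.0], 'b': [0.5]}
print(merge_weights([a, b], [3.0, 1.0]))  # {'w': [1.5, 2.5], 'b': [0.25]}
```

Normalising by the coefficient sum lets the weights be given as any ratio, e.g. `[3.0, 1.0]` for a 3:1 blend.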

Surveys RAG from a knowledge-centric perspective, examining fundamental components, advanced techniques, evaluation methods, and real-world applications.

📝 arxiv.org/abs/2503.10677

👨🏽💻 github.com/USTCAGI/Awes...
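The basic retrieve-then-generate loop that RAG surveys build on can be sketched in a few lines. Assumptions: a bag-of-words cosine similarity stands in for a learned embedding model, the corpus is three made-up sentences, and the final LLM call is left out, so the sketch stops at the augmented prompt.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    # Toy bag-of-words "embedding"; a real pipeline would use a dense encoder.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a if w in b)
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0

def retrieve(query: str, corpus: list[str], k: int = 2) -> list[str]:
    # Retrieval step: rank every passage by similarity to the query.
    q = embed(query)
    return sorted(corpus, key=lambda doc: cosine(q, embed(doc)), reverse=True)[:k]

def build_prompt(query: str, passages: list[str]) -> str:
    # Augmentation step: number the retrieved passages and prepend them.
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return f"Answer using the context below.\n{context}\nQuestion: {query}"

corpus = [
    "RAG retrieves external documents before generation.",
    "Mixture of experts routes tokens to expert subnetworks.",
    "Dense retrievers embed queries and documents into vectors.",
]
query = "How does RAG retrieve documents?"
top = retrieve(query, corpus, k=2)
prompt = build_prompt(query, top)
print(prompt)  # this string would then be sent to the generator model
```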

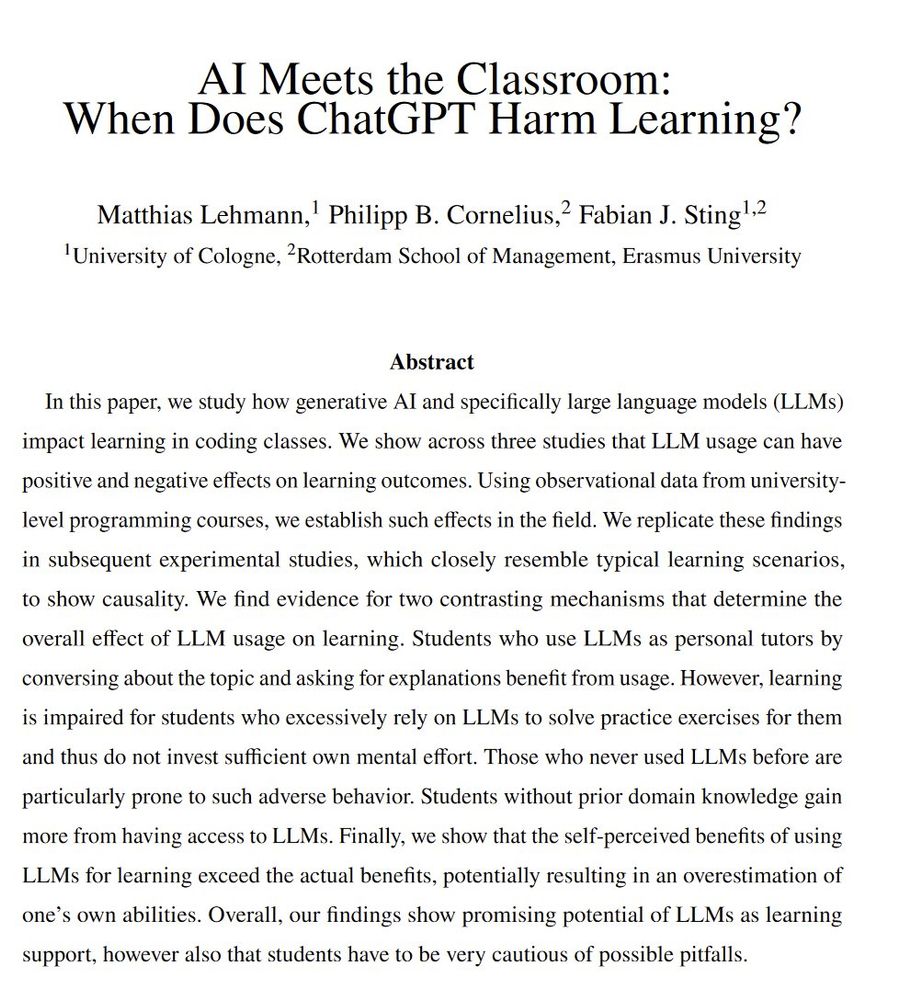

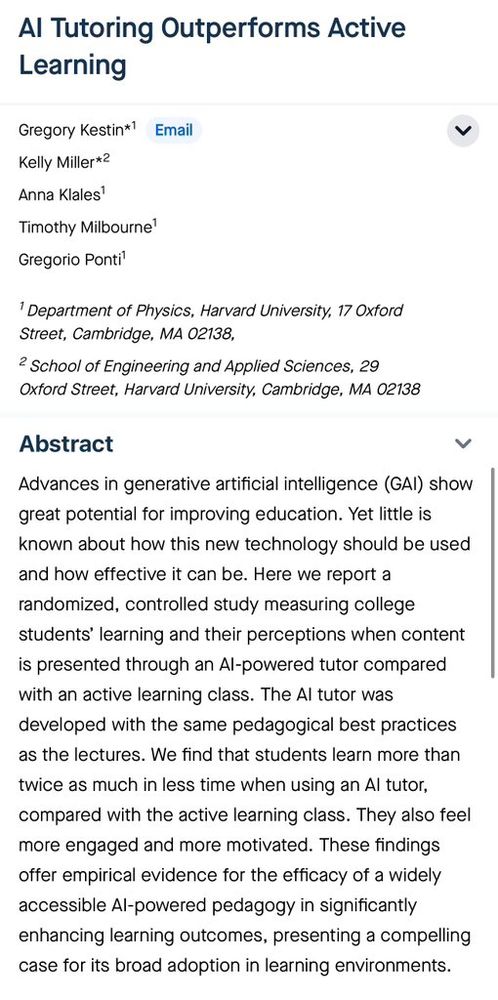

But AIs properly prompted to act as tutors, especially with instructor support, seem able to boost learning substantially through customized instruction.

In this paper, we show that we can, thanks to Large Language Models! Why LLMs? They can identify useful optimization structure and have a lot of built-in math programming knowledge!

LLMs for Cold-Start Cutting Plane Separator Configuration

🔗: arxiv.org/abs/2412.12038

If you have any suggestions or requests, please reach out!

ccanonne.github.io/teaching/COM... #TCSSky

So sometimes it's 20 cents for saving you 20 minutes of work.

Other times it's $1 for wasting 10 minutes.

“… decomposition, retrieval, and reasoning with self-verification. By integrating these components, CogGRAG enhances the accuracy of LLMs in complex problem solving.”

Introduces a graph-based RAG framework that mimics human cognitive processes through question decomposition and self-verification to enhance complex reasoning in KGQA tasks.

📝 arxiv.org/abs/2503.06567
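The three stages named in the quote can be wired together as a toy pipeline. This is a structural sketch, not CogGRAG's implementation: decomposition is stubbed by a hand-written relation path, retrieval returns an entity's one-hop neighbourhood from a tiny in-memory graph, and "self-verification" is reduced to checking each proposed hop against the graph. All names (`decompose`, `graph_reasoner`, `verify`, `GRAPH`) are hypothetical.

```python
from typing import Callable, Optional

Triple = tuple[str, str, str]

# Toy knowledge graph (hypothetical data for illustration).
GRAPH: set[Triple] = {
    ("Marie Curie", "spouse", "Pierre Curie"),
    ("Pierre Curie", "place_of_birth", "Paris"),
    ("Paris", "country", "France"),
}

def decompose(relation_path: str) -> list[str]:
    # Stand-in for LLM-based decomposition: the multi-hop question is
    # supplied as a relation path such as "spouse/place_of_birth".
    return relation_path.split("/")

def retrieve(entity: str, graph: set[Triple]) -> list[Triple]:
    # Retrieve the one-hop neighbourhood of the current entity.
    return [t for t in graph if t[0] == entity]

def graph_reasoner(entity: str, relation: str, facts: list[Triple]) -> Optional[str]:
    # Default "reasoning" step: answer from the retrieved facts.
    for _, rel, tail in facts:
        if rel == relation:
            return tail
    return None

def verify(entity: str, relation: str, candidate: Optional[str], graph: set[Triple]) -> bool:
    # Self-verification stand-in: accept a hop only if the graph supports it.
    return candidate is not None and (entity, relation, candidate) in graph

Reasoner = Callable[[str, str, list[Triple]], Optional[str]]

def answer(start: str, relation_path: str, graph: set[Triple] = GRAPH,
           reasoner: Reasoner = graph_reasoner):
    entity, trace = start, []
    for relation in decompose(relation_path):
        facts = retrieve(entity, graph)
        candidate = reasoner(entity, relation, facts)
        if not verify(entity, relation, candidate, graph):
            return None, trace  # abstain instead of propagating an unsupported hop
        trace.append((entity, relation, candidate))
        entity = candidate
    return entity, trace

result, trace = answer("Marie Curie", "spouse/place_of_birth/country")
print(result)  # France

def hallucinating_reasoner(entity: str, relation: str, facts: list[Triple]) -> Optional[str]:
    return "Warsaw"  # ignores the retrieved facts entirely

bad, bad_trace = answer("Marie Curie", "spouse", reasoner=hallucinating_reasoner)
print(bad, bad_trace)  # None []
```

Swapping in a reasoner that ignores the retrieved facts shows the point of the verification step: the unsupported hop is rejected and the pipeline abstains instead of answering.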
