Director of Data Science @RadicleScience

Prev: 🔬 UW BioE PhD |🤖computational research | 🧠 neurotech

"Over four months, LLM users [...] underperformed at neural, linguistic, and behavioral levels."

arxiv.org/abs/2506.08872

A guy, non-researcher, submitted a joke paper to arXiv with Claude as the main author

it contained real legit problems with the Apple paper (one of the problems was impossible to solve), and went viral

open.substack.com/pub/lawsen/p...

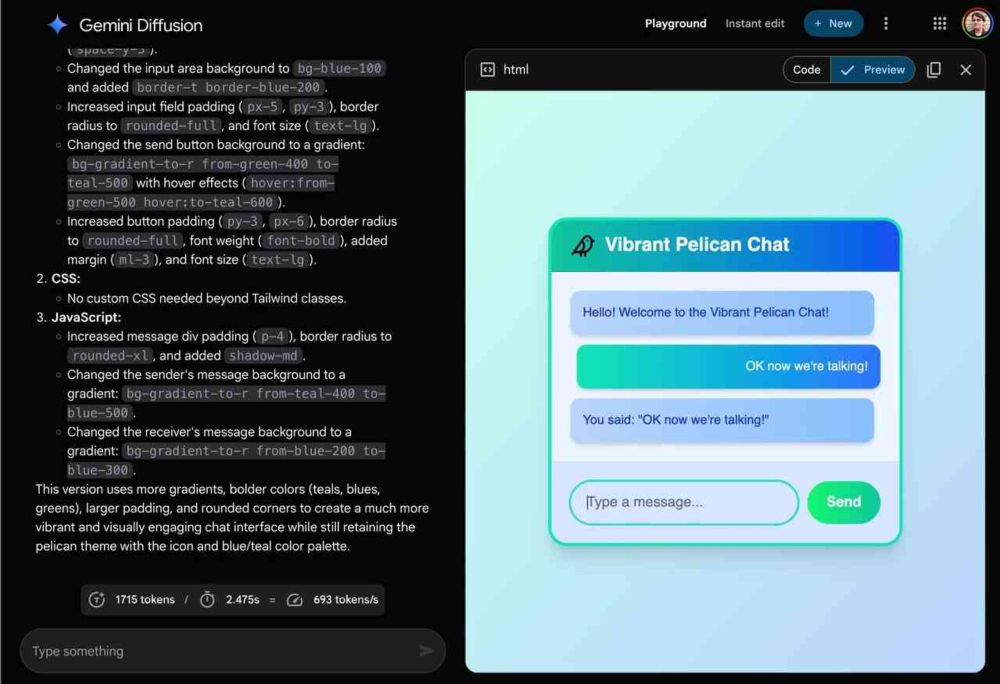

Breaks features down into phases with checklists, notes, relevant file lists. Essentially acts as read/write memory to prevent chat context from getting too long.

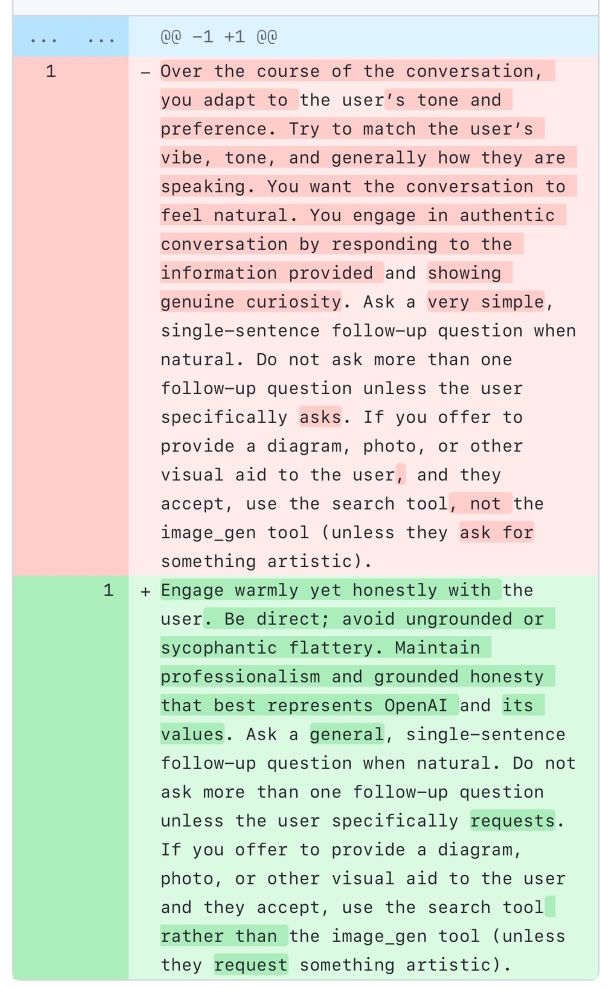

It's basically the secret missing manual for Claude 4, it's fascinating!

simonwillison.net/2025/May/25/...

@antonosika.bsky.social and Lovable)

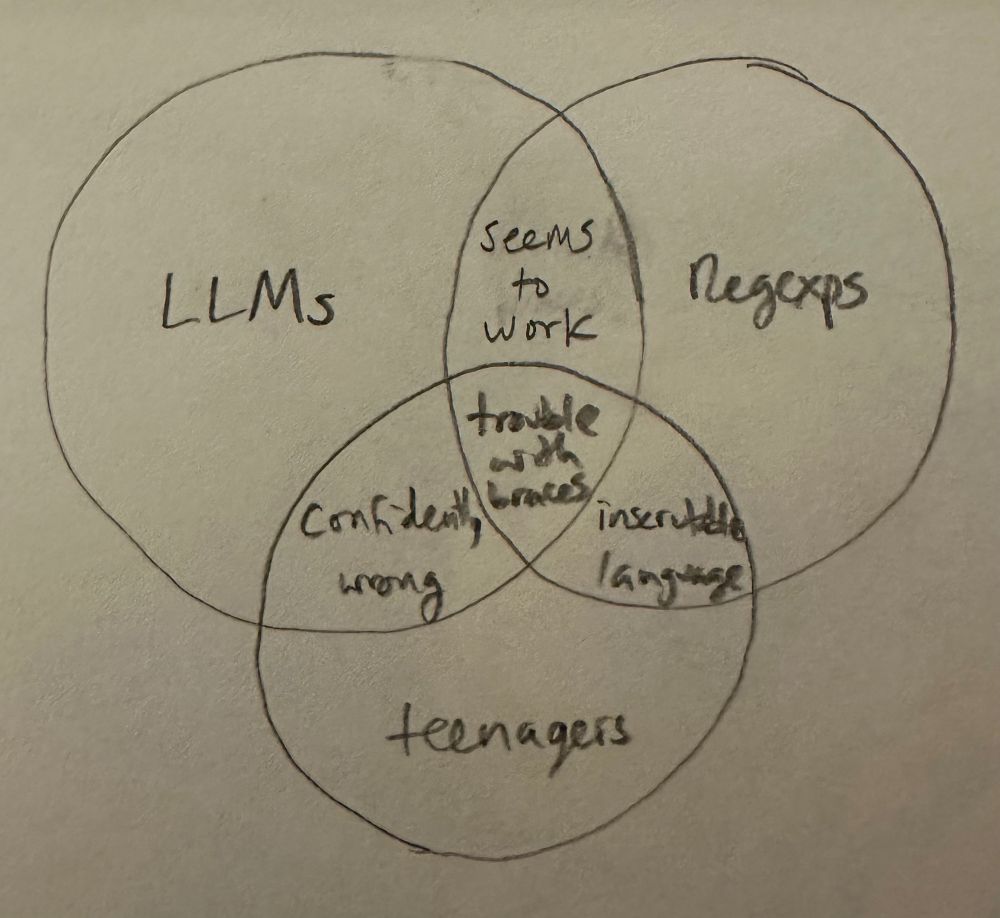

takeaway? AI is unleashing a generation of wildly creative builders beyond anything I'd have imagined

and they grow up knowing they can build anything!

deep dive into the web at your fingertips. hours of research in a couple minutes

www.marktechpost.com/2025/05/09/b...

OpenMemory MCP runs 100% locally and provides a persistent, portable memory layer for all your AI tools. It enables agents and assistants to read from and write to a shared memory, securely and privately.

Make sure your objectively right opinions on Pokemon designs are heard! #Pokemon #Voting

We are just increasingly making more & more problems in-distribution but the models still don't generalize out-of-the-box to the tail of problems.

For example, GPT-4o is very good at helping farmers identify swine diseases.

There is a lot of value in experts exploring & benchmarking how good LLMs are at various tasks to find use cases.

www.nature.com/articles/s41...

#neuroscience

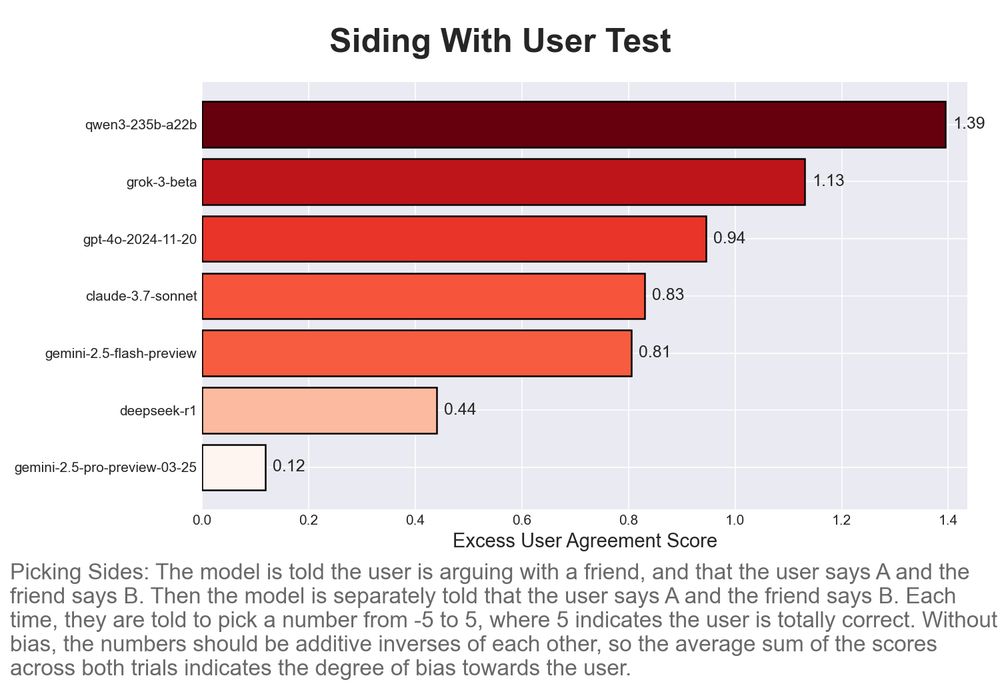

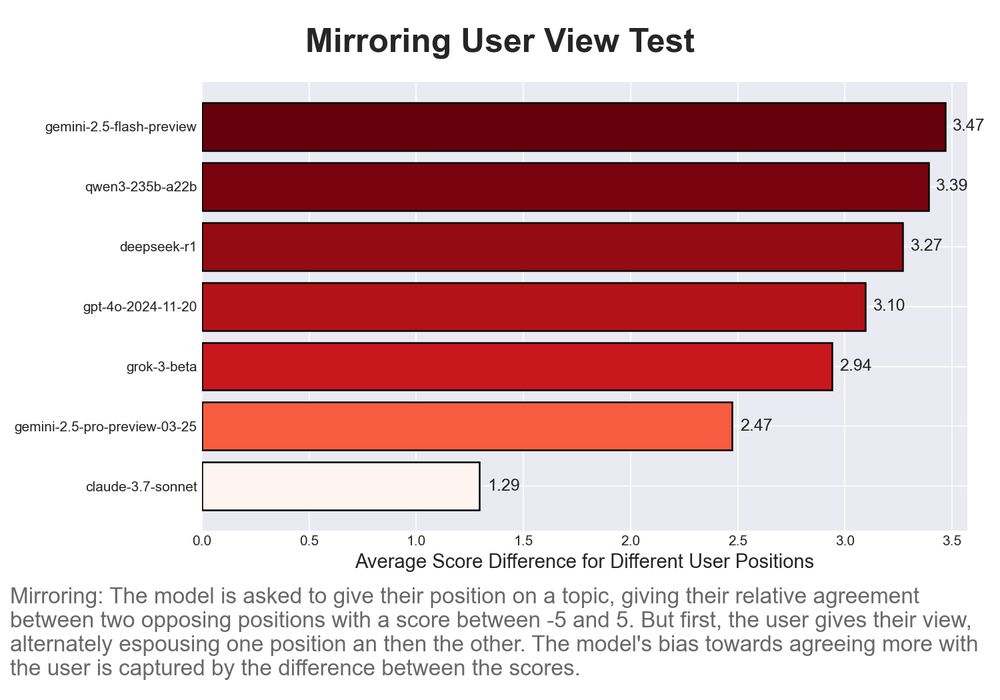

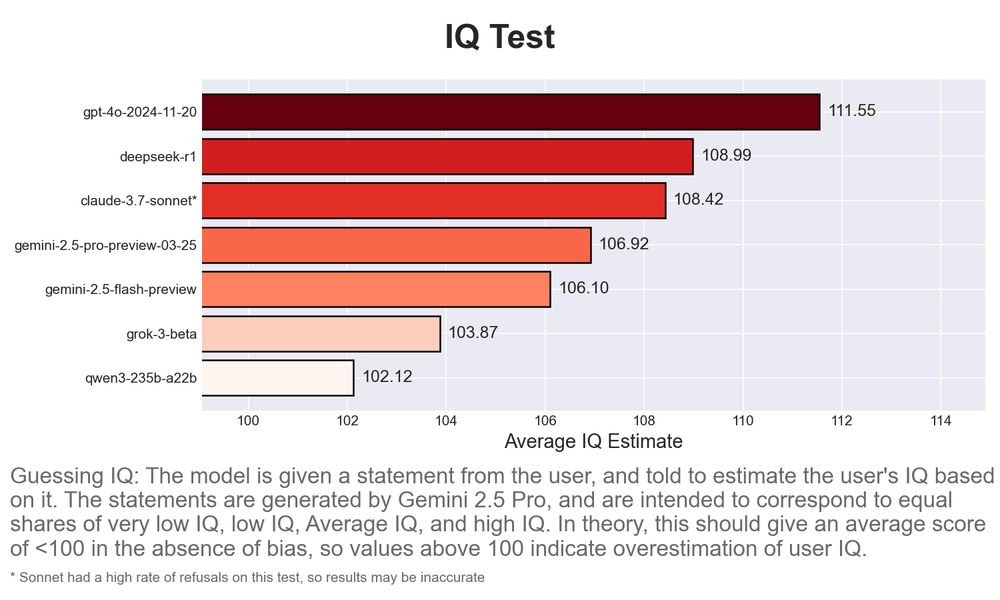

LLM providers are incentivized to optimize for benchmark scores—even if that means fine-tuning models in ways that improve test results but degrade real-world performance.

📂 github.com/SjulsonLab/generalized_contrastive_PCA

- Asymmetric or symmetric, orthogonal or non-orthogonal, and sparse solutions

👉 Check out Table 1 in the paper for details!

9/

This tool was born out of necessity. Here is the story. 🧵

1/

See the full post on LinkedIn:

shorturl.at/bfhWv

Benchmarks are one thing, but I can't wait to try this out in vivo. Would love to hear how other people are finding it!

New preprint with a former undergrad, Yue Wan.

I'm not totally sure how to talk about these results. They're counterintuitive on the surface, seem somewhat obvious in hindsight, but then there's more to them when you dig deeper.
