Neehar Kondapaneni

@therealpaneni.bsky.social

56 followers

500 following

19 posts

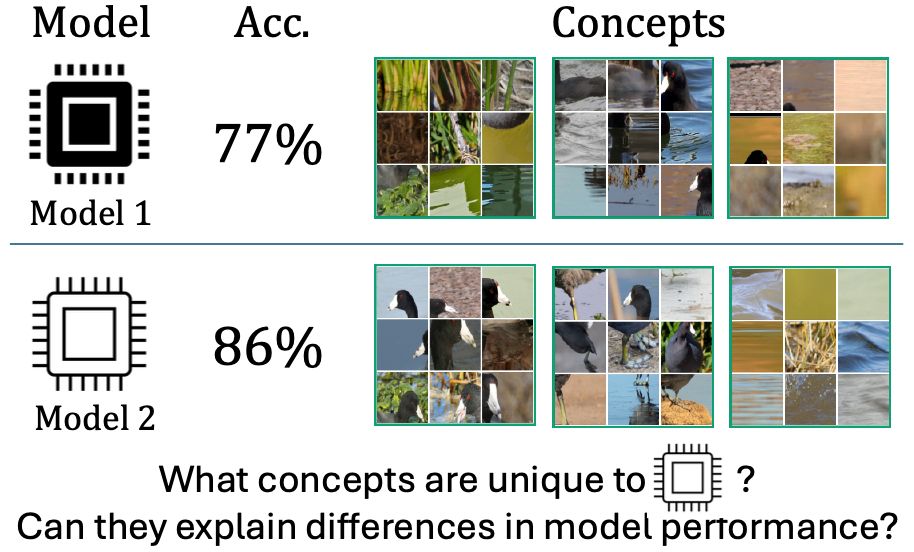

Researching interpretability and alignment in computer vision.

PhD student @ Vision Lab Caltech
