“Hosting a WebSite on a Disposable Vape”

bogdanthegeek.github.io/blog/project...

this explains how!

((the internet is healing 😌))

#IndieDev

🧵

The switch from training frontier models by next-token-prediction to reinforcement learning (RL) requires 1,000s to 1,000,000s of times as much compute per bit of information the model gets to learn from…

1/11

www.tobyord.com/writing/inef...
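A rough way to see where a range like "1,000s to 1,000,000s" could come from, under simplifying assumptions that are mine rather than the linked essay's: compute is taken to be proportional to tokens processed, next-token prediction is assumed to give about 1 bit of feedback per token, and an RL episode is assumed to give about 1 bit in total (a single pass/fail reward). Under those assumptions the compute-per-bit penalty is simply the episode length in tokens.

```python
# Sketch under stated assumptions (not the essay's own model):
#   - compute is proportional to tokens processed
#   - next-token prediction yields ~bits_per_token bits of feedback per token
#   - one RL episode yields ~bits_per_episode bits in total (one scalar reward)

def compute_per_bit_ratio(
    episode_tokens: int,
    bits_per_token: float = 1.0,
    bits_per_episode: float = 1.0,
) -> float:
    """How many times more compute RL spends per bit of feedback than pretraining."""
    pretrain_compute_per_bit = 1.0 / bits_per_token          # one token of compute per bits_per_token bits
    rl_compute_per_bit = episode_tokens / bits_per_episode   # a whole episode of compute per reward
    return rl_compute_per_bit / pretrain_compute_per_bit


if __name__ == "__main__":
    # Episode lengths spanning the range mentioned in the post.
    for n in (1_000, 1_000_000):
        print(f"{n:>9,} tokens per episode -> ~{compute_per_bit_ratio(n):,.0f}x compute per bit")
```

Raising `bits_per_token` above 1 (pretraining feedback is arguably richer than 1 bit per token) only widens the gap.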

Here is 1883 AD, 1255 AD, 44 AD, 2300 BC, 30,000 BC, and 65 million years ago

Yes, there was apparently wind on the moon back then; you are just going to have to suspend disbelief a bit.

That's why it may be surprising that this RCT by METR, evaluating selected open-source devs' speed, found that AI assistance *slowed them down*.

Be really careful with this stuff: attackers can trick your "agent" into stealing your private data simonwillison.net/2025/May/26/...

But in one of their examples it just quietly cheats its way through the puzzle — googling the answer then presenting it as if it solved it…

1/

🧵

8/8

That's *something* but doesn't look like intelligence.

7/n

www.goodthoughts.blog/p/shuffling-...

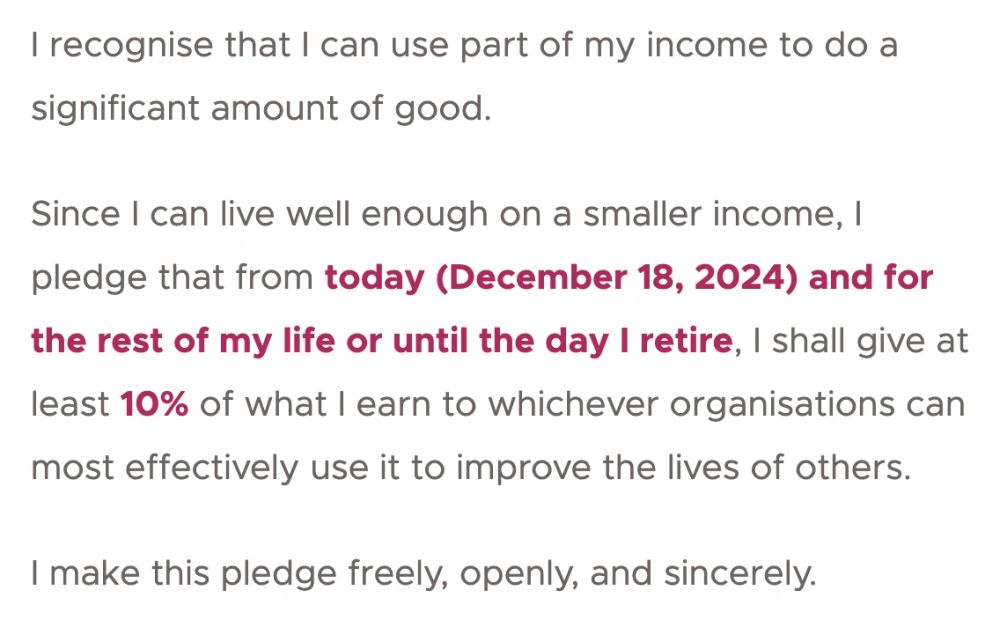

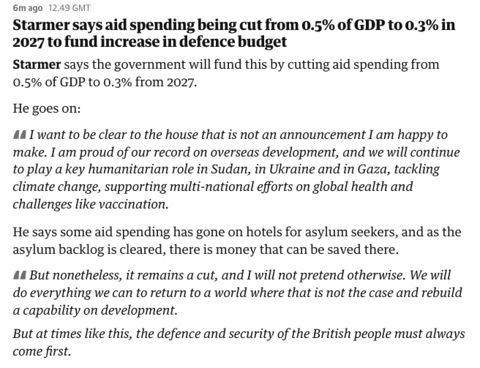

This is so bleak: thousands in low-income countries will die.

I get (and support) that we need to increase defence spending/capabilities in Europe, but surely there are better ways to fund it 🧵

x.com/WhiteHouse/s...

IMO this is a huge red flag for potential human rights abuses

Inference Scaling Reshapes AI Governance

The shift from scaling up the pre-training compute of AI systems to scaling up their inference compute may have profound effects on AI governance.

🧵

1/

www.tobyord.com/writing/infe...

I'm wondering if you can help me with the following maths problem. I'd like to find a property of pairs of distinct primes such that if X is any infinite set of primes, then one can find a pair of distinct primes in X ... 🧵

AI capabilities have improved remarkably quickly, fuelled by the explosive scale-up of resources being used to train the leading models. But the scaling laws that inspired this rush actually show very poor returns to scale. What’s going on?

1/

www.tobyord.com/writing/the-...
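To make "very poor returns to scale" concrete, here is a small sketch assuming loss follows a power law in training compute, loss ∝ compute^(-alpha). The exponent `alpha = 0.05` is an illustrative assumption (roughly the order of magnitude reported in early scaling-law work), not a figure taken from the linked essay. With an exponent that small, each 10x of compute trims loss by only about 11%, and halving the loss takes about a millionfold more compute.

```python
# Sketch assuming a pure power law: loss ∝ compute ** (-alpha).
# alpha = 0.05 is an illustrative assumption, not a measured value from the post.

ALPHA = 0.05


def loss_ratio(compute_multiplier: float, alpha: float = ALPHA) -> float:
    """Factor by which loss is multiplied when compute is multiplied by compute_multiplier."""
    return compute_multiplier ** (-alpha)


def compute_needed(loss_factor: float, alpha: float = ALPHA) -> float:
    """Compute multiplier required to bring loss down to loss_factor times its current value."""
    return loss_factor ** (-1.0 / alpha)


if __name__ == "__main__":
    print(f"10x compute   -> loss x {loss_ratio(10):.3f}")
    print(f"1000x compute -> loss x {loss_ratio(1000):.3f}")
    print(f"halving loss  -> needs ~{compute_needed(0.5):,.0f}x compute")
```

The two functions are inverses of each other, so `compute_needed(loss_ratio(k))` recovers `k`.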
