Dr. Mar Gonzalez-Franco

@twimar.bsky.social

Computer Scientist and Neuroscientist. Lead of the Blended Intelligence Research & Devices (BIRD) @GoogleXR. ex- Extended Perception Interaction & Cognition (EPIC) @MSFTResearch. ex- @Airbus applied maths

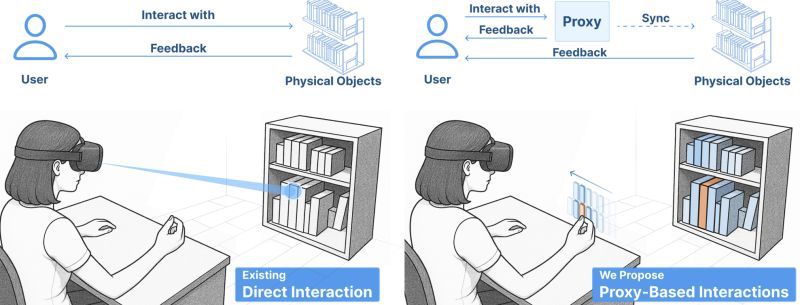

Over the last two years we have seen an acceleration in AI, but if AI is going to help humans in their day-to-day tasks, it will most probably be via XR. The issue then is that if a selection has real-world consequences, we will need great precision to interact.

August 5, 2025 at 8:16 AM

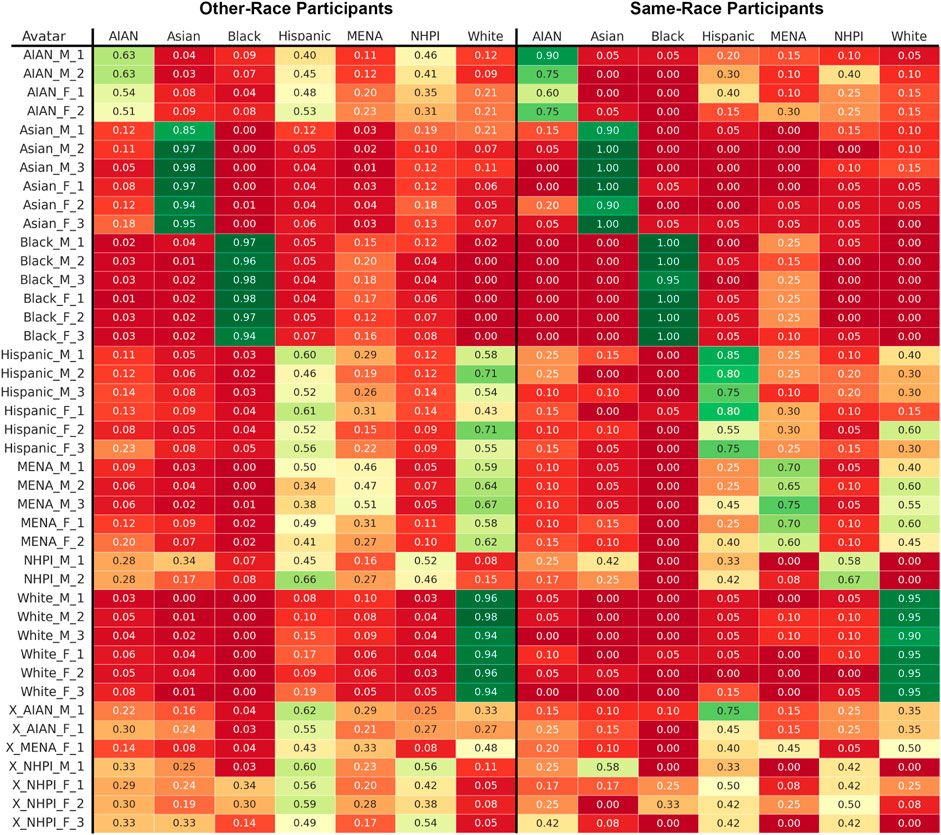

This is precisely what we tried to address and quantify in the VALID avatars. We found that ingroup participants were much better at determining the ethnicity of the avatars, while outgroup participants had low accuracy labeling MENA, AIAN, NHPI and Hispanic avatars. github.com/xrtlab/Valid...

April 28, 2025 at 7:13 PM

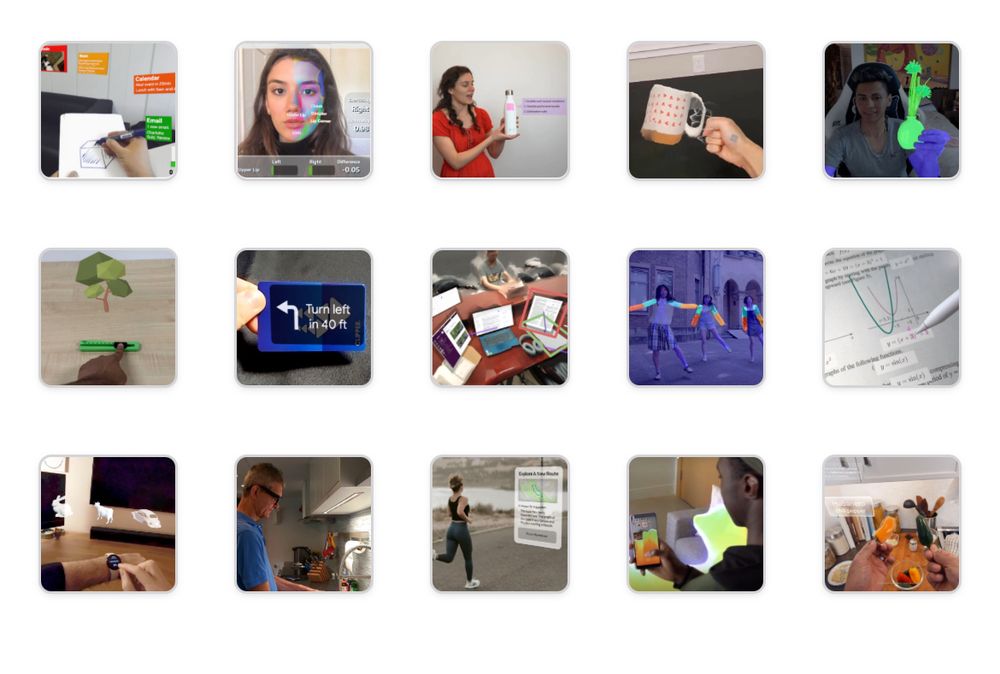

But really, as happens with all datasets, the key is “How to make data useful?” That was our goal with PARSE-Ego4D (parse-ego4d.github.io): we augment the existing narrations and videos by providing 18k suggested actions using the latest VLMs. This can be useful to train future AI agents.

March 25, 2025 at 2:30 AM

But also: not all avatars are created equal ⚠️. We did a deep study on preferences (presented at ISMAR 2023, arxiv.org/pdf/2304.01405) and realism matters quite a lot for work scenarios. Most people don’t find a cartoon avatar acceptable…

March 23, 2025 at 10:23 PM

Moreover... we put all of this in an interactive dataset for people to explore each of the techniques and geek around 😎 xrtexttrove.github.io Some are wild!

March 13, 2025 at 9:54 PM

3) There is a tendency to think custom hardware will solve this gap, but so far it hasn’t. The keyboard format still delivers the highest wpm.

March 13, 2025 at 5:47 PM

2) external surfaces and fingertips/transparency of hands seem to be preferable as ways to improve performance compared to midair gestures.

March 13, 2025 at 5:47 PM

1) VR text input has a ceiling effect: for as long as we only use 1 or 2 fingers, we will never get better than hunt-and-peck methods.

* More or less, we find that every additional finger used adds 5 wpm (words per minute). Users of multi-finger solutions (touch typists) can reach 40-50 wpm.

March 13, 2025 at 5:47 PM

Ever wondered why it is so hard to write in VR/XR? We did too 😅. Check out this new work to appear in ACM CHI: xrtexttrove.github.io

We (@arpitbhatia.bsky.social et al) analyzed 176 papers and text entry techniques inside XR and found that:

March 13, 2025 at 5:47 PM

Also look at this: “Mundus sine Caesaribus”, the CEO of Bluesky

April 4, 2025 at 5:59 PM

We will also present “Beyond the Phone: Exploring Context-Aware Interaction Between Mobile and Mixed Reality Devices” by Fengyuan Zhu et al., showcasing the many interesting ways in which we can bring our phones inside VR.

Paper:...

April 4, 2025 at 6:00 PM

At @IEEEVR we present “EmBARDiment: an Embodied AI Agent for Productivity in XR” by @riccardobovoHCI et al., showing how powerful AI agents can be when they are aware of the content around us, driven by contextual inputs like gaze.

Web: http://embardiment.github.io

Code: soon

April 4, 2025 at 5:54 PM

We will also present “Beyond the Phone: Exploring Context-Aware Interaction Between Mobile and Mixed Reality Devices” by Fengyuan Zhu et al., showcasing the many interesting ways in which we can bring our phones inside VR.

Paper: duruofei.com/papers/Zhu_B...

March 6, 2025 at 5:10 AM

Next week at #IEEEVR we present “EmBARDiment: an Embodied AI Agent for Productivity in XR” by @riccardobovo.bsky.social et al., showing how powerful AI agents can be when they are aware of the content around us, driven by contextual inputs like gaze.

Web: embardiment.github.io

Code: coming soon

March 6, 2025 at 5:08 AM

Thankful also to the many students we have had visiting us to refine and invent prototypes.

@nels.dev, @seanliu.io, @esenktutuncu.bsky.social, @riccardobovo.bsky.social, @anderschri.bsky.social, @dogadogan.bsky.social…

February 26, 2025 at 6:06 AM

I guess sometimes when we migrate we bring some baggage too... When @davledo.bsky.social and @fbrudy.bsky.social invited me to talk at @autodeskresearch.bsky.social, he prepared a super cute visual summary that deserves porting ;) The challenges stand stronger now that we kind of tackled the OS in the room 🐘

January 1, 2025 at 10:22 AM

And prototyping full scenarios of future interactions.

Like the work with Lystbaek et al. “Hands-on, Hands-off: Gaze-Assisted Bimanual 3D Interaction” UIST 2024

pure.au.dk/ws/portalfil...

December 30, 2024 at 9:39 AM

But the work also had many legs into top academic labs. Let’s dive into that:

For hand + eye, we worked with Profs. Hans Gellersen and Ken Pfeuffer for 2 years, figuring out design considerations: research.google/pubs/design-...

December 30, 2024 at 9:39 AM

Here are some of the topics (but we are open to more :)):

- Adaptive and Context-Aware AR

- Always-on AI Assistant by Integrating LLMs and AR

- AI-Assisted Task Guidance in AR

- AI-in-the-Loop On-Demand AR Content Creation

- AI for Accessible AR Design

- Real-World-Oriented AI Agents

December 30, 2024 at 9:04 AM

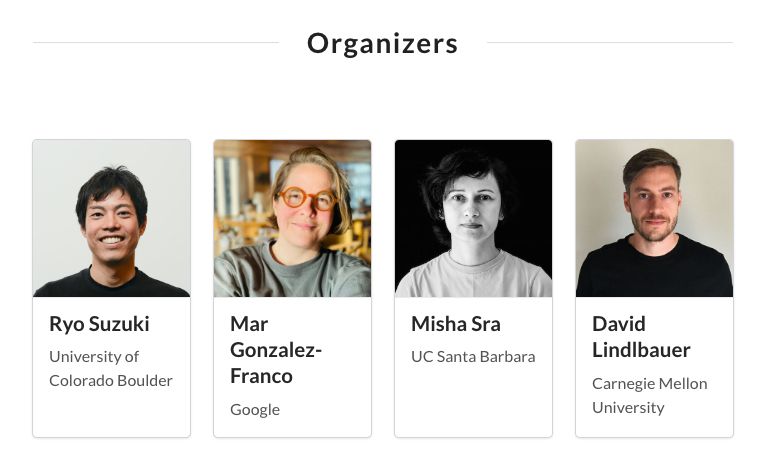

Ryo, Misha, David and I are organizing a workshop at #CHI2025 on *Everyday AR through AI-in-the-Loop*. If you are interested in topics such as adaptive AR, AR+AI Agents, or task guidance, consider submitting. We are looking for 1-2 pagers! Deadline is Feb 15th. xr-and-ai.github.io/

December 30, 2024 at 9:03 AM

Misinformation is just an oversight of integrity. For scientific studies it looks like the horoscope: “if you eat eggs you live longer”, but “you should cut eggs if you want longevity”. It’s nefarious for science. But IMO journalism’s lack of integrity is worse on the nefarious axis.

April 4, 2025 at 5:54 PM

Incredible line up of talks at the #FutureOfText Symposium. Thanks so much to the organizers @liquidizer @dgrigar.

In the pics: Ge Li from @DSE_Unibo, @KenPfeuffer from @AarhusUni_int and Ryan House from @UWMadison.

Great support by @SloanFoundation and @wsu.

April 4, 2025 at 5:54 PM

Great @hrvojebenko!! Impressed by the variety of wrist prototypes that you have built over the years. Your work has come a long way since our time at MSR. I ❤️ the end of the keynote, quoting @wasbuxton, also a former MSR colleague.

April 4, 2025 at 5:54 PM

This week at @ismarconf we will present two foundational pieces of work on Avatar Collaboration: #AvatarPilot and #MRTransformer.

As we add more people to a VR space, the chance of their spaces overlapping tends to zero. This work solves N people collaborating in dissimilar spaces. 🧵

April 4, 2025 at 6:00 PM

The @IEEEmetaverse symposium is kicking off. Wonderful lineup of talks. Looking forward to all the discussions.

April 4, 2025 at 6:00 PM
