Dr. Mar Gonzalez-Franco

@twimar.bsky.social

Computer Scientist and Neuroscientist. Lead of the Blended Intelligence Research & Devices (BIRD) @GoogleXR. ex- Extended Perception Interaction & Cognition (EPIC) @MSFTResearch. ex- @Airbus applied maths

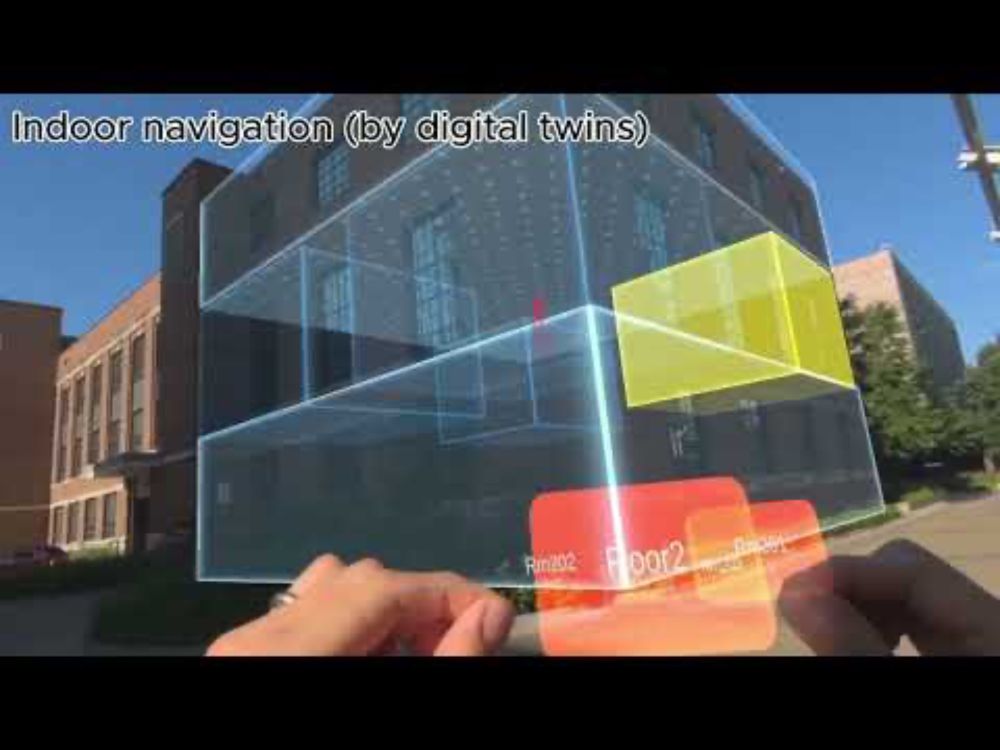

With that in mind, we envision Reality Proxy. Check out the video teaser of a world interactable via proxies: youtu.be/F2ul_68PrD0, and stay tuned for more at the ACM UIST Conference 2025 in Busan, Korea.

Paper: lnkd.in/dFXdc2Bw

Reality Proxy: Fluid Interactions with Real-World Objects in MR via Abstract Representations

YouTube video by Blended Intelligence Research & Devices

August 5, 2025 at 8:17 AM

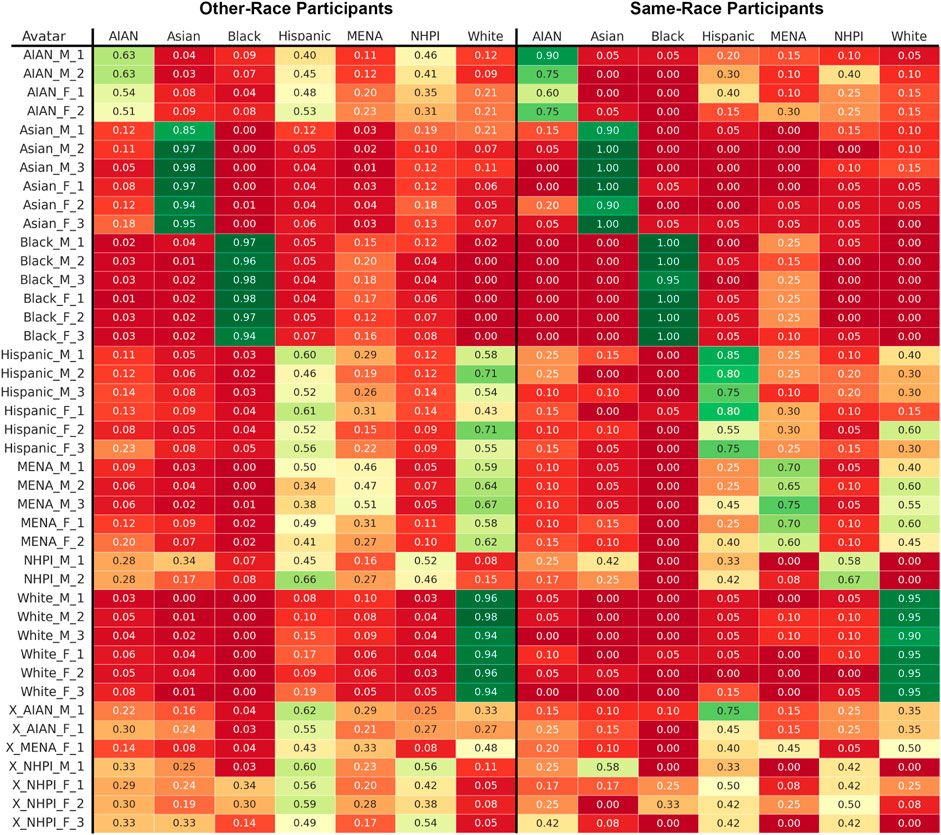

This was precisely something we tried to address and quantify in the VALID avatars. We found that ingroup participants were much better at determining the ethnicity of the avatars, while outgroup participants had low accuracy labeling MENA, AIAN, NHPI and Hispanic avatars. github.com/xrtlab/Valid...

April 28, 2025 at 7:13 PM

To me, experiments (on a homogeneous population) with 40+ participants are suspicious. Maybe they didn’t find significance with fewer participants, only trends, so they added people until the result was significant.

Sometimes a larger sample means a higher probability of significance. murphyresearch.com/sample-size-...

Sample Size: Is Bigger Always Better?

By now, many of us have seen the recent AT&T commercials featuring a man dressed in a suit asking a group of adorable small children seated around a table "Which is better…bigger or smaller?"

April 18, 2025 at 5:05 AM

You can check out the work next month at @iclr-conf.bsky.social Bi-Align (bialign-workshop.github.io) and FM-wild (fm-wild-community.github.io) workshops:

“PARSE-Ego4D: Personal Action Recommendation Suggestions for Egocentric Videos”

Or start playing right now :)

March 25, 2025 at 4:24 AM

But how do we know if our data is any good? We asked 35k humans on Prolific how correct and useful they found the suggested actions for each video. Now we are open-sourcing the new dataset alongside the human ranks and all the data generation prompts: github.com/google/parse...

GitHub - google/parse-ego4d: Dataset and code for generating 18,000 action suggestions via LLM queries for the EGO4D dataset, validated by 36,171 human annotations.

March 25, 2025 at 2:30 AM

But really, as with all datasets, the key question is “How to make data useful?” That was our goal with PARSE-Ego4D (parse-ego4d.github.io): we augment the existing narrations and videos with 18k suggested actions generated using the latest VLMs. This can be useful for training future AI agents.

March 25, 2025 at 2:30 AM

But also: Not all avatars are created equal ⚠️. We did a deep study on preferences (presented at ISMAR 2023, arxiv.org/pdf/2304.01405), and realism matters quite a lot for work scenarios. Most people don’t find a cartoon avatar acceptable…

March 23, 2025 at 10:23 PM

@ben.roadtovr.com I know you liked LocomotionVault.github.io so you will probably like this one too

March 14, 2025 at 9:53 PM
