@wolfstammer.bsky.social

120 followers

130 following

6 posts

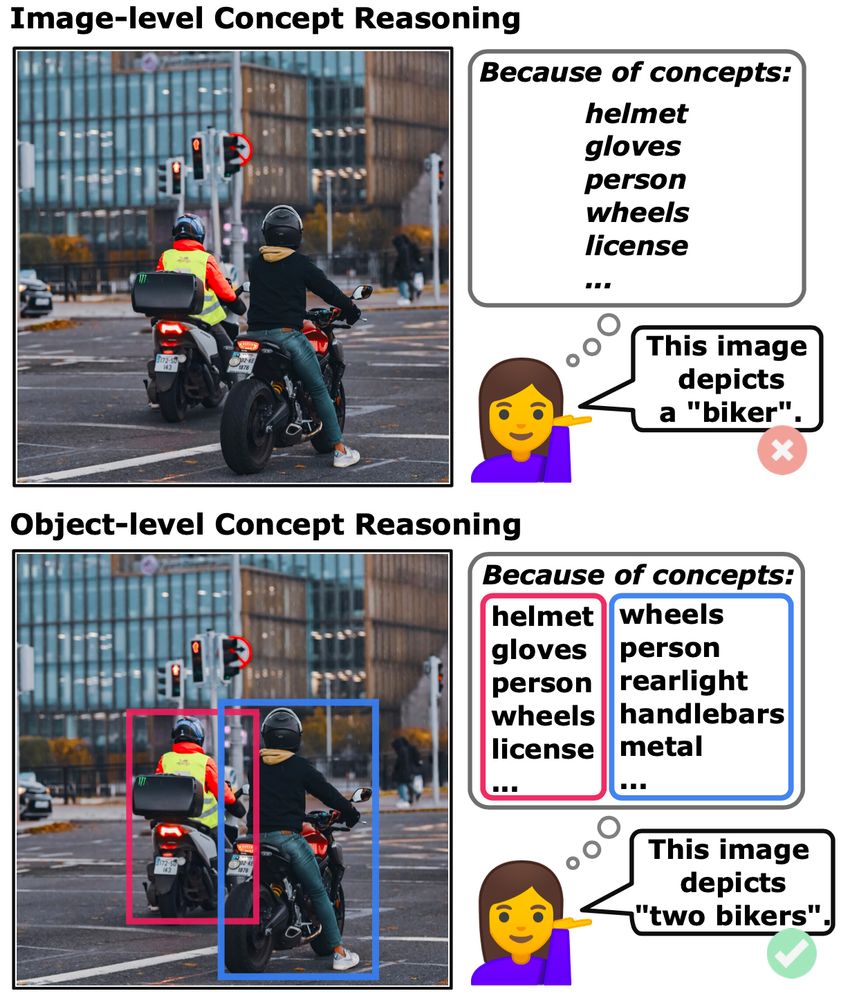

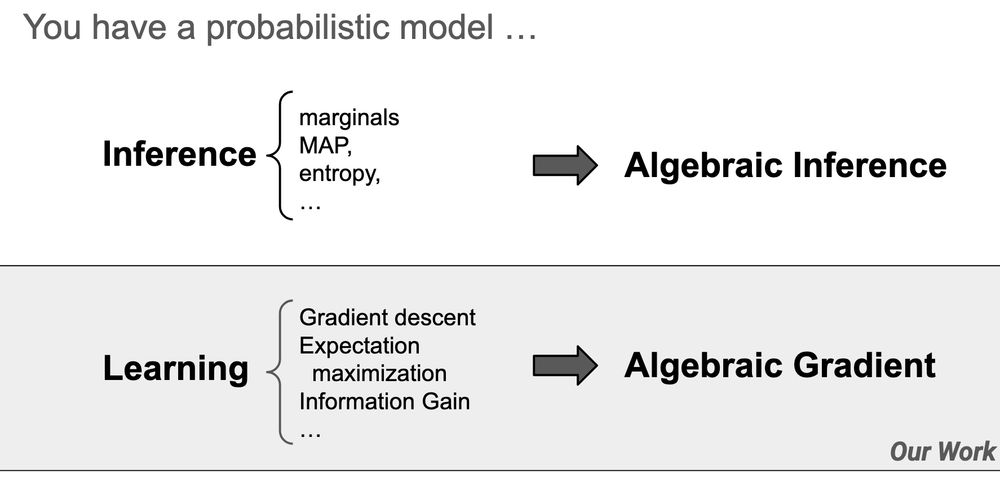

PhD candidate at AI & ML lab @ TU Darmstadt (he/him). Research on deep learning, representation learning, neuro-symbolic AI, explainable AI, verifiable AI and interactive AI

Reposted

TU Darmstadt

@tuda.bsky.social

· May 22

Two Clusters of Excellence for TU Darmstadt

A major success for Technische Universität Darmstadt: two of its research projects will be funded as Clusters of Excellence in the future. The Excellence Commission in the competition of the prestigious Exzell...

www.tu-darmstadt.de

Reposted

Antonia Wüst

@toniwuest.bsky.social

· May 2