Zhaochong An

@zhaochongan.bsky.social

150 followers

170 following

10 posts

PhD student at University of Copenhagen🇩🇰

Member of @belongielab.org, ELLIS @ellis.eu, and Pioneer Centre for AI🤖

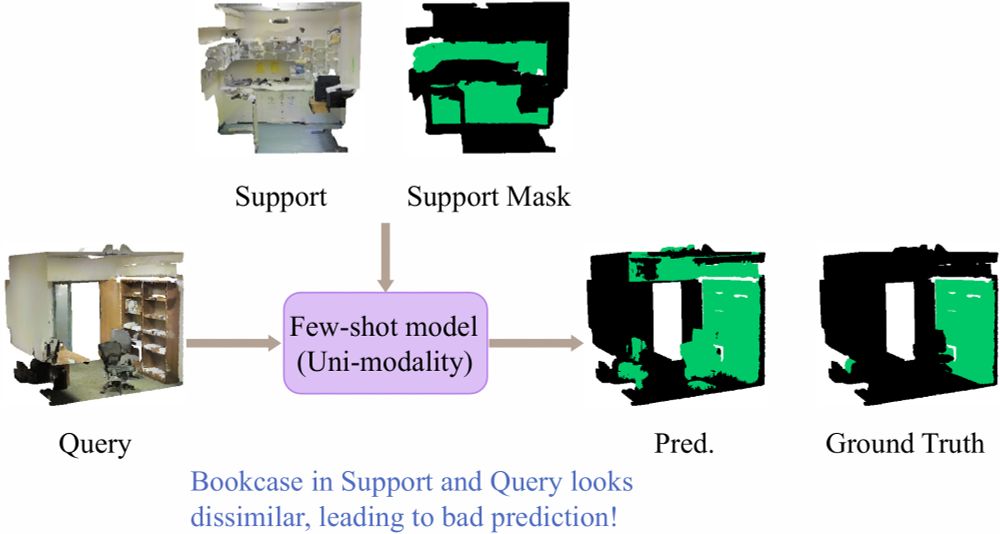

Computer Vision | Multimodality

MSc CS at ETH Zurich

🔗: zhaochongan.github.io/
