A new smartphone-based imaging system uses AI + autofluorescence to help dentists detect oral cancer early—fitting seamlessly into routine exams.

Read the #BiophotonicsDiscovery news story here: https://bit.ly/4qRrysc

Aufgaben (u.a.)

Erforschung und Implementierung moderner Vision-Modelle (z. B. YOLO, RT-DETRv2, ViT, Swin, ResNet, GNNs)

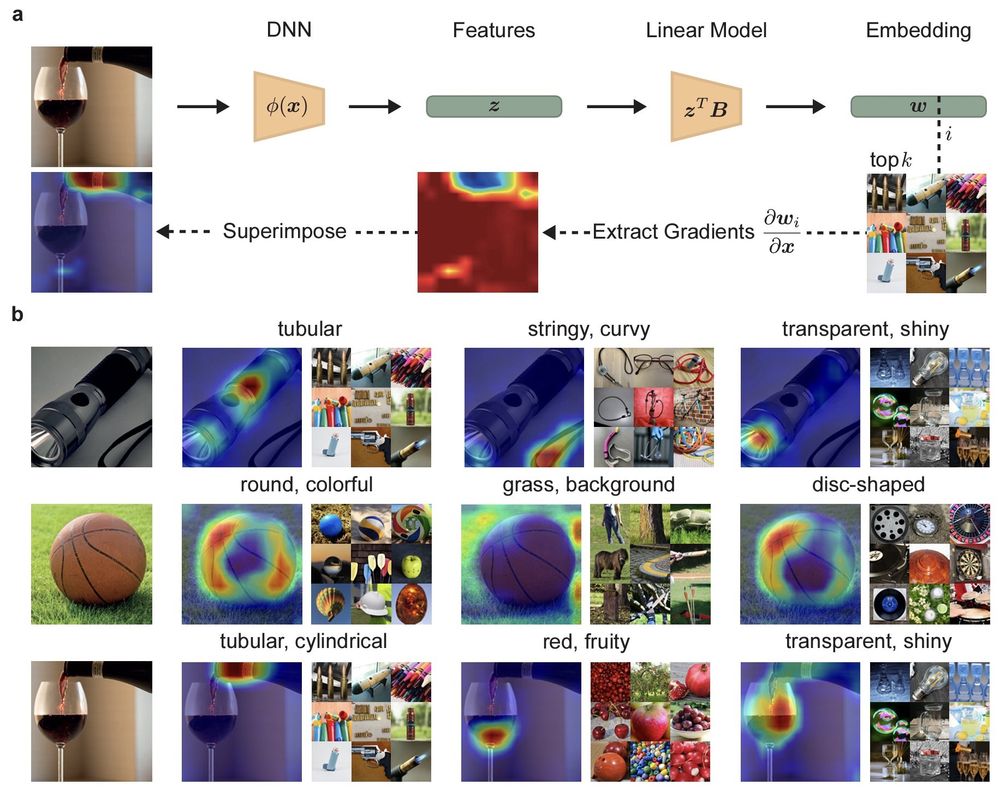

Entwicklung von Klassifikationssystemen (RISE, GradCAM, ViT Shapley u.a.)

jobs.fraunhofer.de/job/Darms...

PolypSeg-GradCAM: Towards Explainable Computer-Aided Gastrointestinal Disease Detection Using U-Net Based Segmentation and Grad-CAM Visualization on the Kvasir Dataset

https://arxiv.org/abs/2509.18159

jov.arvojournals.org/article.aspx...

#SPEetologia

This work was the July #ASABEditorsChoice for the #AnimalBehaviourJournal 👏👏👏

#DeepLearning #SexualDimorphism #AIinBiology #BirdResearch #SociableWeaver #Ornithology #GradCAM #WildlifeAI #CrypticDimorphism #MachineLearning #SexRecognition #AnimalAI

Implications. Based on the experimental results and recent advancements, we outline future research directions to enhance [6/7 of https://arxiv.org/abs/2503.08420v1]

(1) Choose Your Explanation: A Comparison of SHAP and GradCAM in Human Activity Recognition

🔍 More at researchtrend.ai/communities/FAtt
