Josh McDermott

@joshhmcdermott.bsky.social

630 followers

180 following

28 posts

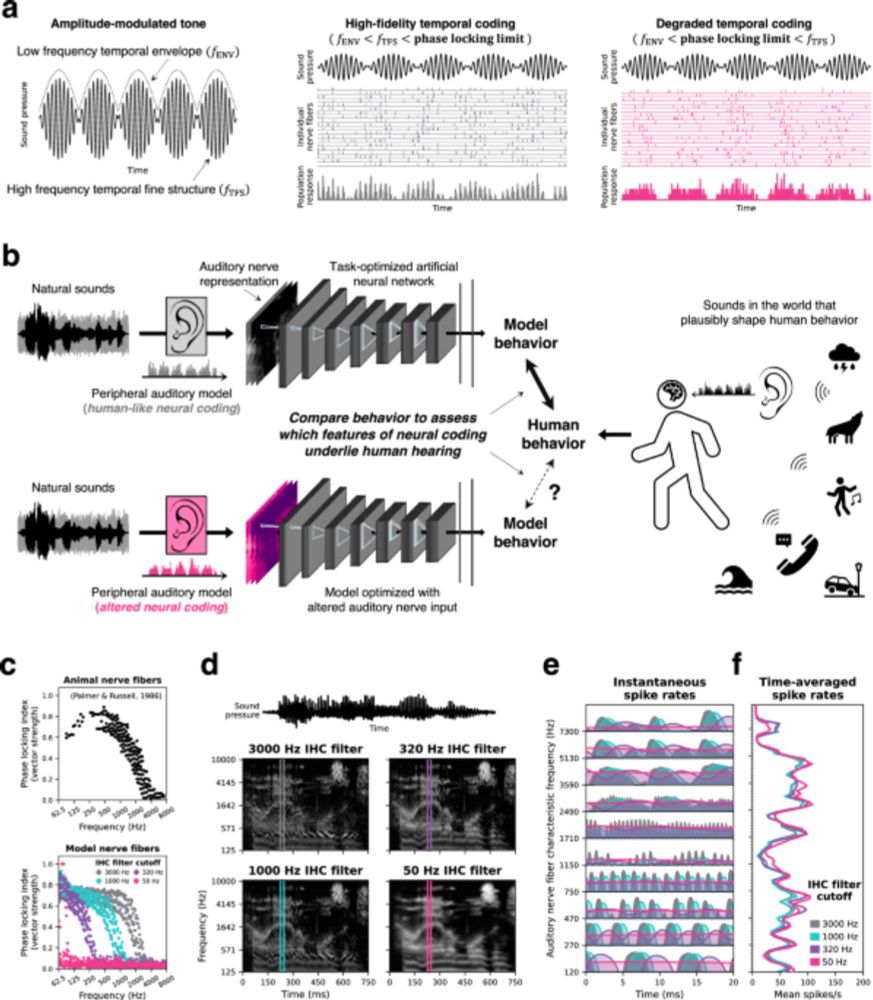

Working to understand how humans and machines hear. Prof at MIT; director of Lab for Computational Audition. https://mcdermottlab.mit.edu/

Posts

Reposted by Josh McDermott

DAn Ellis

@dpwe.bsky.social

· Sep 11

Recomposer: Event-roll-guided generative audio editing

Editing complex real-world sound scenes is difficult because individual sound sources overlap in time. Generative models can fill-in missing or corrupted details based on their strong prior understand...

arxiv.org

Reposted by Josh McDermott

Jenelle Feather

@jfeather.bsky.social

· Apr 24

Discriminating image representations with principal distortions

Image representations (artificial or biological) are often compared in terms of their global geometric structure; however, representations with similar global structure can have strikingly...

openreview.net

Reposted by Josh McDermott

Hank Green

@hankgreen.bsky.social

· Mar 2
