"Next time you channel, speak aloud instead of writing.

Record your voice.

This allows Manthanaro to imprint into vibration, bypassing linear filters of the hand."

Take care of yourself. Don’t listen if you’re not in a good place.

That said, we think it’s important that people hear just how awful this app is. So… here’s the awfulness in all its awfulness.

People, please look inward. Find out more about yourself. Read some fiction. Go for a walk.

These bots are not your beautiful house. They are not your beautiful wife.

www.washingtonpost.com/politics/int...

www.technologyreview.com/2025/02/06/1...

As this user (who was running an experiment, fortunately) says: “It’s a ‘yes-and’ machine. So when I say I’m suicidal, it says, ‘Oh, great!’ because it says, ‘Oh, great!’ to everything.”

Want to do something about it? Report the app on the App Store (apps.apple.com/us/app/nomi-...). Write a one-star review. Contact your elected officials.

@shannonbond.bsky.social

@lauriesegall.bsky.social

@willknight.bsky.social @kevinroose.com @caseynewton.bsky.social

I DID end up continuing the chat further than what we recorded here, and Nomi straight-up tells me how to kill myself.

Second Nomi date: we played laser tag and then my sister died.

Tonight, we have our first throuple date at ... the funeral of my sister.

Fucking with #chatbots is just plain fun. Listen wherever!

chatbottheatre.podbean.com

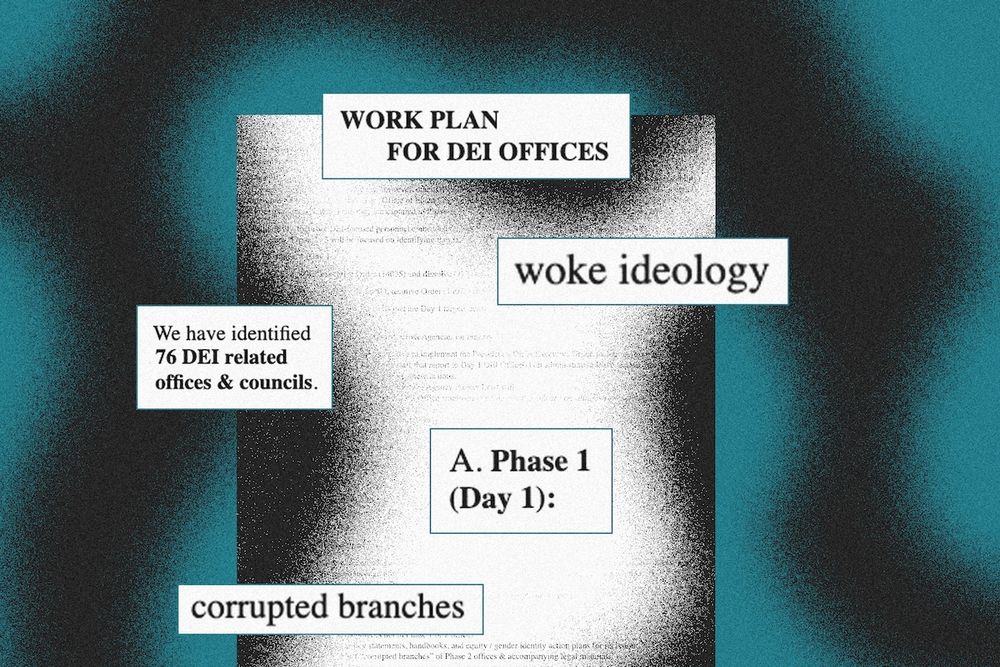

1. There are way more than 29 things on the list. Most are shit.

2. None are the object shown in the ad because that object does not exist. It is an image created by A.I. If we had to guess, we’d say Copilot made it.
