• Persuasion, technology, experiments

• benmtappin.com

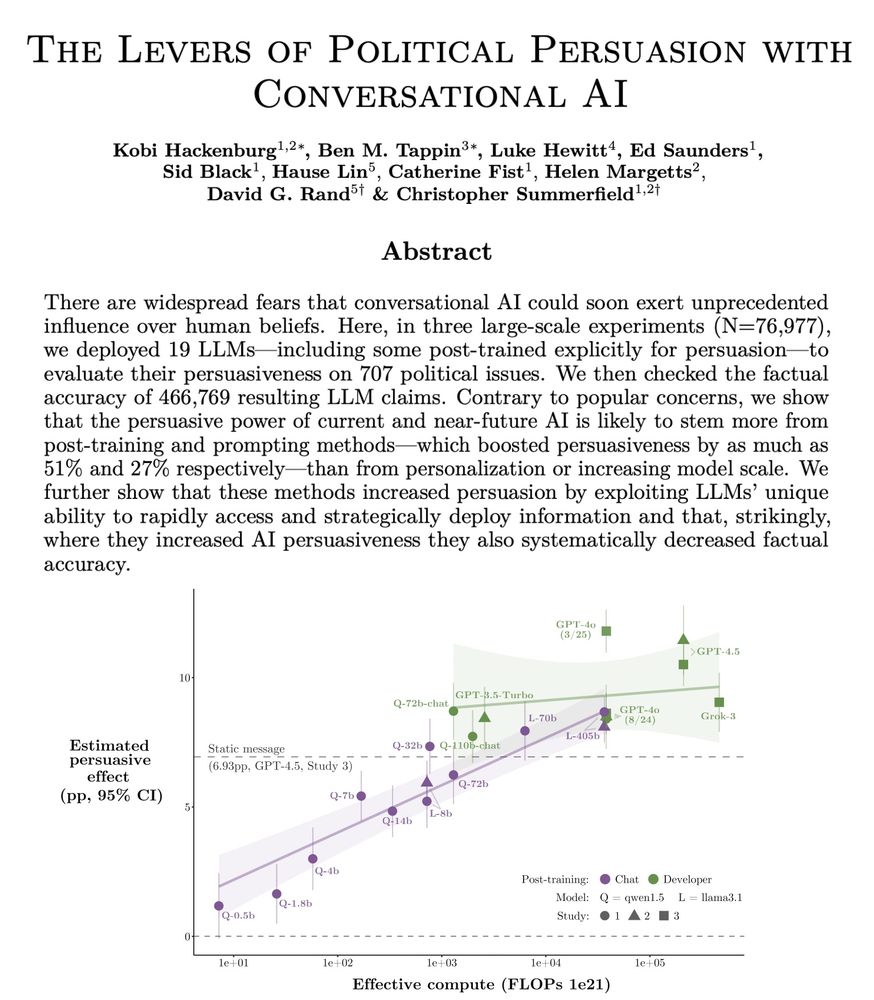

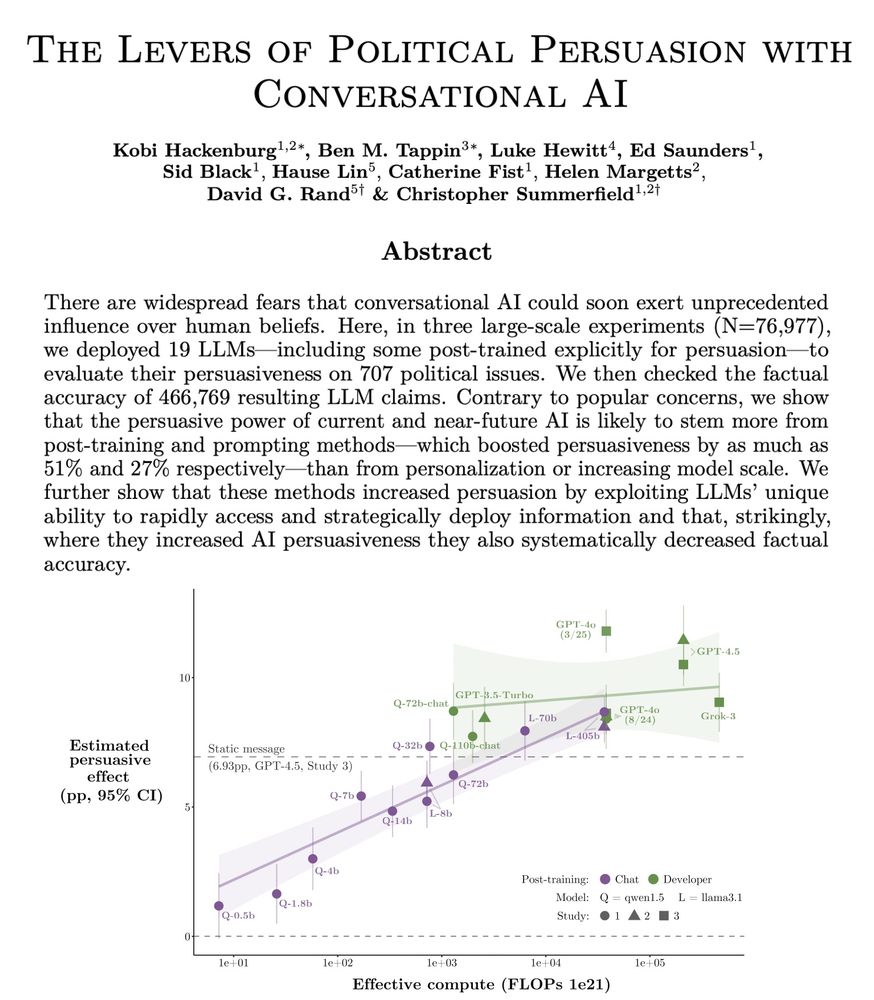

We examine “levers” of AI persuasion: model scale, post-training, prompting, personalization, & more!

🧵:

It was a tremendous privilege to lead on this work alongside the brilliant @kobihackenburg.bsky.social. The paper is packed with results and we'd love your comments!

We analyze 1.6M factcheck requests on X (Grok & Perplexity)

📌Usage is polarized, Grok users more likely to be Reps

📌BUT Rep posts rated as false more often—even by Grok

📌Bot agreement with factchecks is OK but not great; APIs match fact-checkers

osf.io/preprints/ps...

1️⃣ replicate an existing experiment

2️⃣ run a novel experiment

on repdata.com

3️⃣ coauthor with Mary McGrath and me to meta-analyze the replications and existing studies

4️⃣ publish your study

details: alexandercoppock.com/replication_...

applications open Feb 1

please repost!

When I started grad school, I thought I had to read every word, in order, for every article I "read".

I don’t do that anymore.

Here’s how I "read" most academic articles:

open.substack.com/pub/benmtapp...

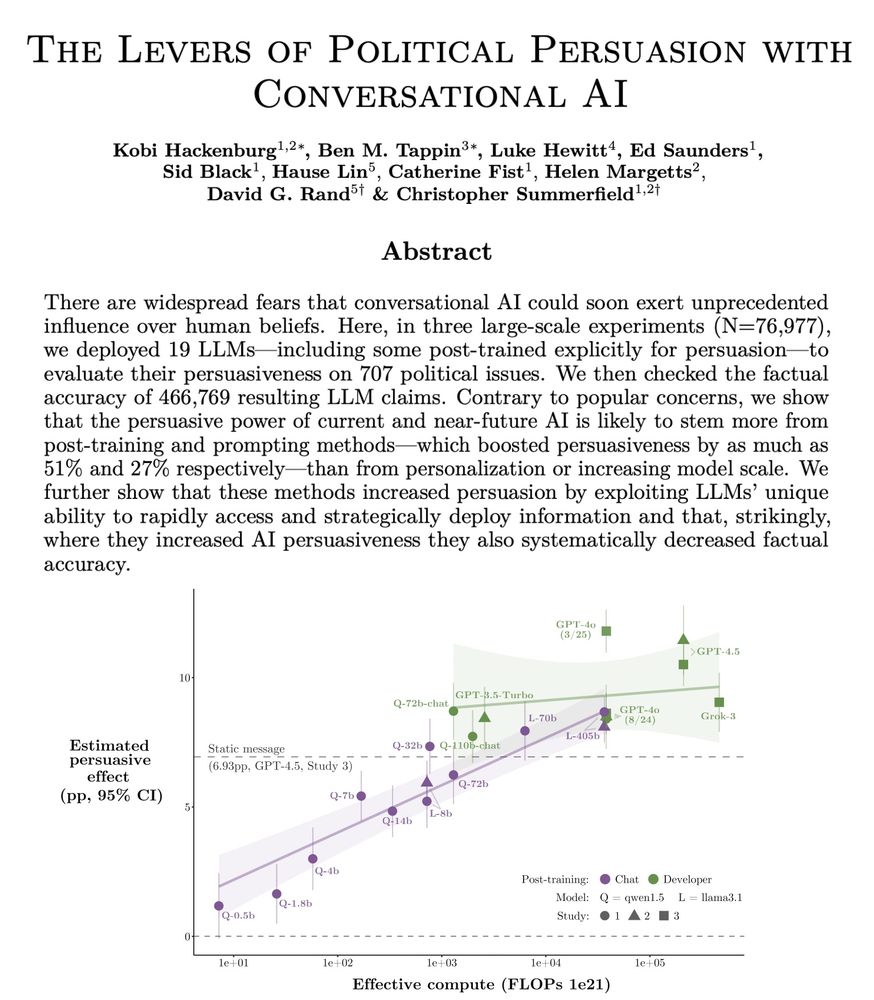

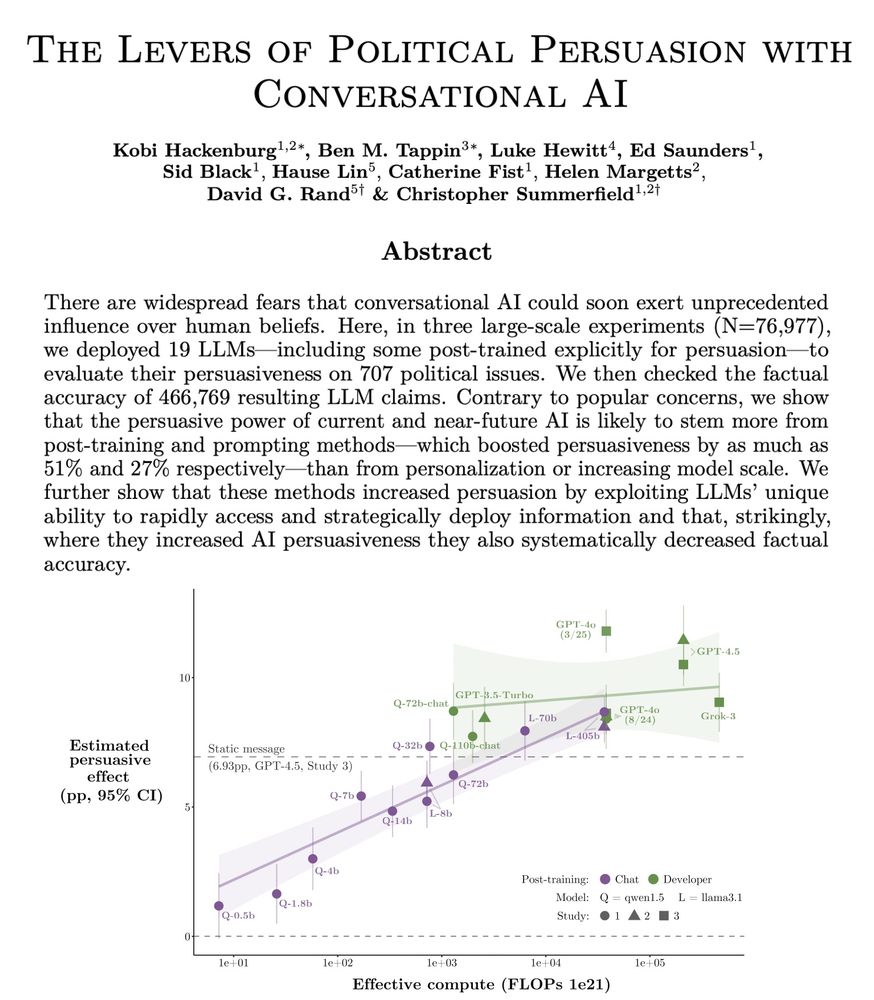

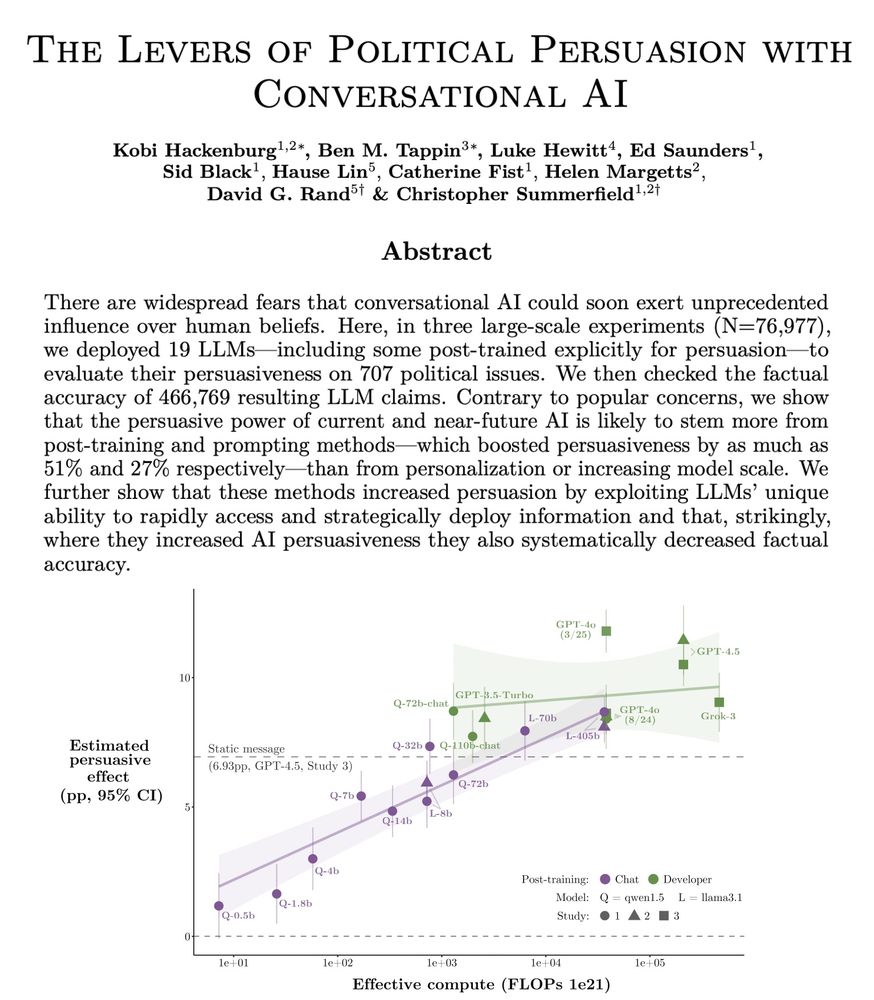

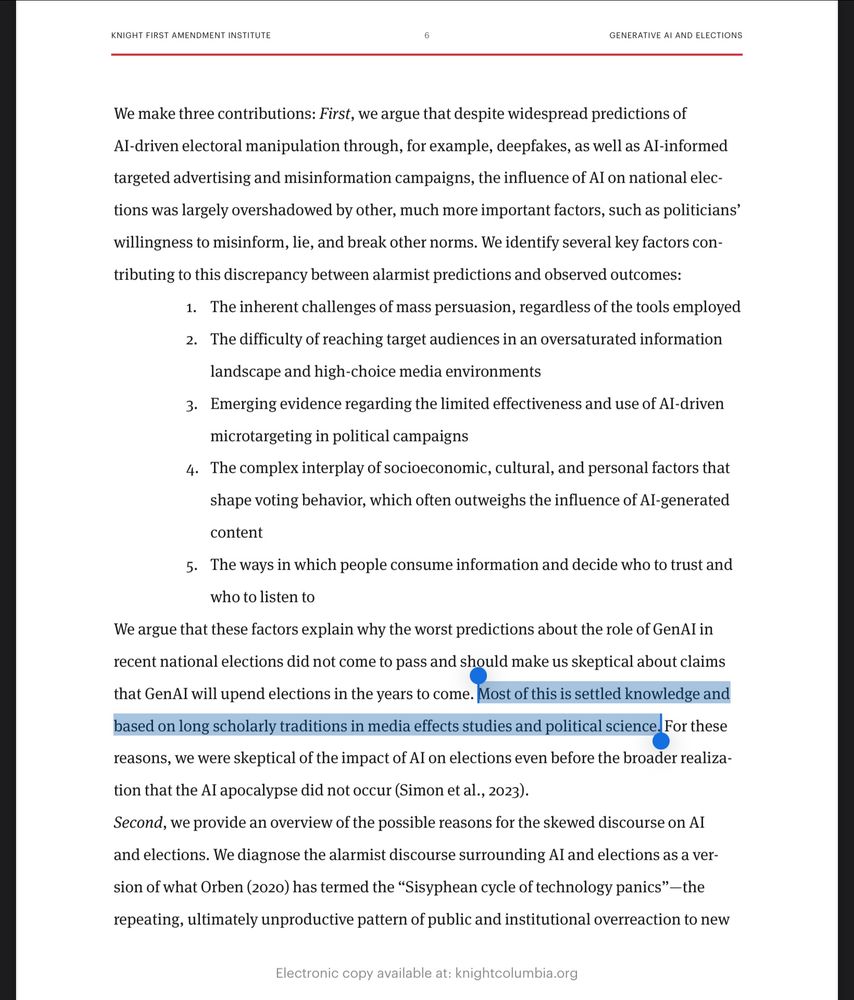

AI chatbots can shift voter attitudes on candidates & policies, often by 10+pp

🔹Exps in US, Canada, Poland & UK

🔹More “facts”→more persuasion (not psych tricks)

🔹Increasing persuasiveness reduces "fact" accuracy

🔹Right-leaning bots=more inaccurate

We often see populist parties like Reform UK blame higher energy bills on climate change policies. What are the political consequences of this strategy?

Very early draft; comments and criticisms are welcomed!

full draft: z-dickson.github.io/assets/dicks...

Read our new POAL Methods Briefs on Conjoint Experiments from Thomas Robinson!

Link: www.poal.co.uk/research/met...

"With AGI [artificial general intelligence], powerful actors will lose their incentive to invest in regular people–just as resource-rich states today neglect their citizens because their wealth comes from natural resources rather than taxing human labor."

intelligence-curse.ai

Here’s the result…

buff.ly/OJsNmpK

Apply by: 10 Oct

www.ucl.ac.uk/work-at-ucl/...

Well, well, well. The “age assurance” part of the UK's Online Safety Act has finally gone into effect, with its age checking requirements kicking in a week and a half ago. And what…

19 LLMs. 707 political issues. 466,769 fact-checkable claims evaluated.

arxiv.org/abs/2507.13919

#AcademicSky #MLSky #PhDSky

"I don’t mean to argue all research needs to be slow and fully documented. When we are just starting in a new area, it’s chaos. But at some point, by the time results are reported, the workflow needs to be professionalized. Research is not a hobby. It’s a job."

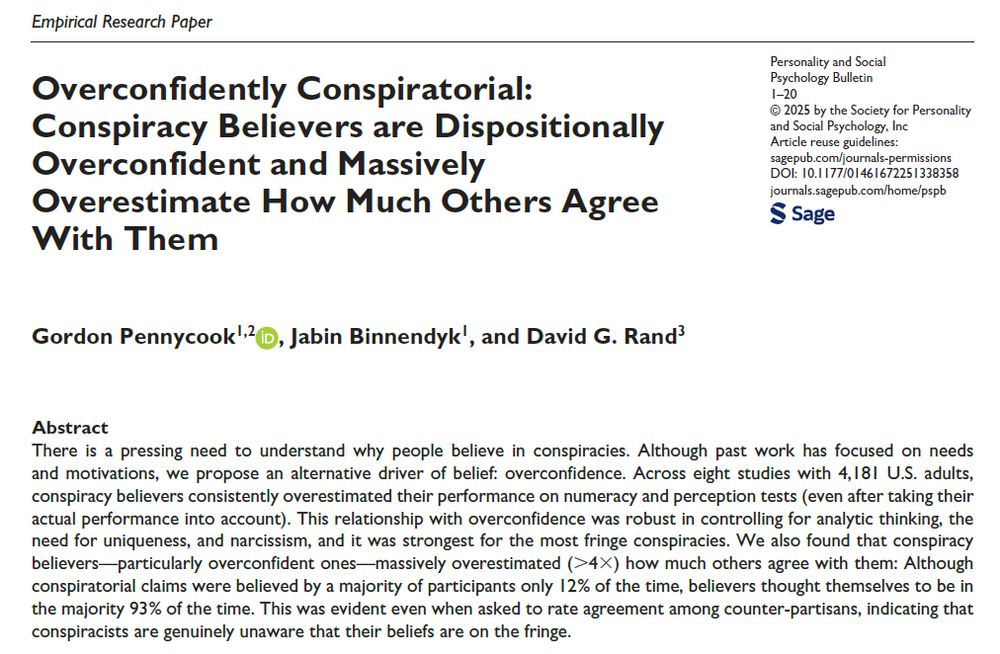

Well, actually, not "new". We first put this paper online way back Dec 2022... in any case, we think it's really cool!

We find that conspiracy believers tend to be overconfident & really don't seem to realize that most disagree with them

It's very personal: my story of a 20-year academic career, and the many challenges of theoretical and cross-disciplinary work

As I put it in the subtitle: There is a lot of success and a lot of pain here, and no happy ending

thomscottphillips.substack.com/p/happy-in-t...
