Work w/ @byron.bsky.social

Link: arxiv.org/abs/2511.00177

→ buff.ly/HkChr4Q

Our paper (co w/ Vinith Suriyakumar) on syntax-domain spurious correlations will appear at #NeurIPS2025 as a ✨spotlight!

+ @marzyehghassemi.bsky.social, @byron.bsky.social, Levent Sagun

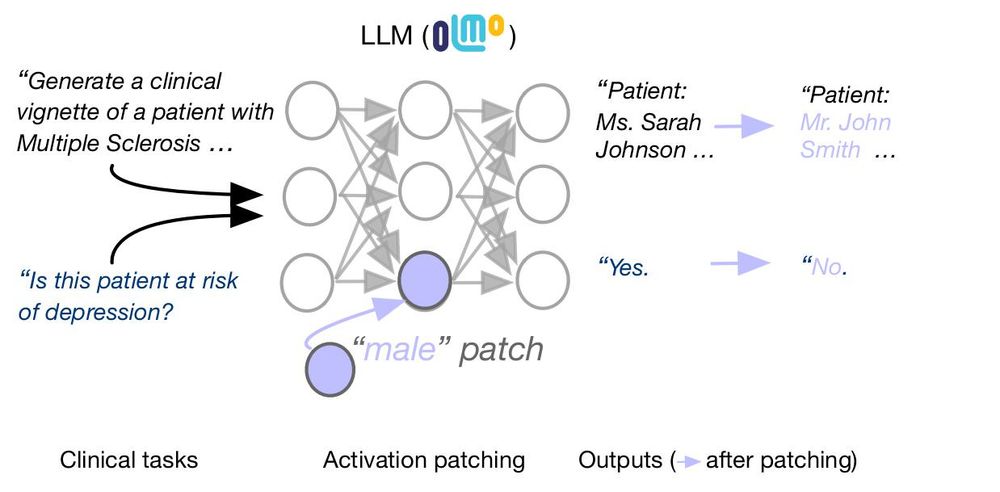

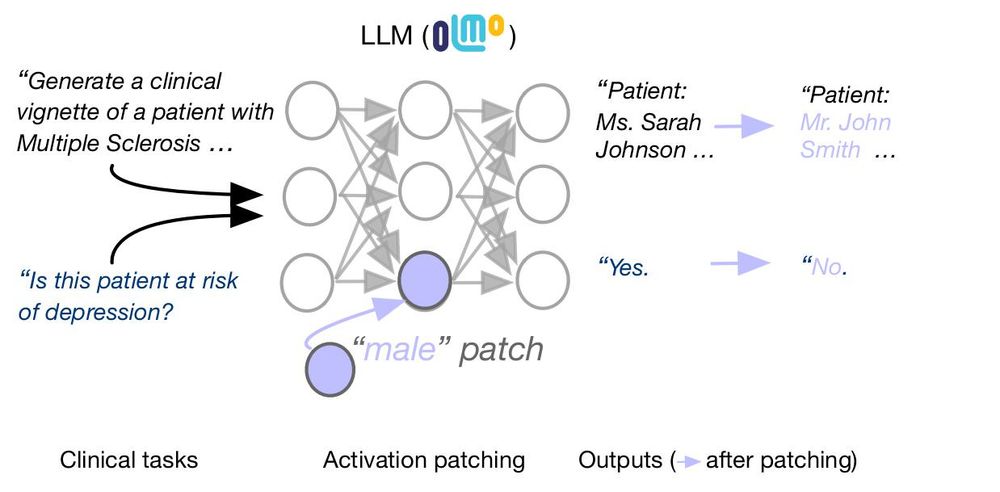

Work w/ @arnabsensharma.bsky.social, @silvioamir.bsky.social, @davidbau.bsky.social, @byron.bsky.social

arxiv.org/abs/2502.13319

I want to draw your attention to a COLM paper by my student @sfeucht.bsky.social that has totally changed the way I think and teach about LLM representations. The work is worth knowing.

And you can meet Sheridan at COLM, Oct 7!

bsky.app/profile/sfe...

In our new work (w/ @tuhinchakr.bsky.social, Diego Garcia-Olano, @byron.bsky.social ) we provide a systematic attempt at measuring AI "slop" in text!

arxiv.org/abs/2509.19163

🧵 (1/7)

8/8

The full paper can be found at arxiv.org/abs/2502.07963

goodfire.ai/ for sponsoring! nemiconf.github.io/summer25/

If you can't make it in person, the livestream will be here:

www.youtube.com/live/4BJBis...

We’re excited to release FactEHR — a new benchmark to evaluate factuality in clinical notes. As generative AI enters the clinic, we need rigorous, source-grounded tools to measure what these models get right — and what they don’t. 🏥 🤖

Probably not. In our study, even experts struggled to verify Reddit health claims using end-to-end systems.

We show why—and argue fact-checking should be a dialogue, with patients in the loop

arxiv.org/abs/2506.20876

🧵1/

I put together a Google Form that should take no longer than 10 minutes to complete: forms.gle/oWxsCScW3dJU...

If you can help, I'd appreciate your input! 🙏

In our new preprint, we find that LLMs are susceptible to biased reporting of clinical treatment benefits in abstracts—more so than human experts. 📄🔍 [1/7]

Full Paper: arxiv.org/abs/2502.07963

🧵👇

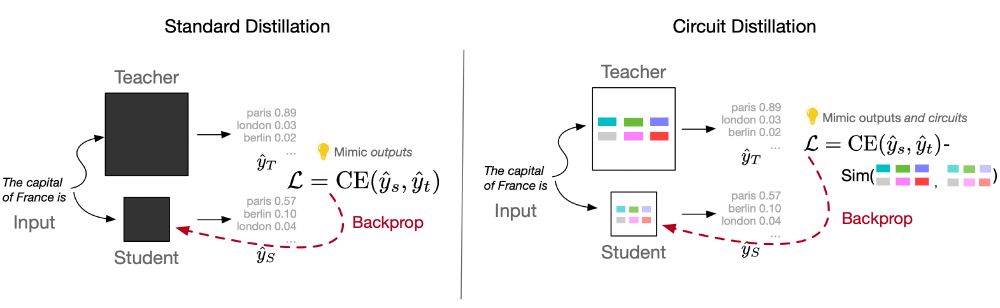

🔗 Full paper: arxiv.org/abs/2502.06659

dsthoughts.baulab.info

I'd be interested in your thoughts.

Simply the best fully open models yet.

Really proud of the work & the amazing team at

@ai2.bsky.social
