Part of UT Computational Linguistics https://sites.utexas.edu/compling/ and UT NLP https://www.nlp.utexas.edu/

Language models (LMs) are remarkably good at generating novel well-formed sentences, leading to claims that they have mastered grammar.

Yet they often assign higher probability to ungrammatical strings than to grammatical strings.

How can both things be true? 🧵👇

(With thanks to Lisa Chuyuan Li who took this photo in Suzhou!)

Also delighted the ACL community continues to recognize unabashedly linguistic topics like filler-gaps... and the huge potential for LMs to inform such topics!

They perform at chance for making inferences from certain discourse connectives expressing concession

We investigate if LMs capture these inferences from connectives when they cannot rely on world knowledge.

New paper w/ Daniel, Will, @jessyjli.bsky.social

PLSemanticsBench - where formal meets informal!

arxiv.org/abs/2510.03415

Team: Aditya Thimmaiah, Jiyang Zhang, Jayanth Srinivasa, Milos Gligoric

LLMs aren't interpreting rules -- they're recalling patterns.

Their "understanding" is promising... but shallow.

💡It's time to test semantics, not just syntax.💡

To move from surface-level memorization → true symbolic reasoning.

Models that were "near-perfect" drop to single digits. 😬

We built **PLSemanticsBench** to find out.

The results: a wild mix.

✅The Brilliant:

Top reasoning models can execute complex, fuzzer-generated programs -- even with 5+ levels of nested loops! 🤯

❌The Brittle: 🧵

Tuesday morning: @juand-r.bsky.social and @ramyanamuduri.bsky.social 's papers (find them if you missed them!)

Wednesday pm: @manyawadhwa.bsky.social 's EvalAgent

Thursday am: @anirudhkhatry.bsky.social 's CRUST-Bench oral spotlight + poster

Asst or Assoc.

We have a thriving group sites.utexas.edu/compling/ and a long proud history in the space. (For instance, fun fact, Jeff Elman was a UT Austin Linguistics Ph.D.)

faculty.utexas.edu/career/170793

🤘

In our new work (w/ @tuhinchakr.bsky.social, Diego Garcia-Olano, @byron.bsky.social ) we provide a systematic attempt at measuring AI "slop" in text!

arxiv.org/abs/2509.19163

🧵 (1/7)

@siyuansong.bsky.social Tue am introspection arxiv.org/abs/2503.07513

@qyao.bsky.social Wed am controlled rearing: arxiv.org/abs/2503.20850

@sashaboguraev.bsky.social INTERPLAY ling interp: arxiv.org/abs/2505.16002

I’ll talk at INTERPLAY too. Come say hi!

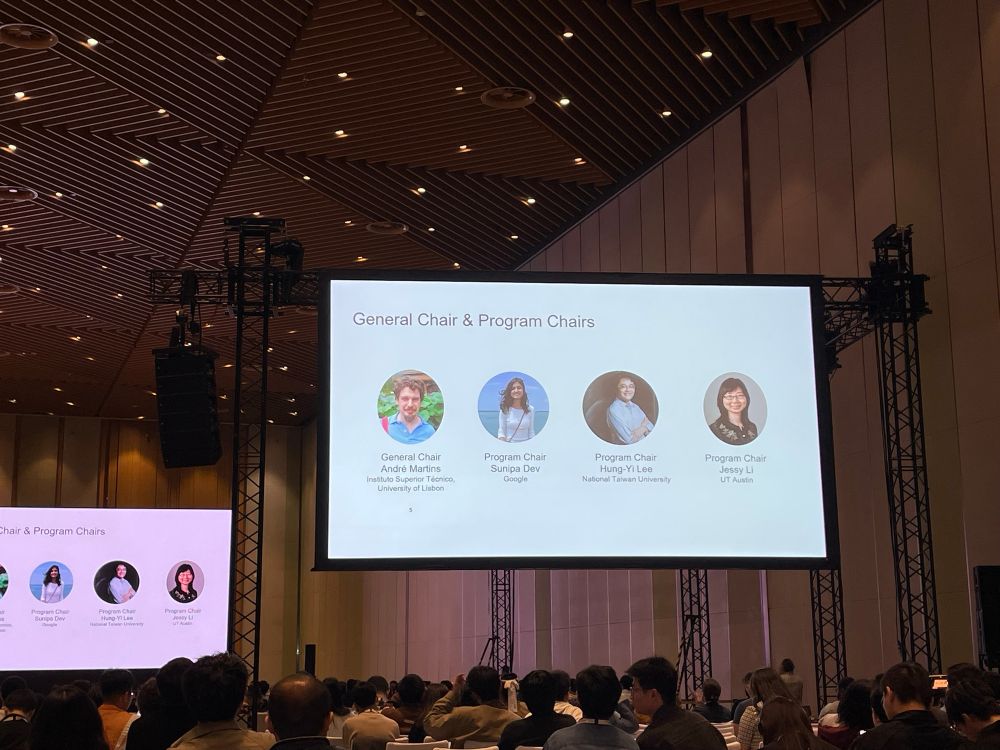

Check out jessyli.com/colm2025

QUDsim: Discourse templates in LLM stories arxiv.org/abs/2504.09373

EvalAgent: retrieval-based eval targeting implicit criteria arxiv.org/abs/2504.15219

RoboInstruct: code generation for robotics with simulators arxiv.org/abs/2405.20179

Not presenting anything but here are two posters you should visit:

1. @qyao.bsky.social on Controlled rearing for direct and indirect evidence for datives (w/ me, @weissweiler.bsky.social and @kmahowald.bsky.social), W morning

Paper: arxiv.org/abs/2503.20850

For my areas see jessyli.com

Check out @sebajoe.bsky.social’s feature on ✨AstroVisBench:

A new benchmark developed by researchers at the NSF-Simons AI Institute for Cosmic Origins is testing how well LLMs implement scientific workflows in astronomy and visualize results.

Explore responsible applications and best practices for maximizing impact and building trust with @utaustin.bsky.social experts @jessyjli.bsky.social & @mackert.bsky.social.

💻: rebrand.ly/HCTS_AI

Recent neural networks capture properties long thought to require symbols: compositionality, productivity, rapid learning

So what role should symbols play in theories of the mind? For our answer...read on!

Paper: arxiv.org/abs/2508.05776

1/n
