Thread with my favourite quotes 👇

1/🧵

CRITICAL THINKING 🤔

“Critical Thinking is deep engagement with the relationships between statements about the world.”

Guest, Suarez, & van Rooij (2025). Towards Critical Artificial Intelligence Literacies. doi.org/10.5281/zeno...

12/🧵

Recently, Mark updated Ch 9 & 10 so they have embedded, runnable, editable code again.

Check it out! ✨

computationalcognitivescience.github.io/lovelace/

doi.org/10.1007/s421...

This preprint discusses how AI can support the field of psychology:

📄 van Rooij, I., & Guest, O. (2025). Combining Psychology with Artificial Intelligence: What could possibly go wrong?

🔗 philpapers.org/rec/VANCPW

Guest, O., Suarez, M., et al. (2025). Against the Uncritical Adoption of 'AI' Technologies in Academia. doi.org/10.5281/zeno...

By @olivia.science @marentierra.bsky.social @altibel.bsky.social @lucyavraamidou.bsky.social @jedbrown.org @felienne.bsky.social, me & others

4/🧵

doi.org/10.5281/zeno...

“Critical AI Literacies for Resisting and Reclaiming”

Organisers and teachers:

👉 @marentierra.bsky.social

👉 @olivia.science

👉 myself

Deadline for application:

🐦 31 March 2026 (early bird fee)

1/🧵

www.ru.nl/en/education...

👉 For many more relevant resources for Critical AI literacy, check out this website maintained by @olivia.science with videos, news, opinion pieces, blogs, articles, posters, and more. 👇

olivia.science/ai

6/🧵

Guest, O., Suarez, M., & van Rooij, I. (2025). Towards Critical Artificial Intelligence Literacies. Zenodo. doi.org/10.5281/zeno...

By @olivia.science @marentierra.bsky.social and me.

5/🧵

As a newly minted UKRN (@ukrepro.bsky.social) Local Network Lead, I'm co-organizing a hybrid event about this next Friday (30th January).

Join us at @livunipsyc.bsky.social or online via Teams!

![In this paper, I turn this frame on its head (cf. Andersen et al., 2023; Bannon, 2011; Bishop, 2021; Nick Dyer-Witheford, 2019; Rogers, 2022; Ryan, 2024; Schmager et al., 2025; Shneiderman, 2022; Steinhoff, 2021; Walton & Nayak, 2021). Using a unique (re)definition of AI that releases us from a correlationist¹ grip, we then examine three triplets as case studies of techno-social relationships between cognition and artifacts. To presage the coming analyses, herein AI is any techno-social relationship that outsources to machines or algorithms some part, however small, of human cognitive labour (cf. van Rooij et al., 2024). I demonstrate that AI is human-centric, not because it behaves like or is designed to be like humans, but because it requires a ghost in the machine, often literally an obfuscated human-in-the-loop to properly function (also see Guest & Martin, 2025) because AI is humans albeit in fetishised (Braune, 2020; Morris, 2017; Mota & Cosentino Filho, 2024), obfuscated forms (e.g. Erscoi et al., 2023). That is, AI “is in reality produced by relations among people [even though it] appears before us in a fantastic form as relations among things” (Pfaffenberger, 1988, p. 250). AI’s “technological veil” hides human cognitive labour (Mota & Cosentino Filho, 2024). I bring this anthropological, sociotechnical and broadly computational cognitive scientific angle to understanding AI, that is unlike “the skills and techniques of behavioural research, previously applied to humans and other animals[, which claim to help us] better understand the ‘psychology’ of complex black-box ANNs” (Wills, 2025, n.p. cf. Raley and Rhee, 2023). In fact behavioural probing of such systems using such experimental techniques assumes their psychological standing to be equal to, or comparable to, biological organisms: it begs the question (see for relevant analyses: Forbes & Guest, 2025; Guest & Martin, 2023, 2024; Raji et al., 2022).](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:vsyj5jtayxcq5dw5mtja4xtb/bafkreifq2kxakpbsa44my235o2jn72u4dzyrqgvnuoldrzlddstahocdxm@jpeg)

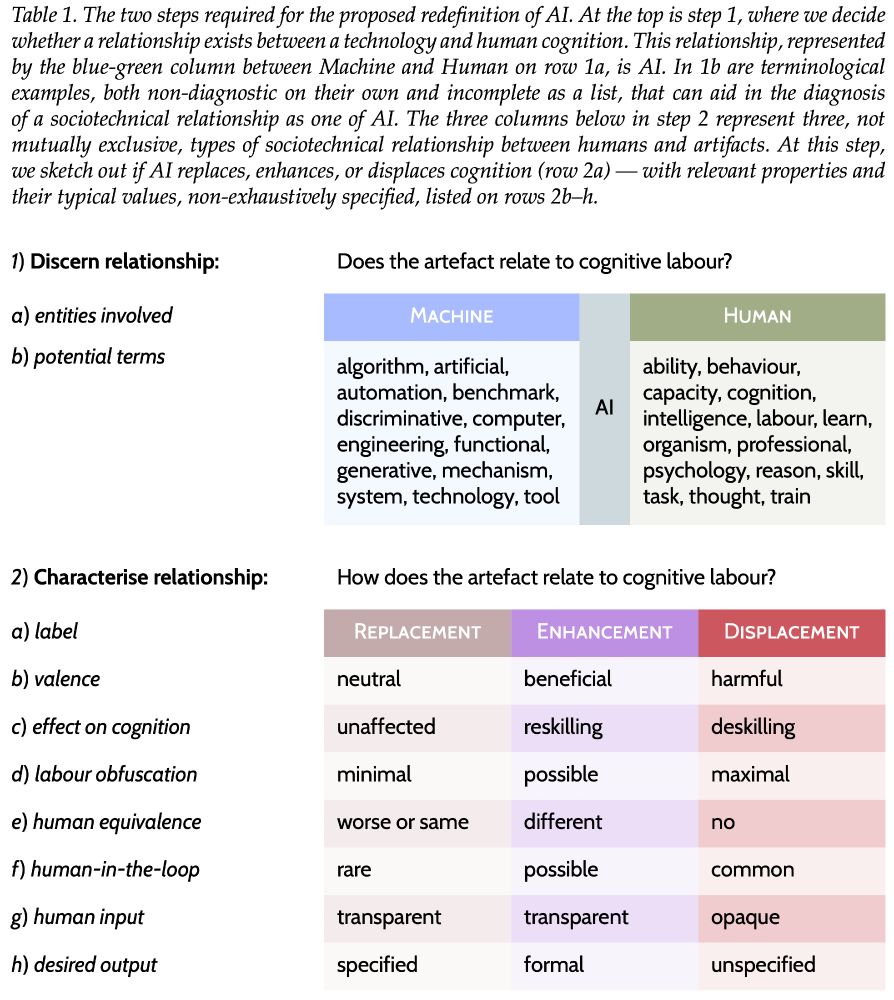

Wherein I analyse HCAI & demonstrate through 3 triplets my new tripartite definition of AI (Table 1) that properly centres the human. 1/n

Οι μεγάλες εταιρείες ΑΙ αποτελούν ένα σύγχρονο παράδειγμα τεχνοφασισμού και συγκεντρωτισμού

The big AI companies are a modern example of technofascism and centralisation

www.philenews.com/politismos/p...

1️⃣ LLMs are usefully seen as lossy content-addressable systems

2️⃣ we can't automatically detect plagiarism

3️⃣ LLMs automate plagiarism & paper mills

4️⃣ we must protect literature from pollution

5️⃣ LLM use is a conflict of interest (CoI)

6️⃣ prompts do not cause output in an authorial sense

2/🧵

Summer School open for applications! www.ru.nl/en/education...

“This course is designed to foster critical AI literacies in participants to empower them to develop ways of resisting or reclaiming AI in their own practices & social context”

Learning goals 👇

3/🧵

@apache.be you rule 🖤

doi.org/10.5281/zeno...

We unpick the tech industry’s marketing, hype, & harm; and we argue for safeguarding higher education, critical thinking, expertise, academic freedom, & scientific integrity.

1/n

> While the AI industry claims its models can “think,” “reason,” and “learn,” their supposed achievements rest on marketing hype & stolen intellectual labor

🧵

bit.ly/48FNcJj

we need to divest of the technology provided by a country that very obviously wants to be our enemy.)