We love to trash dominant hypotheses, but we need to look for evidence against the manifold hypothesis elsewhere:

This elegant work doesn't show that neural dynamics are high-dimensional, nor that we should stop using PCA.

It’s quite the opposite!

(thread)

Our new Nature Communications paper examines a rare population: people born with dense bilateral cataracts, who experience a brief period of blindness during a critical window of visual development.

🔗 rdcu.be/eQjMH

We asked a simple-but-big question:

What changes in the brain when someone becomes an expert?

Using chess ♟️ + fMRI 🧠 + representational geometry & dimensionality 📈, we ask:

1️⃣ WHAT information is encoded?

2️⃣ HOW is it structured?

3️⃣ WHERE is it expressed?

1/n

We went looking for dissociations between types of recurrence in DNNs, but we found something quite different... hopefully that can tell us something about our models!

rdcu.be/eLwBA

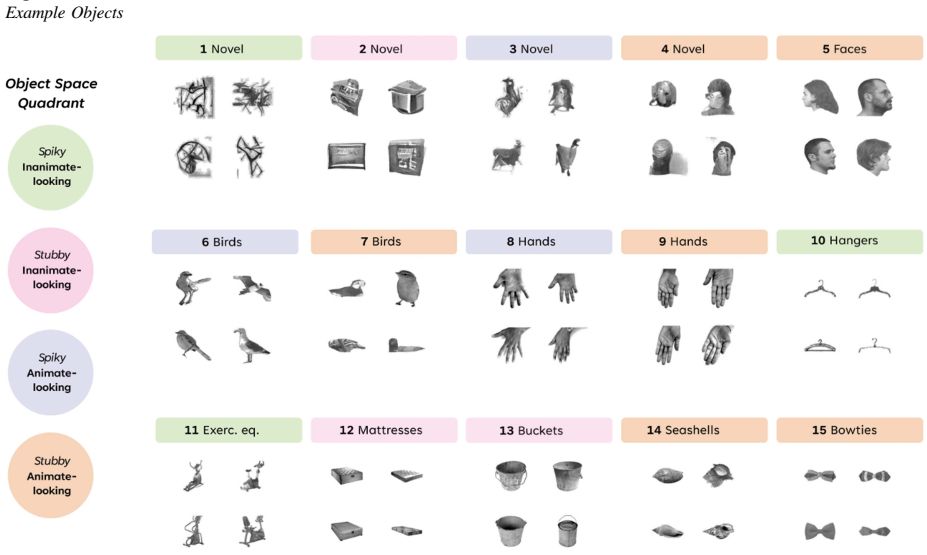

We find a striking dissociation: it’s not shared object recognition. Alignment is driven by sensitivity to texture-like local statistics.

📊 Study: n=57, 624k trials, 5 models doi.org/10.1101/2025...

We 👀 into how #chess experts represent the board, and how the content, structure, and location of these representations shift w/ expertise.⬇️

#neuroskyence

www.thetransmitter.org/neural-dynam...

Our latest study, led by @DrewLinsley, examines how deep neural networks (DNNs) optimized for image categorization align with primate vision, using neural and behavioral benchmarks.

www.pnas.org/doi/10.1073/...

Research.

2025🚨

Join me in Geneva, Switzerland #unige to learn more about colour perception. Using neuroimaging & computational modelling, you'll work with an international & interdisciplinary team to understand how we transform light into a colourful world!🧠👁️🌈 #neurojobs
